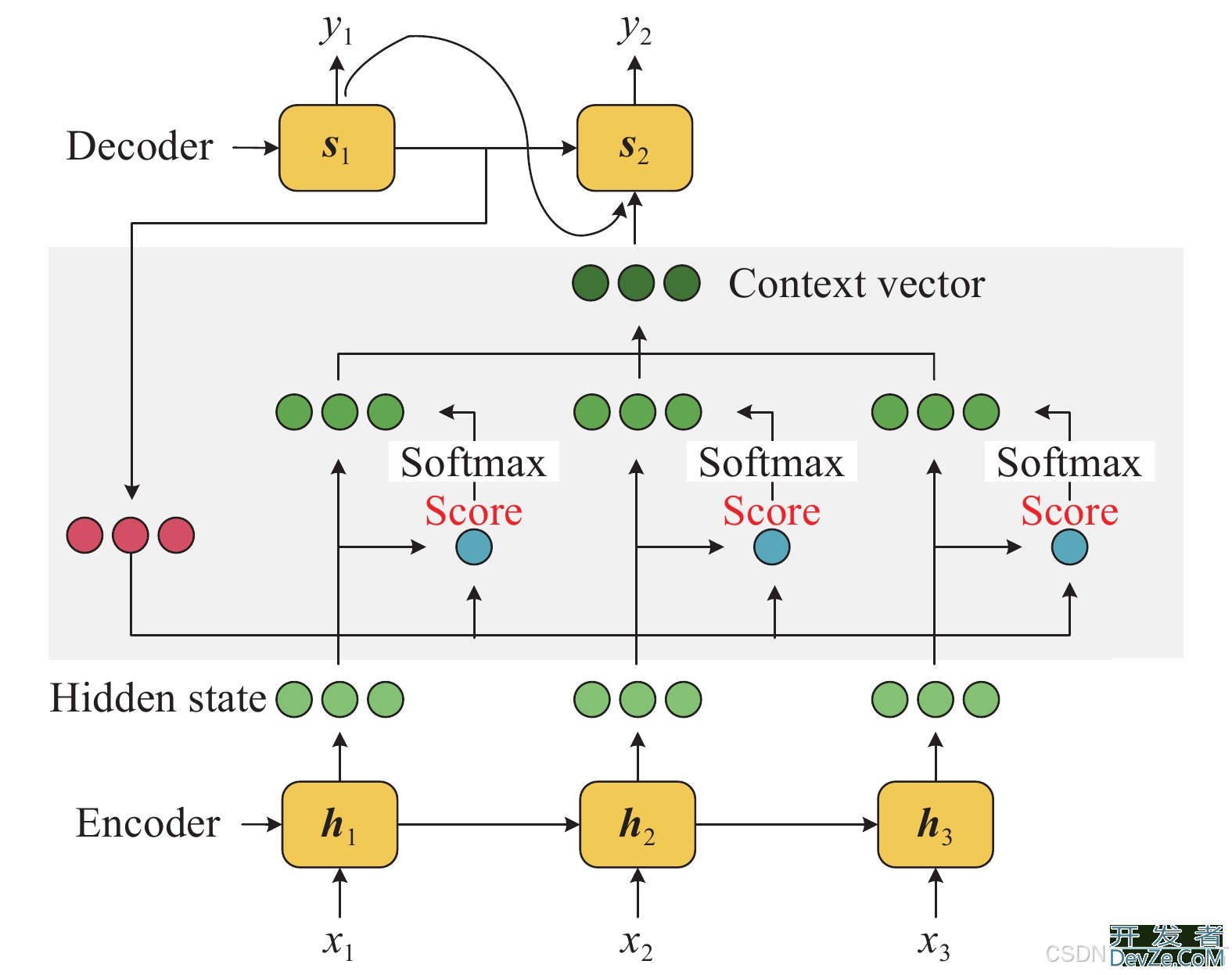

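Contents

- Preface
- I. Basic principles of the Attention mechanism
  - 1. Input representation
  - 2. Computing the attention weights
  - 3. Weighted sum
  - 4. Output
  - 5. Example code
- II. Types of Attention mechanism
  - 1. Soft Attention
  - 2. Hard Attention
  - 3. Self-Attention
  - 4. Multi-Head Attention
- III. Attention mechanism …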

I need to delete all the lines on a subplot and then redraw them. (I'm writing a redraw function to call whenever I add or remove lines.)
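For reference, here is a minimal sketch of what such a redraw function might look like. It assumes the subplot is a matplotlib `Axes` object, which the question does not state outright. The function name `redraw` and the `datasets` argument (a list of `(x, y)` pairs) are made up for illustration and are not taken from the original post.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt


def redraw(ax, datasets):
    """Remove every line currently on `ax`, then plot `datasets` afresh.

    `datasets` is a hypothetical list of (x, y) pairs; adapt it to however
    the line data is actually stored.
    """
    # Iterate over a copy, because Artist.remove() changes ax.lines as we go.
    for line in list(ax.lines):
        line.remove()

    # Restart the colour cycle so redrawn lines begin from the first colour.
    ax.set_prop_cycle(None)

    for x, y in datasets:
        ax.plot(x, y)

    # Recompute the data limits from the remaining artists and rescale the view.
    ax.relim()
    ax.autoscale_view()
    ax.figure.canvas.draw_idle()


fig, ax = plt.subplots()
ax.plot([0, 1, 2], [0, 1, 4])
ax.plot([0, 1, 2], [4, 1, 0])

# Pretend one line was dropped and the other replaced, then redraw.
redraw(ax, [([0, 1], [1, 0])])
```

Only `Line2D` artists held in `ax.lines` are removed here; patches, text, and images on the same axes are left alone. If everything on the axes should go, `ax.cla()` is the blunter alternative, at the cost of also resetting labels and limits.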