I'm building an index of data, which will entail storing lots of triplets in the form (document, term, weight). I will be storing up to a few million such rows. Currently I'm doing this in MySQL as

I want to use HBase as a store where I can push in a few million entries of the format {document => {term => weight}}, e.g. "Insert term X into document Y with weight Z", and then issue a comman
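The {document => {term => weight}} shape can be illustrated with a small in-memory sketch. This is not HBase client code. It only shows how (document, term, weight) triplets group into that nested map, under the assumption that one plausible HBase mapping uses the document as the row key and each term as a column. The function name `build_index` is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical type alias: {document: {term: weight}}
Index = Dict[str, Dict[str, float]]


def build_index(triplets: Iterable[Tuple[str, str, float]]) -> Index:
    """Group (document, term, weight) triplets into {document: {term: weight}}.

    A later triplet for the same (document, term) pair overwrites the
    earlier weight, much like writing the same cell again.
    """
    index: Dict[str, Dict[str, float]] = defaultdict(dict)
    for document, term, weight in triplets:
        # "Insert term X into document Y with weight Z"
        index[document][term] = weight
    return dict(index)
```

For example, `build_index([("doc1", "x", 0.5), ("doc1", "y", 1.0), ("doc2", "x", 2.0)])` yields one entry per document, each holding its own term-to-weight map.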

What's a good method for assigning work to a set of remote machines? Consider an example where the task is very CPU and RAM intensive, but doesn't actually process a large dataset. Th

I have an input, which could be a single primitive or a list or tuple of primitives. I'd like to flatten it to just a list, like so:
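One way to do this is a small recursive helper, sketched below as an assumption rather than a canonical answer. It treats lists and tuples as containers and everything else (including strings) as a primitive, so a lone value is wrapped in a one-element list. The name `flatten` is hypothetical.

```python
from typing import Any, List


def flatten(value: Any) -> List[Any]:
    """Return value as a flat list.

    A single primitive becomes a one-element list; lists and tuples are
    flattened recursively. Strings count as primitives, not sequences.
    """
    if isinstance(value, (list, tuple)):
        result: List[Any] = []
        for item in value:
            result.extend(flatten(item))
        return result
    return [value]
```

For example, `flatten(5)` gives `[5]` and `flatten((1, [2, (3,)], "a"))` gives `[1, 2, 3, "a"]`. The recursion is more than a flat list or tuple of primitives strictly needs, but it costs nothing for that case.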

Using only a mapper (a Python script) and no reducer, how can I output a separate file with the key as the filename, for each line of output, rather than having long files of output? The
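A minimal sketch of the per-key splitting logic, assuming Hadoop Streaming's default convention that the key is everything before the first tab. The function name `split_by_key` and the output directory are hypothetical. Note that a streaming mapper writing files this way puts them on the local disk of whichever node runs the task, not in HDFS, so the files would still need to be collected or copied afterwards.

```python
import os
from typing import Iterable


def split_by_key(lines: Iterable[str], out_dir: str) -> None:
    """Append each line's value to a file named after its key.

    Assumes tab-separated "key<TAB>value" lines (the streaming default).
    Files are created under out_dir on the local filesystem.
    """
    os.makedirs(out_dir, exist_ok=True)
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue  # skip blank lines
        key, _, value = line.partition("\t")
        # Replace path separators so a key cannot escape out_dir.
        safe_key = key.replace(os.sep, "_").replace("/", "_")
        if safe_key in {"", ".", ".."}:
            safe_key = "_" + safe_key  # avoid empty or special names
        path = os.path.join(out_dir, safe_key)
        with open(path, "a", encoding="utf-8") as f:
            f.write(value + "\n")
```

In a streaming mapper script this would be called as `split_by_key(sys.stdin, "by_key")`. Opening the file once per line keeps the sketch simple; with many lines per key, caching open file handles would be faster.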

Is it possible to run Hadoop so that it only uses spare CPU cycles? I.e., would it be feasible to install Hadoop on people's work machines so that number crunching can be done when they are not using the

Lots of "BAW"s (big ass-websites) are using data storage and retrieval techniques that rely on huge tables with indexes, and using queries that won't/can't use JOINs in their queries

I need to write data into Hadoop (HDFS) from external sources like a Windows box. Right now I have been copying the data onto the namenode and using HDFS's put command to ingest it into the cluster.

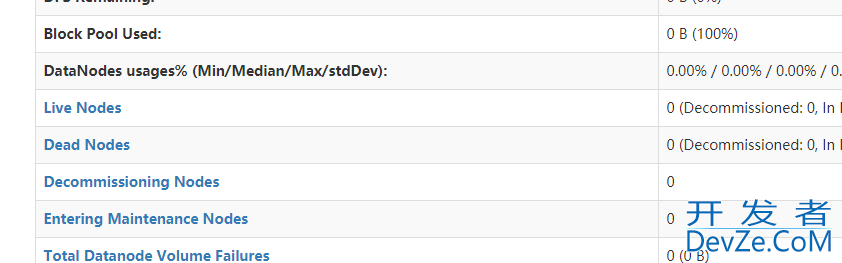

I cloned the Bigtop code from GitHub, built RPMs, and installed the Hadoop cluster using Ambari. The Hadoop cluster had 3 nodes. When NameNode HA is enabled from Ambari, the services do not star

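This article covers common points to watch out for when setting up a Hadoop 3.2.0 cluster. It explains them in detail with example code and should be a useful reference for study or work; anyone who needs it can refer to it.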