Contents: Intent; Intent types; Intent Filter. Intent: Activity, Service, and BroadcastReceiver components communicate with one another through Intent.

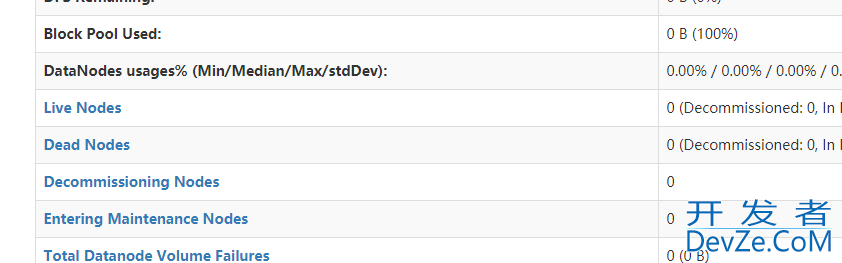

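This article covers common points to watch for when setting up a Hadoop 3.2.0 cluster and walks through them in detail with example code. It should be a useful reference for study or work.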

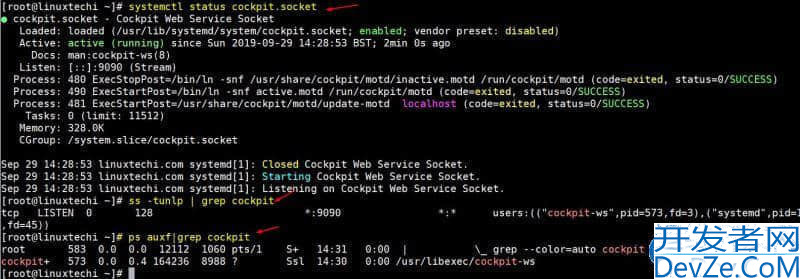

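Cockpit is a web-based server management tool available for CentOS and RHEL systems. In the recently released CentOS 8 and RHEL 8, Cockpit is the default server management tool. This article explains how to install and use Cockpit on CentOS 8/RHEL 8.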