A Detailed Guide to Using Dataset in PyTorch

This example shows how to load and process the FashionMNIST Dataset from TorchVision.

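root is the directory where the train/test data is stored.

train selects the training split (True) or the test split (False).

download=True downloads the data from the internet if it is not already present in root.

transform and target_transform specify the feature and label transformations.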

import torch
from torch.utils.data import Dataset
from torchvision import datasets
from torchvision.transforms import ToTensor
import matplotlib.pyplot as plt

training_data = datasets.FashionMNIST(
    root="data",
    train=True,
    download=True,
    transform=ToTensor()
)

test_data = datasets.FashionMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor()
)

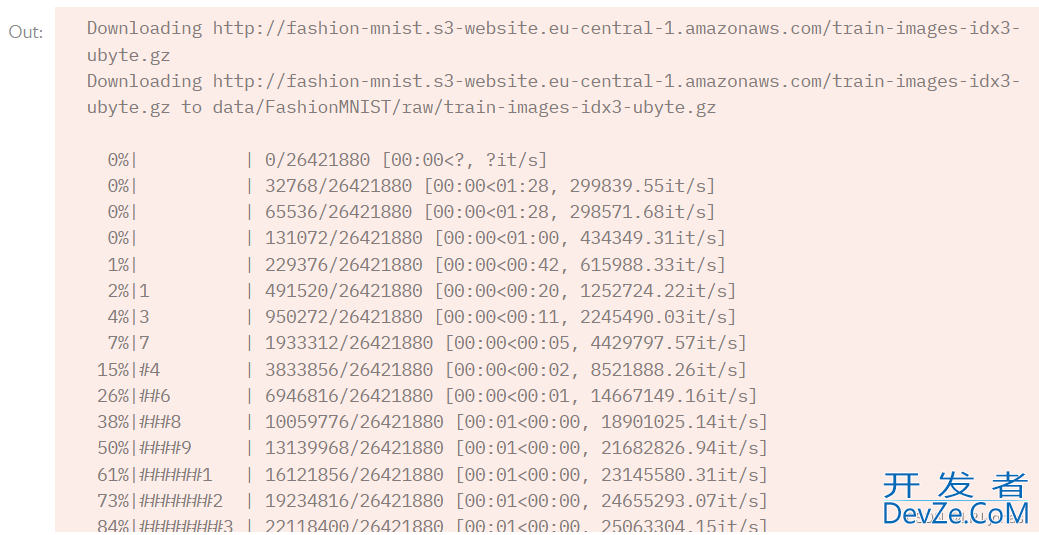

运行得到的结果是这样的:
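The loading code above leaves target_transform unset, so labels come back as plain integers. As a minimal sketch of what a label transformation could look like, the hypothetical helper to_one_hot below turns an integer class label into a one-hot float vector. The helper name and the choice of one-hot encoding are assumptions for illustration and are not part of the original example.

```python
import torch
import torch.nn.functional as F

NUM_CLASSES = 10  # FashionMNIST has 10 classes


def to_one_hot(label: int) -> torch.Tensor:
    """Hypothetical label transform: integer class index -> one-hot float vector."""
    return F.one_hot(torch.tensor(label), num_classes=NUM_CLASSES).float()


# Encode class 3 ("Dress" in this dataset's label scheme)
encoded = to_one_hot(3)
print(encoded)
```

A function like this could be passed as target_transform=to_one_hot when constructing the dataset, in the same way ToTensor() is passed as transform.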

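Iterating Over and Visualizing the Dataset

A Dataset can be indexed manually like a list. Here we pick a random index, sample_idx, for each subplot and draw the samples with matplotlib: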

# Map numeric class indices to human-readable names
labels_map = {
    0: "T-Shirt",
    1: "Trouser",
    2: "Pullover",
    3: "Dress",
    4: "Coat",
    5: "Sandal",
    6: "Shirt",
    7: "Sneaker",
    8: "Bag",
    9: "Ankle Boot",
}

figure = plt.figure(figsize=(8, 8))
cols, rows = 3, 3
for i in range(1, cols * rows + 1):
    # Pick a random index into the training set
    sample_idx = torch.randint(len(training_data), size=(1,)).item()
    img, label = training_data[sample_idx]
    figure.add_subplot(rows, cols, i)
    plt.title(labels_map[label])
    plt.axis("off")
    # img has shape (1, 28, 28); squeeze() drops the channel dimension
    plt.imshow(img.squeeze(), cmap="gray")
plt.show()

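Evaluating training_data on its own (for example in a notebook cell) prints a summary of the dataset:

training_data

The expression used inside the loop draws one random valid index and converts it to a Python int:

torch.randint(len(training_data), size=(1,)).item()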
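To see the random-index expression in isolation, the sketch below draws nine indices without downloading anything. The variable dataset_size stands in for len(training_data) and is set to 60000, the size of the FashionMNIST training split; the fixed seed is an assumption added only so that repeated runs draw the same indices.

```python
import torch

dataset_size = 60000  # stand-in for len(training_data)

torch.manual_seed(0)  # fixed seed only for repeatable draws

# torch.randint(high, size=(1,)) returns a 1-element tensor with a value in [0, high);
# .item() converts that tensor to a plain Python int usable as a dataset index.
indices = [torch.randint(dataset_size, size=(1,)).item() for _ in range(9)]

print(indices)
```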

Creating a Custom Dataset for Your Own Files

A custom Dataset class must implement three functions: __init__, __len__, and __getitem__.
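Before involving any files, the three methods can be sketched on a toy example. SquaresDataset below is a hypothetical class invented for illustration: it holds no data on disk and simply returns the pair (i, i squared) for index i.

```python
import torch
from torch.utils.data import Dataset


class SquaresDataset(Dataset):
    """Hypothetical toy dataset: sample i is the pair (i, i squared)."""

    def __init__(self, size):
        # __init__ runs once and stores whatever is needed to find samples later
        self.size = size

    def __len__(self):
        # __len__ reports how many samples the dataset contains
        return self.size

    def __getitem__(self, idx):
        # __getitem__ builds and returns the sample at position idx
        if not 0 <= idx < self.size:
            raise IndexError(idx)
        x = torch.tensor(float(idx))
        return x, x ** 2


toy = SquaresDataset(5)
x, y = toy[3]
print(len(toy), x.item(), y.item())
```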

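In the implementation below, the images are stored in the directory img_dir, and their labels are stored separately in the CSV file annotations_file.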

import os
import pandas as pd
from torch.utils.data import Dataset
from torchvision.io import read_image

class CustomImageDataset(Dataset):
    def __init__(self, annotations_file, img_dir, transform=None, target_transform=None):
        # Column 0 of the CSV holds image file names, column 1 holds labels
        self.img_labels = pd.read_csv(annotations_file)
        self.img_dir = img_dir
        self.transform = transform
        self.target_transform = target_transform

    def __len__(self):
        # Number of samples equals the number of rows in the annotations file
        return len(self.img_labels)

    def __getitem__(self, idx):
        # Build the image path, read the image as a tensor, and look up its label
        img_path = os.path.join(self.img_dir, self.img_labels.iloc[idx, 0])
        image = read_image(img_path)
        label = self.img_labels.iloc[idx, 1]
        if self.transform:
            image = self.transform(image)
        if self.target_transform:
            label = self.target_transform(label)
        return image, label
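The class above assumes a particular layout for the annotations file: the image file name in the first column and the label in the second. The sketch below writes a small CSV in that layout to a temporary directory and performs the same lookups as __getitem__ using pandas alone. The file names, the header row, and the directory name "images" are all hypothetical values chosen for illustration.

```python
import os
import tempfile

import pandas as pd

# Hypothetical annotations file: a header row, then one row per image
csv_text = "file_name,label\ntshirt1.jpg,0\ntshirt2.jpg,0\nankleboot999.jpg,9\n"

with tempfile.TemporaryDirectory() as tmp_dir:
    annotations_file = os.path.join(tmp_dir, "labels.csv")
    with open(annotations_file, "w") as f:
        f.write(csv_text)
    # Same call as in CustomImageDataset.__init__
    img_labels = pd.read_csv(annotations_file)

img_dir = "images"  # hypothetical image directory
idx = 2

# Same lookups as in CustomImageDataset.__getitem__
img_path = os.path.join(img_dir, img_labels.iloc[idx, 0])
label = img_labels.iloc[idx, 1]

print(len(img_labels), img_path, label)
```

Note that pd.read_csv treats the first line as a header by default. If the annotations file has no header row, the first image would be consumed as column names, so passing header=None to pd.read_csv would be needed in that case.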
