Python's Two Major Package and Dependency Management Tools Explained (Poetry vs Pipenv)

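Contents

- 1. Introduction
- 2. The Evolution of Python Dependency Management
  - 2.1 Limitations of Traditional Tools
  - 2.2 Requirements of Modern Dependency Management
- 3. Pipenv in Depth
  - 3.1 Pipenv's Design Philosophy
  - 3.2 Pipenv's Core Features
  - 3.3 Pipenv's Advanced Features
- 4. Poetry in Depth
  - 4.1 Poetry's Design Philosophy
  - 4.2 Poetry's Core Features
  - 4.3 Poetry's Advanced Features
- 5. Detailed Comparison
  - 5.1 Feature Comparison
  - 5.2 Performance Benchmark
- 6. Real-World Migration Case
- 7. Best Practices and Recommendations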

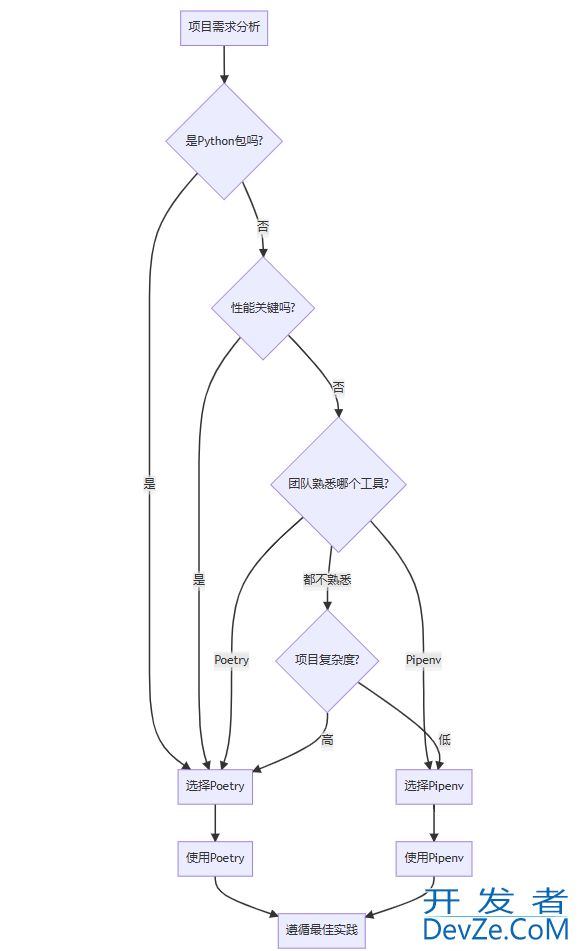

  - 7.1 Selection Guide
  - 7.2 General Best Practices
- 8. Summary
  - 8.1 Key Conclusions
  - 8.2 Future Outlook
  - 8.3 Final Recommendations

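1. Introduction

In modern Python development, dependency management is crucial but often overlooked. As a project grows and takes on more third-party dependencies, you need to manage those dependencies effectively and keep development, testing, and production environments consistent. Every Python developer eventually has to deal with this problem.

Traditional tools such as pip and virtualenv are powerful, but they are often inconvenient in practice. A requirements.txt file does not enforce strict version locking, dependencies can conflict across environments, and dependency resolution can be slow. These problems drove the development of more advanced tools.

Poetry and Pipenv are two modern Python dependency management tools that grew out of this situation. Both aim to solve the problems of the traditional tools and to offer a cleaner, more reliable experience. The two tools differ noticeably in design philosophy, features, and user experience.

This article compares Poetry and Pipenv from a practical standpoint. Detailed examples and project walkthroughs show how the tools differ and help you choose the one that fits. The comparison is aimed at both newcomers to Python and experienced developers.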

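2. The Evolution of Python Dependency Management

2.1 Limitations of Traditional Tools

Before looking at Poetry and Pipenv in detail, let's review the traditional approach to Python dependency management and the problems it runs into.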

```
# Traditional requirements.txt example
# This format lacks strict version locking and easily leads to dependency conflicts
Django>=3.2,<4.0
requests==2.25.1
numpy>=1.19.0
pandas
```

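The main problems with the traditional toolchain are:

- Imprecise version management: requirements.txt usually specifies only loose version ranges
- Dependency conflicts: managing complex dependency relationships by hand easily leads to conflicts
- Weak environment isolation: virtualenv does provide isolation, but setting it up is tedious
- Lack of determinism: installing at different times can produce different dependency versions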
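To make the first and last points concrete, here is a minimal sketch that flags requirements which are not pinned with an exact `==`. The function name `find_unpinned` is hypothetical, and the parser is deliberately simplified: it skips pip options such as `-r` or `-e` and ignores environment markers. Even a file with every line pinned this way still leaves transitive dependencies unpinned, which is the gap that lock files close.

```python
import re

# "name", optional "[extras]", then whatever version specifier follows.
# Simplified on purpose: not a full PEP 508 parser.
REQ_LINE = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)(\[[^\]]*\])?\s*(.*)$")


def find_unpinned(lines):
    """Return names of requirements that are not pinned with a single '=='."""
    unpinned = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options like -r / -e
        match = REQ_LINE.match(line)
        if not match:
            continue
        name, _extras, spec = match.groups()
        spec = spec.strip()
        # Exactly one "==" clause without wildcards counts as pinned.
        if not (spec.startswith("==") and "," not in spec and "*" not in spec):
            unpinned.append(name)
    return unpinned


example = """\
Django>=3.2,<4.0
requests==2.25.1
numpy>=1.19.0
pandas
"""
print(find_unpinned(example.splitlines()))  # ['Django', 'numpy', 'pandas']
```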

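2.2 Requirements of Modern Dependency Management

Modern Python projects place higher demands on dependency management:

- Deterministic builds: the same dependency set can be reproduced at any time, in any environment
- Dependency resolution: complex dependency conflicts are resolved automatically
- Environment management: creating and managing virtual environments is simple
- Publishing support: packages can be built and published
- Security: dependencies are scanned for vulnerabilities and updates are managed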
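A deterministic build means the installed versions match what was locked. The following sketch shows how you might detect drift between a lock and the current interpreter's environment. It assumes the lock has already been reduced to a plain `{name: version}` mapping, for example from Pipfile.lock or poetry.lock. The function name `diff_against_lock` is hypothetical, and it uses only the standard library's `importlib.metadata`.

```python
from importlib import metadata


def diff_against_lock(locked):
    """Compare a {name: version} mapping with what is installed right now.

    Returns {name: (locked_version, installed_version_or_None)} for every
    package whose installed version differs from the locked one.
    """
    drift = {}
    for name, wanted in locked.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # the package is not installed at all
        if installed != wanted:
            drift[name] = (wanted, installed)
    return drift


# Hypothetical lock content; an empty result means the environment matches.
print(diff_against_lock({"surely-not-installed-pkg-123": "1.0.0"}))
```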

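3. Pipenv in Depth

3.1 Pipenv's Design Philosophy

Kenneth Reitz released Pipenv in 2017. It combines the best practices of pip and virtualenv and bills itself as a "Python Development Workflow for Humans". Its core design principles are:

- Manage project dependencies and the virtual environment together
- Replace requirements.txt with Pipfile and Pipfile.lock
- Provide deterministic dependency resolution
- Simplify dependency management from development to production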

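3.2 Pipenv's Core Features

Installation and basic usage

```bash
# Install Pipenv
pip install pipenv

# Create a new project
mkdir my-project && cd my-project

# Initialize the virtual environment (created automatically)
pipenv install

# Install a production dependency
pipenv install django==4.0.0

# Install a development dependency
pipenv install --dev pytest

# Activate the virtual environment
pipenv shell

# Run a command without activating the environment
pipenv run python manage.py runserver
```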

Pipfile structure

```toml
# Example Pipfile
[[source]]
url = "https://pypi.org/simple"
verify_ssl = true
name = "pypi"

[packages]
django = "==4.0.0"
requests = "*"
numpy = { version = ">=1.21.0", markers = "python_version >= '3.8'" }

[dev-packages]
pytest = ">=6.0.0"
black = "*"

[requires]
python_version = "3.9"
```

A complete Pipenv workflow example

```python
#!/usr/bin/env python3
"""
Pipenv project example: a simple web API

This example shows how to manage the dependencies of a Flask web API
project with Pipenv.
"""
import os
import sys


def setup_pipenv_project(project_name="flask-api-project"):
    """Set up a Flask project that uses Pipenv."""
    # Create the project directory
    os.makedirs(project_name, exist_ok=True)
    os.chdir(project_name)

    # Pipfile content
    pipfile_content = '''[[source]]
url = "https://pypi.org/simple"
verify_ssl = true
name = "pypi"

[packages]
flask = "==2.3.3"
flask-restx = "==1.1.0"
python-dotenv = "==1.0.0"
requests = "==2.31.0"
sqlalchemy = "==2.0.23"

[dev-packages]
pytest = "==7.4.3"
pytest-flask = "==1.2.0"
black = "==23.9.1"
flake8 = "==6.1.0"

[requires]
python_version = "3.9"
'''

    # Create the Pipfile
    with open('Pipfile', 'w') as f:
        f.write(pipfile_content)

    print(f"Created project {project_name}")
    print("Pipfile generated")

    # Sample application code
    app_code = '''from flask import Flask
from flask_restx import Api, Resource, fields

app = Flask(__name__)
api = Api(app, version='1.0', title='Flask API',
          description='A sample API with Pipenv')

# Namespace
ns = api.namespace('items', description='Item operations')

# Data model
item_model = api.model('Item', {
    'id': fields.Integer(readonly=True, description='Item identifier'),
    'name': fields.String(required=True, description='Item name'),
    'description': fields.String(description='Item description')
})

# Mock data
items = [
    {'id': 1, 'name': 'Item 1', 'description': 'First item'},
    {'id': 2, 'name': 'Item 2', 'description': 'Second item'}
]


@ns.route('/')
class ItemList(Resource):
    @ns.marshal_list_with(item_model)
    def get(self):
        """Return all items"""
        return items


@ns.route('/<int:id>')
@ns.response(404, 'Item not found')
@ns.param('id', 'Item identifier')
class Item(Resource):
    @ns.marshal_with(item_model)
    def get(self, id):
        """Return an item by ID"""
        for item in items:
            if item['id'] == id:
                return item
        api.abort(404, f"Item {id} not found")


if __name__ == '__main__':
    app.run(debug=True, host='0.0.0.0', port=5000)
'''

    # Create the application file
    with open('app.py', 'w') as f:
        f.write(app_code)

    # Test file
    test_code = '''import pytest
from app import app


@pytest.fixture
def client():
    app.config['TESTING'] = True
    with app.test_client() as client:
        yield client


def test_get_items(client):
    """Fetch all items"""
    response = client.get('/items/')
    assert response.status_code == 200
    data = response.get_json()
    assert len(data) == 2
    assert data[0]['name'] == 'Item 1'


def test_get_item(client):
    """Fetch a single item"""
    response = client.get('/items/1')
    assert response.status_code == 200
    data = response.get_json()
    assert data['name'] == 'Item 1'


def test_get_nonexistent_item(client):
    """Fetch an item that does not exist"""
    response = client.get('/items/999')
    assert response.status_code == 404
'''

    # Create the test file
    with open('test_app.py', 'w') as f:
        f.write(test_code)

    # Environment variable file (Flask 2.3 removed FLASK_ENV; FLASK_DEBUG replaces it)
    with open('.env', 'w') as f:
        f.write('FLASK_DEBUG=1\n')
        f.write('SECRET_KEY=your-secret-key-here\n')

    print("Project files created")
    print("\nNext steps:")
    # --dev also installs [dev-packages], which step 4 needs for pytest
    print("1. Run: pipenv install --dev")
    print("2. Run: pipenv shell")
    print("3. Run: python app.py")
    print("4. In another terminal, run: pipenv run pytest")


if __name__ == "__main__":
    if len(sys.argv) > 1:
        setup_pipenv_project(sys.argv[1])
    else:
        setup_pipenv_project()
```

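3.3 Pipenv's Advanced Features

Dependency security scanning

```bash
# Check dependencies for known security vulnerabilities
pipenv check

# List outdated dependencies
pipenv update --outdated

# Update a specific package
pipenv update package-name
```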

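Environment management

```bash
# Show the dependency graph
pipenv graph

# Show project information
pipenv --where   # project path
pipenv --venv    # virtual environment path
pipenv --py      # Python interpreter path

# Uninstall packages that are not listed in Pipfile.lock
pipenv clean
```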
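`pipenv graph` prints installed packages as a tree of their dependencies. The sketch below illustrates only the idea: it renders an in-memory `{package: [dependencies]}` mapping as an indented tree and guards against cycles. The helper `render_tree` and the sample data are hypothetical. The real command reads installed package metadata and also shows required and installed versions.

```python
def render_tree(graph, roots, indent="  "):
    """Render a dependency graph {package: [dependencies]} as indented text."""
    lines = []

    def walk(name, depth, seen):
        prefix = "- " if depth else ""
        lines.append(f"{indent * depth}{prefix}{name}")
        if name in seen:  # already on this path: stop to avoid infinite cycles
            return
        for dep in graph.get(name, []):
            walk(dep, depth + 1, seen | {name})

    for root in roots:
        walk(root, 0, frozenset())
    return "\n".join(lines)


# Hypothetical data, loosely modelled on requests' direct dependencies.
graph = {"requests": ["certifi", "charset-normalizer", "idna", "urllib3"]}
print(render_tree(graph, ["requests"]))
```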

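Locking and deployment

```bash
# Generate the lock file
pipenv lock

# Install in production from the lock file; aborts if Pipfile.lock is out of date
pipenv install --deploy

# Ignore the Pipfile and install only from Pipfile.lock
pipenv install --ignore-pipfile
```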
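Pipfile.lock is JSON. Its `default` and `develop` sections map each package name to an entry whose `version` field already carries the `==` prefix. Recent Pipenv releases provide `pipenv requirements` for exporting the lock. The sketch below only illustrates the file's shape by turning it into pinned requirements.txt-style lines. The helper `lock_to_requirements` and the sample lock are hypothetical, and hashes are omitted for brevity.

```python
import json


def lock_to_requirements(lock_text, include_dev=False):
    """Turn Pipfile.lock JSON into pinned requirements.txt-style lines."""
    lock = json.loads(lock_text)
    sections = ["default"] + (["develop"] if include_dev else [])
    lines = []
    for section in sections:
        for name, info in sorted(lock.get(section, {}).items()):
            line = name + info.get("version", "")  # "version" already has "=="
            if info.get("markers"):
                line += f"; {info['markers']}"  # keep environment markers
            lines.append(line)
    return lines


sample_lock = json.dumps({
    "_meta": {"requires": {"python_version": "3.9"}},
    "default": {"django": {"version": "==4.0.0"},
                "asgiref": {"version": "==3.7.2"}},
    "develop": {"pytest": {"version": "==7.4.3"}},
})
print(lock_to_requirements(sample_lock, include_dev=True))
```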

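4. Poetry in Depth

4.1 Poetry's Design Philosophy

Sébastien Eustace created Poetry to give Python a dependency management experience similar to npm for JavaScript or Cargo for Rust. Its core design principles are:

- One tool for both dependency management and package publishing
- pyproject.toml as the standard configuration file
- A powerful dependency resolution algorithm
- Management of the full package lifecycle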

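4.2 Poetry's Core Features

Installation and basic usage

```bash
# Install Poetry with the official installer
curl -sSL https://install.python-poetry.org | python3 -

# Create a new project
poetry new my-project
cd my-project

# Or initialize an existing project
poetry init

# Add a dependency
poetry add django@^4.0.0

# Add a development dependency (Poetry 1.2+; older releases used --dev)
poetry add --group dev pytest

# Install all dependencies
poetry install

# Run a command
poetry run python manage.py runserver

# Activate the virtual environment
# (Poetry 2.x moved this command into the poetry-plugin-shell plugin)
poetry shell
```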

pyproject.toml structure

```toml
# Example pyproject.toml
[tool.poetry]
name = "my-project"
version = "0.1.0"
description = "A sample Python project"
authors = ["Your Name <you@example.com>"]
readme = "README.md"
packages = [{include = "my_project"}]

[tool.poetry.dependencies]
python = "^3.8"
django = "^4.0.0"
requests = "^2.25.0"

[tool.poetry.group.dev.dependencies]
pytest = "^7.0.0"
black = "^23.0.0"

[build-system]
requires = ["poetry-core>=1.0.0"]
build-backend = "poetry.core.masonry.api"
```
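Most constraints above use Poetry's caret syntax. According to Poetry's documentation, a caret allows updates that do not change the left-most non-zero component that was written. So `^4.0.0` means `>=4.0.0,<5.0.0`, `^3.8` means `>=3.8.0,<4.0.0`, and `^0.10.0` means `>=0.10.0,<0.11.0`. The sketch below expands a caret constraint into an explicit range. The helper `caret_range` is hypothetical and handles only numeric `MAJOR[.MINOR[.PATCH]]` versions without pre-release tags.

```python
def caret_range(constraint):
    """Expand a caret constraint such as "^4.0.0" into an explicit >=,< range."""
    parts = [int(p) for p in constraint.lstrip("^").split(".")]
    if not 1 <= len(parts) <= 3:
        raise ValueError(f"unsupported constraint: {constraint}")
    lower = parts + [0] * (3 - len(parts))
    # Bump the left-most non-zero component that was written; if every
    # written component is zero, bump the last written one instead.
    idx = next((i for i, p in enumerate(parts) if p != 0), len(parts) - 1)
    upper = lower[:idx] + [lower[idx] + 1] + [0] * (2 - idx)

    def fmt(version):
        return ".".join(str(p) for p in version)

    return f">={fmt(lower)},<{fmt(upper)}"


for spec in ["^4.0.0", "^3.8", "^0.10.0", "^0.0.3"]:
    print(spec, "->", caret_range(spec))
```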

A complete Poetry workflow example

````python
#!/usr/bin/env python3
"""
Poetry project example: a data-processing Python package

This example shows how to manage the dependencies and publishing of a
data-processing package with Poetry.
"""
import os
import shutil
import subprocess
import sys


def setup_poetry_project(project_name="data-processor"):
    """Set up a data-processing project that uses Poetry."""
    # Remove the directory first if it already exists
    if os.path.exists(project_name):
        shutil.rmtree(project_name)

    # Create the new project with Poetry (raises if the command fails)
    subprocess.run(["poetry", "new", project_name], check=True)
    os.chdir(project_name)

    # Replace the generated pyproject.toml
    pyproject_content = '''[tool.poetry]
name = "data-processor"
version = "0.1.0"
description = "A powerful data processing library"
authors = ["Data Scientist <data@example.com>"]
readme = "README.md"
packages = [{include = "data_processor"}]
license = "MIT"

[tool.poetry.dependencies]
python = "^3.8"
pandas = "^2.0.0"
numpy = "^1.24.0"
requests = "^2.31.0"
click = "^8.1.0"
python-dotenv = "^1.0.0"

[tool.poetry.group.dev.dependencies]
pytest = "^7.4.0"
pytest-cov = "^4.1.0"
black = "^23.0.0"
flake8 = "^6.0.0"
mypy = "^1.5.0"
jupyter = "^1.0.0"

[tool.poetry.scripts]
process-data = "data_processor.cli:main"

[build-system]
requires = ["poetry-core>=1.0.0"]
build-backend = "poetry.core.masonry.api"

[tool.black]
line-length = 88
target-version = ['py38']
'''

    with open('pyproject.toml', 'w') as f:
        f.write(pyproject_content)

    print(f"Created project {project_name}")

    # Create the package and test directories
    os.makedirs('data_processor', exist_ok=True)
    os.makedirs('tests', exist_ok=True)

    # Package __init__.py
    with open('data_processor/__init__.py', 'w') as f:
        f.write('''"""
Data Processor - A powerful data processing library.

This package provides utilities for data loading, transformation,
and analysis with support for multiple data sources.
"""

__version__ = "0.1.0"
__author__ = "Data Scientist <data@example.com>"

from data_processor.core import DataProcessor
from data_processor.loaders import CSVLoader, JSONLoader
from data_processor.transformers import Cleaner, Transformer

__all__ = [
    "DataProcessor",
    "CSVLoader",
    "JSONLoader",
    "Cleaner",
    "Transformer",
]
''')

    # Core module
    core_code = '''import logging
from typing import Any, Dict, List, Optional, Union

import pandas as pd

logger = logging.getLogger(__name__)


class DataProcessor:
    """
    Core data processor class.

    Provides a unified interface for loading, transforming and analyzing data.
    """

    def __init__(self):
        self.data = None
        self.transformations = []
        logger.info("DataProcessor initialized")

    def load_data(self, data: Union[str, pd.DataFrame], **kwargs) -> 'DataProcessor':
        """
        Load data.

        Args:
            data: file path or DataFrame
            **kwargs: extra arguments passed to the loader

        Returns:
            self, to allow method chaining
        """
        if isinstance(data, str):
            if data.endswith('.csv'):
                from .loaders import CSVLoader
                loader = CSVLoader()
            elif data.endswith('.json'):
                from .loaders import JSONLoader
                loader = JSONLoader()
            else:
                raise ValueError(f"Unsupported file format: {data}")
            self.data = loader.load(data, **kwargs)
        elif isinstance(data, pd.DataFrame):
            self.data = data.copy()
        else:
            raise TypeError("data must be a file path or DataFrame")

        logger.info(f"Loaded data with shape: {self.data.shape}")
        return self

    def clean(self, **kwargs) -> 'DataProcessor':
        """
        Clean the data.

        Args:
            **kwargs: cleaning options

        Returns:
            self, to allow method chaining
        """
        from .transformers import Cleaner
        cleaner = Cleaner(**kwargs)
        self.data = cleaner.transform(self.data)
        self.transformations.append(('clean', kwargs))
        logger.info("Data cleaned")
        return self

    def transform(self, operations: List[Dict[str, Any]]) -> 'DataProcessor':
        """
        Transform the data.

        Args:
            operations: list of transformation operations

        Returns:
            self, to allow method chaining
        """
        from .transformers import Transformer
        transformer = Transformer()
        self.data = transformer.transform(self.data, operations)
        self.transformations.append(('transform', operations))
        logger.info(f"Applied {len(operations)} transformations")
        return self

    def analyze(self) -> Dict[str, Any]:
        """
        Analyze the data.

        Returns:
            Dict: analysis results
        """
        if self.data is None:
            raise ValueError("No data loaded. Call load_data() first.")

        analysis = {
            'shape': self.data.shape,
            'columns': list(self.data.columns),
            'dtypes': self.data.dtypes.to_dict(),
            'null_counts': self.data.isnull().sum().to_dict(),
            'memory_usage': self.data.memory_usage(deep=True).sum(),
        }

        # Summary statistics for numeric columns
        numeric_cols = self.data.select_dtypes(include=['number']).columns
        if len(numeric_cols) > 0:
            analysis['numeric_stats'] = self.data[numeric_cols].describe().to_dict()

        logger.info("Analysis completed")
        return analysis

    def save(self, path: str, **kwargs) -> None:
        """
        Save the data.

        Args:
            path: output path
            **kwargs: options passed to the pandas writer
        """
        if self.data is None:
            raise ValueError("No data to save")

        if path.endswith('.csv'):
            self.data.to_csv(path, **kwargs)
        elif path.endswith('.json'):
            self.data.to_json(path, **kwargs)
        else:
            raise ValueError(f"Unsupported output format: {path}")

        logger.info(f"Data saved to: {path}")

    def get_data(self) -> Optional[pd.DataFrame]:
        """Return a copy of the processed data."""
        return self.data.copy() if self.data is not None else None
'''

    with open('data_processor/core.py', 'w') as f:
        f.write(core_code)

    # Data loader subpackage
    loaders_dir = os.path.join('data_processor', 'loaders')
    os.makedirs(loaders_dir, exist_ok=True)

    with open(os.path.join(loaders_dir, '__init__.py'), 'w') as f:
        f.write('''"""
Data loader module.

Provides loaders for several data formats.
"""
from .csv_loader import CSVLoader
from .json_loader import JSONLoader

__all__ = ["CSVLoader", "JSONLoader"]
''')

    with open(os.path.join(loaders_dir, 'base_loader.py'), 'w') as f:
        f.write('''from abc import ABC, abstractmethod

import pandas as pd


class BaseLoader(ABC):
    """Base class for data loaders."""

    @abstractmethod
    def load(self, path: str, **kwargs) -> pd.DataFrame:
        """Load data."""

    def validate(self, data: pd.DataFrame) -> bool:
        """Validate the data (it must not be empty)."""
        return not data.empty
''')

    with open(os.path.join(loaders_dir, 'csv_loader.py'), 'w') as f:
        f.write('''import logging

import pandas as pd

from .base_loader import BaseLoader

logger = logging.getLogger(__name__)


class CSVLoader(BaseLoader):
    """Loader for CSV files."""

    def load(self, path: str, **kwargs) -> pd.DataFrame:
        """
        Load a CSV file.

        Args:
            path: file path
            **kwargs: arguments passed to pandas.read_csv

        Returns:
            pd.DataFrame: the loaded data
        """
        default_kwargs = {
            'encoding': 'utf-8',
            'na_values': ['', 'NULL', 'null', 'NaN', 'nan'],
        }
        default_kwargs.update(kwargs)

        try:
            data = pd.read_csv(path, **default_kwargs)
            logger.info(f"Successfully loaded CSV from {path}")
            if self.validate(data):
                return data
            raise ValueError("Loaded data is empty or invalid")
        except Exception as e:
            logger.error(f"Failed to load CSV from {path}: {e}")
            raise
''')

    with open(os.path.join(loaders_dir, 'json_loader.py'), 'w') as f:
        f.write('''import json
import logging

import pandas as pd

from .base_loader import BaseLoader

logger = logging.getLogger(__name__)


class JSONLoader(BaseLoader):
    """Loader for JSON files."""

    def load(self, path: str, **kwargs) -> pd.DataFrame:
        """
        Load a JSON file.

        Args:
            path: file path
            **kwargs: arguments passed to pandas.read_json

        Returns:
            pd.DataFrame: the loaded data
        """
        default_kwargs = {
            'orient': 'records',
            'encoding': 'utf-8',
        }
        default_kwargs.update(kwargs)

        try:
            # Try pandas.read_json first
            try:
                data = pd.read_json(path, **default_kwargs)
            except ValueError:
                # If that fails, load the file manually and flatten nested records
                with open(path, 'r', encoding='utf-8') as f:
                    json_data = json.load(f)
                data = pd.json_normalize(json_data)

            logger.info(f"Successfully loaded JSON from {path}")
            if self.validate(data):
                return data
            raise ValueError("Loaded data is empty or invalid")
        except Exception as e:
            logger.error(f"Failed to load JSON from {path}: {e}")
            raise
''')

    # Transformer subpackage
    transformers_dir = os.path.join('data_processor', 'transformers')
    os.makedirs(transformers_dir, exist_ok=True)

    with open(os.path.join(transformers_dir, '__init__.py'), 'w') as f:
        f.write('''"""
Data transformer module.

Provides data cleaning and transformation.
"""
from .cleaner import Cleaner
from .transformer import Transformer

__all__ = ["Cleaner", "Transformer"]
''')

    with open(os.path.join(transformers_dir, 'cleaner.py'), 'w') as f:
        f.write('''import logging

import pandas as pd

logger = logging.getLogger(__name__)


class Cleaner:
    """Data cleaner."""

    def __init__(self, **kwargs):
        self.config = kwargs

    def transform(self, data: pd.DataFrame) -> pd.DataFrame:
        """
        Clean the data.

        Args:
            data: input data

        Returns:
            pd.DataFrame: the cleaned data
        """
        if data is None:
            raise ValueError("No data to clean")

        # Work on a copy so the original data is not modified
        cleaned_data = data.copy()
        cleaned_data = self._handle_missing_values(cleaned_data)
        cleaned_data = self._handle_duplicates(cleaned_data)
        cleaned_data = self._convert_dtypes(cleaned_data)

        logger.info("Data cleaning completed")
        return cleaned_data

    def _handle_missing_values(self, data: pd.DataFrame) -> pd.DataFrame:
        """Handle missing values."""
        strategy = self.config.get('missing_strategy', 'drop')
        if strategy == 'drop':
            # Drop rows that contain missing values
            data = data.dropna()
        elif strategy == 'fill':
            # Fill missing values
            fill_values = self.config.get('fill_values', {})
            data = data.fillna(fill_values)
        elif strategy == 'interpolate':
            # Interpolate missing values
            data = data.interpolate()
        return data

    def _handle_duplicates(self, data: pd.DataFrame) -> pd.DataFrame:
        """Handle duplicate rows."""
        keep_duplicates = self.config.get('keep_duplicates', False)
        if not keep_duplicates:
            subset = self.config.get('duplicate_subset', None)
            data = data.drop_duplicates(subset=subset, keep='first')
        return data

    def _convert_dtypes(self, data: pd.DataFrame) -> pd.DataFrame:
        """Convert column data types."""
        dtype_mapping = self.config.get('dtype_mapping', {})
        for col, dtype in dtype_mapping.items():
            if col in data.columns:
                try:
                    data[col] = data[col].astype(dtype)
                except Exception as e:
                    logger.warning(f"Failed to convert {col} to {dtype}: {e}")
        return data
''')

    # Note: the closing parenthesis of os.path.join() comes before the 'w' mode
    with open(os.path.join(transformers_dir, 'transformer.py'), 'w') as f:
        f.write('''import logging
from typing import Any, Dict, List

import pandas as pd

logger = logging.getLogger(__name__)


class Transformer:
    """Data transformer."""

    def transform(self, data: pd.DataFrame, operations: List[Dict[str, Any]]) -> pd.DataFrame:
        """
        Apply a sequence of transformation operations.

        Args:
            data: input data
            operations: list of transformation operations

        Returns:
            pd.DataFrame: the transformed data
        """
        if data is None:
            raise ValueError("No data to transform")

        transformed_data = data.copy()
        for i, operation in enumerate(operations):
            try:
                op_type = operation.get('type')
                params = operation.get('params', {})

                if op_type == 'rename_columns':
                    transformed_data = self._rename_columns(transformed_data, params)
                elif op_type == 'filter_rows':
                    transformed_data = self._filter_rows(transformed_data, params)
                elif op_type == 'create_column':
                    transformed_data = self._create_column(transformed_data, params)
                elif op_type == 'drop_columns':
                    transformed_data = self._drop_columns(transformed_data, params)
                elif op_type == 'aggregate':
                    transformed_data = self._aggregate(transformed_data, params)
                else:
                    logger.warning(f"Unknown operation type: {op_type}")

                logger.info(f"Applied transformation {i+1}: {op_type}")
            except Exception as e:
                logger.error(f"Failed to apply transformation {i+1}: {e}")
                raise

        return transformed_data

    def _rename_columns(self, data: pd.DataFrame, params: Dict[str, Any]) -> pd.DataFrame:
        """Rename columns."""
        mapping = params.get('mapping', {})
        return data.rename(columns=mapping)

    def _filter_rows(self, data: pd.DataFrame, params: Dict[str, Any]) -> pd.DataFrame:
        """Filter rows with a callable that returns a boolean mask."""
        condition = params.get('condition')
        if condition and callable(condition):
            return data[condition(data)]
        return data

    def _create_column(self, data: pd.DataFrame, params: Dict[str, Any]) -> pd.DataFrame:
        """Create a new column from a callable."""
        column_name = params.get('column_name')
        expression = params.get('expression')
        if column_name and expression and callable(expression):
            data[column_name] = expression(data)
        return data

    def _drop_columns(self, data: pd.DataFrame, params: Dict[str, Any]) -> pd.DataFrame:
        """Drop columns."""
        columns = params.get('columns', [])
        return data.drop(columns=columns, errors='ignore')

    def _aggregate(self, data: pd.DataFrame, params: Dict[str, Any]) -> pd.DataFrame:
        """Aggregate data."""
        group_by = params.get('group_by', [])
        aggregations = params.get('aggregations', {})
        if group_by and aggregations:
            return data.groupby(group_by).agg(aggregations).reset_index()
        return data
''')

    # CLI module
    cli_code = '''import json
import logging

import click

from data_processor.core import DataProcessor

# Configure logging
logging.basicConfig(level=logging.INFO, format='%(asctime)s - %(name)s - %(levelname)s - %(message)s')


@click.group()
def cli():
    """Data processor command-line interface"""


@cli.command()
@click.argument('input_file')
@click.option('--output', '-o', help='Output file path')
@click.option('--format', '-f', type=click.Choice(['csv', 'json']), default='csv', help='Output format')
def process(input_file, output, format):
    """Process a data file"""
    try:
        processor = DataProcessor()

        # Load the data
        processor.load_data(input_file)

        # Basic cleaning
        processor.clean(missing_strategy='fill', fill_values={})

        # Analyze the data
        analysis = processor.analyze()
        click.echo("Analysis results:")
        # default=str serializes dtype and numpy values that json cannot handle
        click.echo(json.dumps(analysis, indent=2, ensure_ascii=False, default=str))

        # Save the result
        if output:
            processor.save(output)
            click.echo(f"Result saved to: {output}")
        else:
            # Without an output file, show the first few rows
            data = processor.get_data()
            click.echo("Processed data (first 5 rows):")
            click.echo(data.head().to_string())
    except Exception as e:
        click.echo(f"Processing failed: {e}", err=True)


@cli.command()
@click.argument('input_file')
def analyze(input_file):
    """Analyze a data file"""
    try:
        processor = DataProcessor()
        processor.load_data(input_file)
        analysis = processor.analyze()

        click.echo("Analysis report:")
        click.echo(f"Shape: {analysis['shape']}")
        click.echo(f"Columns: {', '.join(analysis['columns'])}")
        click.echo(f"Memory usage: {analysis['memory_usage']} bytes")

        if 'numeric_stats' in analysis:
            click.echo("\\nNumeric column statistics:")
            for col, stats in analysis['numeric_stats'].items():
                click.echo(f"  {col}: count={stats['count']}, mean={stats['mean']:.2f}")
    except Exception as e:
        click.echo(f"Analysis failed: {e}", err=True)


def main():
    """Entry point"""
    cli()


if __name__ == '__main__':
    main()
'''

    with open('data_processor/cli.py', 'w') as f:
        f.write(cli_code)

    # Test file
    test_code = '''import pandas as pd
import pytest

from data_processor.core import DataProcessor
from data_processor.loaders import CSVLoader


@pytest.fixture
def sample_data():
    """Create sample data"""
    return pd.DataFrame({
        'name': ['Alice', 'Bob', 'Charlie', None],
        'age': [25, 30, 35, 40],
        'score': [85.5, 92.0, 78.5, 88.0]
    })


@pytest.fixture
def sample_csv(tmp_path):
    """Create a sample CSV file"""
    data = pd.DataFrame({
        'name': ['Alice', 'Bob', 'Charlie'],
        'age': [25, 30, 35],
        'score': [85.5, 92.0, 78.5]
    })
    file_path = tmp_path / "test.csv"
    data.to_csv(file_path, index=False)
    return str(file_path)


def test_data_processor_initialization():
    """Test processor initialization"""
    processor = DataProcessor()
    assert processor.data is None
    assert processor.transformations == []


def test_load_data_from_dataframe(sample_data):
    """Test loading data from a DataFrame"""
    processor = DataProcessor()
    processor.load_data(sample_data)
    assert processor.data is not None
    assert processor.data.shape == sample_data.shape


def test_csv_loader(sample_csv):
    """Test the CSV loader"""
    loader = CSVLoader()
    data = loader.load(sample_csv)
    assert data is not None
    assert len(data) == 3
    assert 'name' in data.columns


def test_data_cleaning(sample_data):
    """Test data cleaning"""
    processor = DataProcessor()
    processor.load_data(sample_data)
    processor.clean(missing_strategy='drop')
    assert processor.data is not None
    # No missing values should remain after cleaning
    assert not processor.data.isnull().any().any()


def test_data_analysis(sample_data):
    """Test data analysis"""
    processor = DataProcessor()
    processor.load_data(sample_data)
    analysis = processor.analyze()
    assert 'shape' in analysis
    assert 'columns' in analysis
    assert analysis['shape'] == sample_data.shape
'''

    with open('tests/test_core.py', 'w') as f:
        f.write(test_code)

    # Rewrite README.md
    readme_content = '''# Data Processor

A powerful Python data-processing package that provides data loading,
cleaning, transformation and analysis.

## Features

- Multi-format data loading (CSV, JSON)
- Smart data cleaning
- Flexible data transformation
- Comprehensive data analysis
- Command-line interface

## Installation

Install with Poetry:

```bash
poetry install
```

## Usage

### Python API

```python
from data_processor.core import DataProcessor

# Create a processor instance
processor = DataProcessor()

# Load and process data
result = (processor
          .load_data('data.csv')
          .clean(missing_strategy='fill')
          .transform([
              {'type': 'rename_columns', 'params': {'mapping': {'old_name': 'new_name'}}}
          ])
          .analyze())

print(result)
```

### Command-line interface

```bash
# Process a data file
poetry run process-data process data.csv --output result.csv

# Analyze a data file
poetry run process-data analyze data.csv
```

## Development

Run the tests:

```bash
poetry run pytest
```

Format the code:

```bash
poetry run black .
```

Type-check:

```bash
poetry run mypy .
```

## License

MIT License
'''

    with open('README.md', 'w') as f:
        f.write(readme_content)

    print("Poetry project setup complete!")
    print("\nNext steps:")
    print("1. Run: poetry install")
    print("2. Run: poetry shell (Poetry 2.x needs the poetry-plugin-shell plugin)")
    print("3. Run the tests: poetry run pytest")
    print("4. Try the CLI: poetry run process-data --help")


if __name__ == "__main__":
    if len(sys.argv) > 1:
        setup_poetry_project(sys.argv[1])
    else:
        setup_poetry_project()
````

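4.3 Poetry's Advanced Features

Package publishing and version management

```bash
# Build the package (sdist and wheel)
poetry build

# Publish to PyPI
poetry publish

# Version management
poetry version patch   # 0.1.0 -> 0.1.1
poetry version minor   # 0.1.1 -> 0.2.0
poetry version major   # 0.2.0 -> 1.0.0

# Show dependencies that have newer versions
poetry show --outdated

# Update dependencies
poetry update
```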
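The three bump rules in the comments follow semantic versioning. A bump resets every component to the right of the one that changes. A minimal sketch of just these three rules follows. The function name `bump_version` is hypothetical, and Poetry's own `version` command also accepts pre-release rules (such as `prepatch` and `prerelease`) that this sketch does not model.

```python
def bump_version(version, rule):
    """Bump a plain MAJOR.MINOR.PATCH string by 'major', 'minor' or 'patch'."""
    major, minor, patch = (int(p) for p in version.split("."))
    if rule == "major":
        return f"{major + 1}.0.0"
    if rule == "minor":
        return f"{major}.{minor + 1}.0"
    if rule == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unsupported rule: {rule}")


# Reproduces the sequence shown in the comments above.
v = "0.1.0"
for rule in ("patch", "minor", "major"):
    new = bump_version(v, rule)
    print(f"{rule}: {v} -> {new}")
    v = new
```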

Dependency groups and optional dependencies

```toml
# Dependency groups in pyproject.toml
[tool.poetry.group.test.dependencies]
pytest = "^7.0.0"
pytest-cov = "^4.0.0"

[tool.poetry.group.docs.dependencies]
sphinx = "^5.0.0"
sphinx-rtd-theme = "^1.0.0"

# Optional dependencies
[tool.poetry.dependencies]
mysql = { version = "^0.10.0", optional = true }
postgresql = { version = "^0.10.0", optional = true }

[tool.poetry.extras]
mysql = ["mysql"]
postgresql = ["postgresql"]
```
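On the command line, groups are selected with `poetry install --with docs`, `--without test`, or `--only main`. Extras are selected with `poetry install --extras mysql` (short form `-E`), or `pip install "my-project[mysql]"` for consumers of the published package. An extra is simply a named list of optional dependencies. The sketch below shows how the `[tool.poetry.extras]` table maps requested extras to packages. The helper `packages_for_extras` is hypothetical.

```python
def packages_for_extras(extras, requested):
    """Return the optional packages pulled in by the requested extras."""
    unknown = set(requested) - set(extras)
    if unknown:
        raise KeyError(f"unknown extras: {sorted(unknown)}")
    selected = set()
    for extra in requested:
        selected.update(extras[extra])  # each extra lists optional dependencies
    return sorted(selected)


# Mirrors the [tool.poetry.extras] table above.
extras_table = {"mysql": ["mysql"], "postgresql": ["postgresql"]}
print(packages_for_extras(extras_table, ["postgresql"]))
```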

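Environment configuration

```bash
# Set the directory where virtual environments are created
poetry config virtualenvs.path /path/to/venvs

# Disable virtual environment creation
poetry config virtualenvs.create false

# Show the current configuration
poetry config --list
```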

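5. Detailed Comparison

5.1 Feature Comparison

```python
#!/usr/bin/env python3
"""
Poetry vs Pipenv feature comparison

This script prints a detailed feature comparison table and analysis.
"""


def generate_comparison_table():
    """Print the feature comparison table."""
    comparison_data = [
        {
            'feature': 'Virtualenv management',
            'poetry': '✅ Automatic, configurable path',
            'pipenv': '✅ Automatic, configurable path',
            'description': 'Both automate virtual environment management'
        },
        {
            'feature': 'Dependency resolution',
            'poetry': '✅ Own PubGrub-based resolver',
            'pipenv': '✅ Resolver based on pip-tools',
            'description': "Poetry's resolver is often faster and more reliable"
        },
        {
            'feature': 'Lock file',
            'poetry': '✅ poetry.lock (TOML)',
            'pipenv': '✅ Pipfile.lock (JSON)',
            'description': 'Both provide deterministic builds'
        },
        {
            'feature': 'Package publishing',
            'poetry': '✅ Built in, full publishing workflow',
            'pipenv': '❌ Needs extra tools (e.g. build + twine)',
            'description': 'Poetry suits package authors better'
        },
        {
            'feature': 'Config file',
            'poetry': '✅ pyproject.toml (PEP 518; PEP 621 [project] since 2.0)',
            'pipenv': '✅ Pipfile (TOML)',
            'description': 'Poetry uses the standard pyproject.toml'
        },
        {
            'feature': 'Dependency groups',
            'poetry': '✅ Any number of groups',
            'pipenv': '⚠️ Mainly dev-packages (newer releases add categories)',
            'description': "Poetry's groups are more flexible"
        },
        {
            'feature': 'Scripts',
            'poetry': '✅ Package entry points via [tool.poetry.scripts]',
            'pipenv': '⚠️ [scripts] shortcuts for pipenv run only',
            'description': 'Only Poetry installs scripts as package commands'
        },
        {
            'feature': 'Performance',
            'poetry': '✅ Usually faster',
            'pipenv': '⚠️ Sometimes slower',
            'description': "Poetry's dependency resolution is better optimized"
        },
        {
            'feature': 'Ecosystem',
            'poetry': '✅ Fast-growing, modern toolchain',
            'pipenv': '✅ Mature; once recommended by the Packaging User Guide',
            'description': 'Both have active communities'
        },
        {
            'feature': 'Learning curve',
            'poetry': '⚠️ Somewhat steeper, more features',
            'pipenv': '✅ Relatively simple',
            'description': 'Pipenv is friendlier to beginners'
        },
    ]

    print("Poetry vs Pipenv feature comparison")
    print("=" * 80)
    print(f"{'Feature':<22} {'Poetry':<30} {'Pipenv':<30} {'Notes'}")
    print("-" * 80)
    for item in comparison_data:
        print(f"{item['feature']:<22} {item['poetry']:<30} {item['pipenv']:<30} {item['description']}")

    return comparison_data


def performance_analysis():
    """Performance comparison."""
    print("\n\nPerformance comparison")
    print("=" * 50)

    performance_data = [
        {
            'operation': 'Dependency resolution',
            'poetry': 'Fast, PubGrub-based solver',
            'pipenv': 'Slower, pip-tools-based',
            'impact': 'Most noticeable on large projects'
        },
        {
            'operation': 'Install speed',
            'poetry': 'Parallel installation',
            'pipenv': 'pip-based serial installs',
            'impact': 'Poetry often 30-50% faster (rough estimate; varies by project)'
        },
        {
            'operation': 'Lock generation',
            'poetry': 'Fast, incremental updates',
            'pipenv': 'Slower, full re-resolution',
            'impact': 'Noticeable with frequent updates'
        },
        {
            'operation': 'Memory usage',
            'poetry': 'Moderate',
            'pipenv': 'Higher',
            'impact': 'Pipenv uses more memory on large projects'
        },
    ]

    for item in performance_data:
        print(f"{item['operation']:<22} | {item['poetry']:<28} | {item['pipenv']:<28} | {item['impact']}")


def use_case_recommendations():
    """Recommendations by use case."""
    print("\n\nRecommendations by use case")
    print("=" * 50)

    recommendations = [
        {'scenario': 'Open-source package', 'recommendation': 'Poetry',
         'reason': 'Built-in publishing and full package management'},
        {'scenario': 'Web application', 'recommendation': 'Either; follow team preference',
         'reason': 'Both handle application dependencies well'},
        {'scenario': 'Data science project', 'recommendation': 'Poetry',
         'reason': 'Better performance with complex dependencies'},
        {'scenario': 'Beginner project', 'recommendation': 'Pipenv',
         'reason': 'Gentler learning curve'},
        {'scenario': 'Large enterprise project', 'recommendation': 'Poetry',
         'reason': 'Better performance and scalability'},
        {'scenario': 'Existing tool integration', 'recommendation': 'Choose by ecosystem',
         'reason': 'Check support in your CI/CD and workflows'},
    ]

    for item in recommendations:
        print(f"{item['scenario']:<26} | {item['recommendation']:<32} | {item['reason']}")


def migration_guidance():
    """Migration guide."""
    print("\n\nMigration guide")
    print("=" * 50)

    print("From requirements.txt to Pipenv:")
    print("  1. pipenv install -r requirements.txt  (imports the packages into a Pipfile)")
    print("  2. Move development-only packages to [dev-packages] (e.g. pipenv install --dev pytest)")
    print("  3. pipenv lock  to generate the lock file")
    print("")

    print("From Pipenv to Poetry:")
    print("  1. poetry init  to create pyproject.toml")
    print("  2. Migrate the dependencies from the Pipfile into pyproject.toml by hand")
    print("  3. poetry install  to install the dependencies")
    print("  4. Update CI/CD and deployment scripts")
    print("")

    print("From requirements.txt directly to Poetry:")
    print("  1. poetry init --no-interaction")
    print("  2. poetry add $(cat requirements.txt)  (only for simple files without comments or pip options)")
    print("  3. Add development dependencies: poetry add --group dev pytest black")


if __name__ == "__main__":
    generate_comparison_table()
    performance_analysis()
    use_case_recommendations()
    migration_guidance()
```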

5.2 性能基准测试

为了客观比较两者的性能,我们可以创建一个基准测试脚本:

#!/usr/bin/env python3

"""

Poetry vs Pipenv 性能基准测试

这个脚本对两个工具进行实际的性能测试

注意:需要在干净的环境中运行

"""

import time

import subprocess

import os

import tempfile

import shutil

import statistics

def run_command(cmd, cwd=None):

"""运行命令并返回执行时间"""

start_time = time.time()

try:

result = subprocess.run(

cmd,

shell=True,

cwd=cwd,

capture_output=True,

text=True,

timeout=300 # 5分钟超时

)

elapsed = time.time() - start_time

return elapsed, result.returncode == 0, result.stderr

except subprocess.TimeoutExpired:

return 300, False, "Command timed out"

def create_test_project(dependencies):

"""创建测试项目"""

project_dir = tempfile.mkdtemp()

# 创建基本项目结构

os.makedirs(os.path.join(project_dir, 'src', 'test_package'), exist_ok=True)

# 创建__init__.py

with open(os.path.join(project_dir, 'src', 'test_package', '__init__.py'), 'w') as f:

f.write('__version__ = "0.1.0"')

# 创建简单的Python文件

with open(os.path.join(project_dir, 'src', 'test_package', 'main.py'), 'w') as f:

f.write('def hello():\n return "Hello, World!"')

return project_dir

def test_poetry_performance(dependencies, iterations=3):

"""测试Poetry性能"""

print("测试Poetry性能...")

times = []

for i in range(iterations):

project_dir = create_test_project(dependencies)

try:

# 初始化Poetry项目

init_time, success, error = run_command('poetry init --no-interaction', project_dir)

if not success:

print(f"Poetry初始化失败: {error}")

continue

# 添加依赖

dep_times = []

for dep in dependencies:

time_taken, success, error = run_command(f'poetry add {dep}', project_dir)

if success:

dep_times.append(time_taken)

else:

print(f"添加依赖 {dep} 失败: {error}")

# 锁定时间

lock_time, success, error = run_command('poetry lock', project_dir)

total_time = init_time + sum(dep_times) + lock_time

times.append(total_time)

print(f"第 {i+1} 次迭代: {total_time:.2f}秒")

finally:

shutil.rmtree(project_dir)

if times:

avg_time = statistics.mean(times)

std_dev = statistics.stdev(times) if len(times) > 1 else 0

print(f"Poetry平均时间: {avg_time:.2f}秒 ({std_dev:.2f}秒)")

return avg_time

return None

def test_pipenv_performance(dependencies, iterations=3):

"""测试Pipenv性能"""

print("测试Pipenv性能...")

times = []

for i in range(iterations):

project_dir = create_test_project(dependencies)

try:

# 初始化Pipenv项目

init_time, success, error = run_command('pipenv install', project_dir)

if not success:

print(f"Pipenv初始化失败: {error}")

continue

# 添加依赖

dep_times = []

for dep in dependencies:

time_taken, success, error = run_command(f'pipenv install {dep}', project_dir)

if success:

dep_times.append(time_taken)

else:

print(f"添加依赖 {dep} 失败: {error}")

# 锁定时间

lock_time, success, error = run_command('pipenv lock', project_dir)

total_time = init_time + sum(dep_times) + lock_time

times.append(total_time)

print(f"第 {i+1} 次迭代: {total_time:.2f}秒")

finally:

shutil.rmtree(project_dir)

if times:

avg_time = statistics.mean(times)

std_dev = statistics.stdev(times) if len(times) > 1 else 0

print(f"Pipenv平均时间: {avg_time:.2f}秒 ({std_dev:.2f}秒)")

return avg_time

return None

def main():
    """Main benchmark entry point."""
    # Test several dependency combinations
    test_scenarios = [
        {
            'name': 'Simple project (5 dependencies)',
            'dependencies': ['requests', 'click', 'python-dotenv', 'colorama', 'tqdm']
        },
        {
            'name': 'Data science project (8 dependencies)',
            'dependencies': ['numpy', 'pandas', 'matplotlib', 'scikit-learn', 'jupyter', 'seaborn', 'plotly', 'scipy']
        },
        {
            'name': 'Web project (6 dependencies)',
            'dependencies': ['flask', 'django', 'fastapi', 'sqlalchemy', 'celery', 'redis']
        }
    ]
    results = {}
    for scenario in test_scenarios:
        print(f"\n{'='*50}")
        print(f"Scenario: {scenario['name']}")
        print(f"Dependencies: {', '.join(scenario['dependencies'])}")
        print('='*50)
        poetry_time = test_poetry_performance(scenario['dependencies'], iterations=2)
        pipenv_time = test_pipenv_performance(scenario['dependencies'], iterations=2)
        if poetry_time and pipenv_time:
            # Ratio > 1 means Poetry was faster; < 1 means Pipenv was faster
            speedup = pipenv_time / poetry_time
            results[scenario['name']] = {
                'poetry': poetry_time,
                'pipenv': pipenv_time,
                'speedup': speedup
            }
    # Print a summary of the results
    print(f"\n{'='*60}")
    print("Benchmark summary")
    print('='*60)
    for scenario, result in results.items():
        print(f"\n{scenario}:")
        print(f"  Poetry: {result['poetry']:.2f}s")
        print(f"  Pipenv: {result['pipenv']:.2f}s")
        print(f"  Pipenv/Poetry time ratio: {result['speedup']:.2f} (> 1 means Poetry was faster)")

if __name__ == "__main__":
    # Check that both tools are installed and on PATH
    for tool in ['poetry', 'pipenv']:
        if shutil.which(tool) is None:
            print(f"Error: {tool} is not installed")
            raise SystemExit(1)
    main()
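The summary above divides Pipenv's average by Poetry's, which only reads naturally as a "speedup" when Poetry actually wins. Timings like these also vary with network speed and package caches, so reporting the spread alongside the mean helps. Below is a minimal, direction-neutral comparison sketch; `summarize` and `compare_tools` are hypothetical helper names, and the numbers in any example call are illustrative inputs, not measurements.

```python
import statistics


def summarize(samples):
    """Return (mean, sample standard deviation) of timing samples in seconds."""
    if not samples:
        raise ValueError("no successful runs to summarize")
    mean = statistics.mean(samples)
    # stdev needs at least two samples; report 0.0 for a single run
    stdev = statistics.stdev(samples) if len(samples) > 1 else 0.0
    return mean, stdev


def compare_tools(poetry_samples, pipenv_samples):
    """Return (faster_tool, factor), where factor >= 1 is slower_mean / faster_mean."""
    poetry_mean, _ = summarize(poetry_samples)
    pipenv_mean, _ = summarize(pipenv_samples)
    if poetry_mean <= pipenv_mean:
        return "Poetry", pipenv_mean / poetry_mean
    return "Pipenv", poetry_mean / pipenv_mean
```

To use it from the benchmark, the per-iteration `times` lists built inside each test function would need to be returned instead of only their averages.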

6. Real-World Project Migration Example

Migrating from Pipenv to Poetry

#!/usr/bin/env python3
"""
Complete example of migrating from Pipenv to Poetry.
This script shows how to convert an existing Pipenv project to Poetry.
Requires the third-party `toml` package (pip install toml).
"""
import argparse
import json
import shutil
import subprocess
import sys
from pathlib import Path

import toml


class PipenvToPoetryMigrator:
    """Pipenv-to-Poetry migrator."""

    def __init__(self, project_path):
        self.project_path = Path(project_path)
        self.pipfile_path = self.project_path / 'Pipfile'
        self.pipfile_lock_path = self.project_path / 'Pipfile.lock'

    def validate_environment(self):
        """Check prerequisites: a Pipfile exists and Poetry is on PATH."""
        if not self.pipfile_path.exists():
            raise FileNotFoundError("Pipfile not found")
        # Check whether Poetry is installed
        try:
            subprocess.run(['poetry', '--version'], check=True, capture_output=True)
        except (subprocess.CalledProcessError, FileNotFoundError):
            raise RuntimeError("Poetry is not installed or not in PATH")

    def parse_pipfile(self):
        """Parse the Pipfile."""
        with open(self.pipfile_path, 'r', encoding='utf-8') as f:
            pipfile_data = toml.load(f)
        packages = pipfile_data.get('packages', {})
        dev_packages = pipfile_data.get('dev-packages', {})
        return packages, dev_packages

    def parse_pipfile_lock(self):
        """Parse Pipfile.lock (exact pinned versions)."""
        if not self.pipfile_lock_path.exists():
            return {}, {}
        with open(self.pipfile_lock_path, 'r', encoding='utf-8') as f:
            lock_data = json.load(f)
        default = lock_data.get('default', {})
        develop = lock_data.get('develop', {})
        return default, develop

    def convert_dependency_format(self, dependencies):
        """Convert Pipfile dependency specs to Poetry's format."""
        converted = {}
        for package, spec in dependencies.items():
            if isinstance(spec, str):
                # "*" means "any version" in both Pipfile and Poetry
                converted[package] = self._normalize_version_spec(spec)
            elif isinstance(spec, dict):
                # Table-style spec, e.g. {version = "...", markers = "..."}.
                # Note: git/path/editable keys are not handled and fall back to "*".
                version = spec.get('version', '')
                markers = spec.get('markers', '')
                dep_spec = self._normalize_version_spec(version)
                if markers:
                    # Poetry expects environment markers as a separate key,
                    # not appended to the version string
                    converted[package] = {'version': dep_spec, 'markers': markers}
                else:
                    converted[package] = dep_spec
        return converted

    def _normalize_version_spec(self, spec):
        """Normalize a version specifier."""
        if not spec or spec.strip() == '*':
            return '*'
        # Poetry accepts PEP 440 specifiers (==, >=, ~=, ...) as-is.
        # Rewriting '>=X' as '^X' would be wrong: the caret adds an upper
        # bound (< next major version) that the original constraint lacked.
        return spec.strip()

    def create_pyproject_toml(self, packages, dev_packages, metadata=None):
        """Build the pyproject.toml data structure."""
        # Basic metadata
        metadata = metadata or {}
        project_name = metadata.get('name', self.project_path.name)
        version = metadata.get('version', '0.1.0')
        description = metadata.get('description', '')
        authors = metadata.get('authors', ['Your Name <you@example.com>'])
        pyproject = {
            'tool': {
                'poetry': {
                    'name': project_name,
                    'version': version,
                    'description': description,
                    'authors': authors if isinstance(authors, list) else [authors],
                    # Assumes a package directory named after the project exists;
                    # adjust or remove this for application-only projects
                    'packages': [{'include': project_name.replace('-', '_')}],
                    # Poetry always needs a python constraint; '^3.8' is a placeholder
                    'dependencies': {'python': '^3.8', **packages},
                }
            },
            'build-system': {
                'requires': ['poetry-core>=1.0.0'],
                'build-backend': 'poetry.core.masonry.api'
            }
        }
        # Add development dependencies as a Poetry dependency group
        if dev_packages:
            pyproject['tool']['poetry']['group'] = {
                'dev': {
                    'dependencies': dev_packages
                }
            }
        return pyproject

    def backup_existing_files(self):
        """Back up existing files."""
        backup_dir = self.project_path / 'backup_migration'
        backup_dir.mkdir(exist_ok=True)
        files_to_backup = ['Pipfile', 'Pipfile.lock', 'pyproject.toml']
        for file_name in files_to_backup:
            file_path = self.project_path / file_name
            if file_path.exists():
                shutil.copy2(file_path, backup_dir / file_name)
                print(f"Backed up: {file_name}")

    def migrate(self, metadata=None):
        """Run the migration."""
        print("Starting migration from Pipenv to Poetry...")
        # Validate the environment
        self.validate_environment()
        # Back up files
        self.backup_existing_files()
        # Parse the existing configuration
        packages, dev_packages = self.parse_pipfile()
        # Lock data is parsed for reference only; pinned versions are not copied over
        lock_packages, lock_dev_packages = self.parse_pipfile_lock()
        print(f"Found {len(packages)} production dependencies")
        print(f"Found {len(dev_packages)} development dependencies")
        # Convert the dependency format
        converted_packages = self.convert_dependency_format(packages)
        converted_dev_packages = self.convert_dependency_format(dev_packages)
        # Build pyproject.toml
        pyproject_data = self.create_pyproject_toml(
            converted_packages,
            converted_dev_packages,
            metadata
        )
        # Write the file
        pyproject_path = self.project_path / 'pyproject.toml'
        with open(pyproject_path, 'w', encoding='utf-8') as f:
            toml.dump(pyproject_data, f)
        print("Created pyproject.toml")
        # Install dependencies with Poetry (this also writes poetry.lock if none exists)
        print("Installing dependencies with Poetry...")
        result = subprocess.run(['poetry', 'install'], cwd=self.project_path,
                                capture_output=True, text=True)
        if result.returncode == 0:
            print("✅ Migration completed successfully!")
            print("\nNext steps:")
            print("1. Verify dependencies: poetry run python -c 'import requests'  # example")
            print("2. Run tests: poetry run pytest")
            print("3. Update your CI/CD configuration to use Poetry")
            print("4. Remove the backup files: rm -rf backup_migration/")
        else:
            print("❌ Dependency installation failed:")
            print(result.stderr)
        return result.returncode == 0


def main():
    """Command-line entry point."""
    parser = argparse.ArgumentParser(description='Migrate a project from Pipenv to Poetry')
    parser.add_argument('project_path', help='Path to the project')
    parser.add_argument('--name', help='Project name')
    parser.add_argument('--version', default='0.1.0', help='Project version')
    parser.add_argument('--description', help='Project description')
    parser.add_argument('--author', help='Author, e.g. "Name <email>"')
    args = parser.parse_args()

    metadata = {}
    if args.name:
        metadata['name'] = args.name
    if args.version:
        metadata['version'] = args.version
    if args.description:
        metadata['description'] = args.description
    if args.author:
        metadata['authors'] = [args.author]

    migrator = PipenvToPoetryMigrator(args.project_path)
    try:
        success = migrator.migrate(metadata)
    except Exception as e:
        print(f"Migration failed: {e}")
        sys.exit(1)
    sys.exit(0 if success else 1)


if __name__ == "__main__":
    main()
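The dictionary branch of `convert_dependency_format` only carries over `version` and `markers`. As a minimal sketch, assuming the common Pipfile keys `version`, `markers`, `extras`, `git` and `ref`, a single entry could be mapped to Poetry's inline-table form as shown below. `pipfile_entry_to_poetry` is a hypothetical helper; mapping Pipfile's `ref` to Poetry's `rev` is a choice made here for generality, and keys such as `path` or `editable` are deliberately not handled.

```python
def pipfile_entry_to_poetry(spec):
    """Map one Pipfile dependency value to a Poetry dependency value.

    Handles "*", plain PEP 440 strings, and tables with
    version / markers / extras / git (+ ref) keys.
    """
    if isinstance(spec, str):
        # Poetry accepts "*" and PEP 440 strings unchanged
        return spec.strip() or "*"
    if not isinstance(spec, dict):
        raise TypeError(f"unsupported Pipfile spec: {spec!r}")

    result = {}
    if "git" in spec:
        result["git"] = spec["git"]
        if "ref" in spec:
            # Poetry distinguishes branch/tag/rev; rev accepts any commit-ish
            result["rev"] = spec["ref"]
    else:
        result["version"] = (spec.get("version") or "*").strip()
    if "extras" in spec:
        result["extras"] = list(spec["extras"])
    if "markers" in spec:
        # Markers stay a separate key instead of being glued onto the version
        result["markers"] = spec["markers"]

    # A table holding only a version collapses to the short string form
    if list(result) == ["version"]:
        return result["version"]
    return result
```

For example, `pipfile_entry_to_poetry({"version": ">=1.0", "markers": "sys_platform == 'win32'"})` keeps both keys, whereas a bare `{"version": "==1.0"}` becomes the string `"==1.0"`.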

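7. Best Practices and Recommendations

7.1 Selection Guide

Based on the preceding analysis and tests, the selection guidance can be summarized as follows: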

#!/usr/bin/env python3
"""
Poetry vs Pipenv selection guide
Recommends a suitable tool based on project needs
"""


def get_tool_recommendation(project_type, team_size, requirements):
    """
    Recommend a tool based on project characteristics.

    Args:
        project_type: project type ('package', 'webapp', 'data_science', 'script')
        team_size: team size ('solo', 'small', 'large')
        requirements: list of requirements, e.g. ['performance', 'publishing', 'simplicity', 'ci_cd']
    """
    recommendations = {
        'package': {
            'tool': 'Poetry',
            'reason': 'Package development needs publishing support and complete metadata management',
            'confidence': 95
        },
        'webapp': {
            'tool': 'Either, based on team preference',
            'reason': 'Both suit web applications; Poetry performs better, Pipenv is simpler',
            'confidence': 70
        },
        'data_science': {
            'tool': 'Poetry',
            'reason': 'Data science projects usually have complex dependencies, which Poetry handles better',
            'confidence': 85
        },
        'script': {
            'tool': 'Pipenv',
            'reason': "Simple script projects don't need Poetry's more complex features",
            'confidence': 80
        }
    }
    base_recommendation = recommendations.get(project_type, {
        'tool': 'Poetry',
        'reason': 'Poetry is the default recommendation for its performance and features',
        'confidence': 75
    })
    # Specific requirements override the project-type default (first match wins)
    if 'publishing' in requirements:
        base_recommendation = {
            'tool': 'Poetry',
            'reason': 'Package publishing is a core Poetry feature',
            'confidence': 100
        }
    elif 'simplicity' in requirements and team_size in ['solo', 'small']:
        base_recommendation = {
            'tool': 'Pipenv',
            'reason': "Pipenv's simplicity suits small teams and simple projects",
            'confidence': 80
        }
    elif 'performance' in requirements and team_size == 'large':
        base_recommendation = {
            'tool': 'Poetry',
            'reason': 'Poetry suits large teams and performance-sensitive projects',
            'confidence': 90
        }
    return base_recommendation


def print_recommendation(project_type, team_size, requirements):
    """Print the recommendation."""
    recommendation = get_tool_recommendation(project_type, team_size, requirements)
    print("Tool recommendation")
    print("=" * 50)
    print(f"Project type: {project_type}")
    print(f"Team size: {team_size}")
    print(f"Key requirements: {', '.join(requirements)}")
    print("-" * 50)
    print(f"Recommended tool: {recommendation['tool']}")
    print(f"Reason: {recommendation['reason']}")
    print(f"Confidence: {recommendation['confidence']}%")
    print("=" * 50)


# Example usage
if __name__ == "__main__":
    test_cases = [
        ('package', 'small', ['publishing', 'performance']),
        ('webapp', 'large', ['performance', 'ci_cd']),
        ('data_science', 'solo', ['simplicity']),
        ('script', 'solo', ['simplicity']),
    ]
    for project_type, team_size, requirements in test_cases:
        print_recommendation(project_type, team_size, requirements)
        print()

7.2 General Best Practices

Whichever tool you choose, the following best practices apply:

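#!/usr/bin/env python3
"""
Python dependency management best practices
"""


def print_best_practices():
    """Print dependency management best practices."""
    practices = [
        {
            'category': 'Version control',
            'practices': [
                'Always commit lock files to version control',
                'Use semantic versioning',
                'Install from the lock file in production'
            ]
        },
        {
            'category': 'Dependency management',
            'practices': [
                'Clearly separate production and development dependencies',
                'Update dependencies regularly to pick up security patches',
                'Use dependency groups to organize related dependencies',
                'Avoid over-constraining versions'
            ]
        },
        {
            'category': 'Security',
            'practices': [
                'Run security scans regularly',
                'Use a private repository for internal packages',
                'Verify dependency integrity and provenance',
                'Monitor known-vulnerability databases'
            ]
        },
        {
            'category': 'CI/CD',
            'practices': [
                'Cache dependencies in CI to speed up installs',
                'Test with the same dependencies as production',
                'Automate dependency updates and testing',
                'Use multi-stage builds to optimize Docker images'
            ]
        },
        {
            'category': 'Team collaboration',
            'practices': [
                'Standardize on one dependency management tool across the team',
                'Document the dependency management workflow',
                'Review dependency changes during code review',
                'Establish a dependency update policy'
            ]
        }
    ]
    print("Python dependency management best practices")
    print("=" * 60)
    for category in practices:
        print(f"\n{category['category']}:")
        for practice in category['practices']:
            print(f"  ✅ {practice}")


def dependency_security_checklist():
    """Dependency security checklist."""
    checklist = [
        "Are dependencies regularly updated to the latest secure versions?",
        "Are dependencies scanned for known vulnerabilities with a tool?",
        "Are package integrity and signatures verified?",
        "Are package install sources restricted?",
        "Has the license compatibility of dependencies been reviewed?",
        "Are dependency updates and deprecation notices monitored?",
        "Is there a rollback plan for problematic dependency updates?",
        "Are the security requirements of critical dependencies documented?"
    ]
    print("\nDependency security checklist")
    print("=" * 50)
    for item in checklist:
        print(f"  [ ] {item}")


if __name__ == "__main__":
    print_best_practices()
    dependency_security_checklist()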
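Committing lock files to version control is the easiest of these practices to check automatically. Here is a minimal sketch that flags a manifest tracked without its lock file. It assumes the list of repository-relative paths comes from something like `git ls-files`, that only the repository root matters, and that a root `pyproject.toml` is managed by Poetry (not true of every project). `missing_lock_files` and `LOCK_FILES` are hypothetical names.

```python
from pathlib import Path

# Manifest -> lock file pairs for the two tools discussed in this article.
# Assumption: a root pyproject.toml belongs to a Poetry project.
LOCK_FILES = {
    "Pipfile": "Pipfile.lock",
    "pyproject.toml": "poetry.lock",
}


def missing_lock_files(tracked_files):
    """Return root-level manifests that are tracked without their lock file."""
    # Normalize paths such as "./Pipfile" to "Pipfile"
    tracked = {Path(p).as_posix() for p in tracked_files}
    missing = []
    for manifest, lock in LOCK_FILES.items():
        if manifest in tracked and lock not in tracked:
            missing.append(manifest)
    return missing
```

In a CI job, you could feed it `subprocess.run(['git', 'ls-files'], capture_output=True, text=True).stdout.splitlines()` and fail the build whenever the returned list is non-empty.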

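8. Summary

The detailed comparison in this article shows the respective strengths and best-fit scenarios of Poetry and Pipenv, two modern Python dependency management tools.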

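8.1 Key Conclusions

Poetry is a better fit for:

- Developing and publishing Python packages

- Projects where install and locking speed matter

- Complex dependency management needs

- Scenarios that need full project lifecycle management, from setup through build and publishing

Pipenv is a better fit for:

- Simple application development

- Beginners and small teams

- Projects that need to get started quickly

- Projects already integrated with the Pipenv ecosystem

Shared strengths:

- Both provide deterministic builds through lock files

- Both simplify virtual environment management

- Both improve on the traditional dependency management experience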

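8.2 Future Outlook

Dependency management tools keep evolving along with the Python ecosystem. Poetry, with its more modern design and better performance, is attracting growing attention and adoption, while Pipenv, once recommended in the official Python Packaging User Guide, still runs reliably in many projects.

Whichever tool you choose, what matters is establishing a consistent dependency management process that keeps the project reproducible and maintainable. As pyproject.toml becomes the standard configuration file for Python projects (introduced by PEP 518, with project metadata standardized in PEP 621), Poetry's pyproject.toml-based approach may well become the norm.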
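The practical meaning of "standardized" is easy to check on a parsed pyproject.toml. The sketch below, built around a hypothetical helper, `metadata_source`, reports whether the project metadata lives in the standard PEP 621 `[project]` table or in Poetry's own `[tool.poetry]` table, together with the PEP 517 build backend. It operates on an already-parsed dictionary; on Python 3.11+ one way to get that dictionary is `tomllib.load` on the file opened in binary mode. Recent Poetry releases (2.0 and later) also accept the `[project]` table.

```python
def metadata_source(pyproject):
    """Describe where a parsed pyproject.toml keeps its metadata and build backend."""
    tool_tables = pyproject.get("tool", {})
    if "project" in pyproject:
        source = "[project] (PEP 621)"
    elif "poetry" in tool_tables:
        source = "[tool.poetry]"
    else:
        source = "none"
    # build-backend comes from the [build-system] table (PEP 517/518)
    backend = pyproject.get("build-system", {}).get("build-backend", "unspecified")
    return source, backend
```

A project generated by older Poetry versions typically reports `("[tool.poetry]", "poetry.core.masonry.api")`, while a PEP 621 project reports `[project]` regardless of which backend builds it.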

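8.3 Final Recommendations

For new projects, we recommend considering Poetry first, especially for:

- Packages you plan to open-source or distribute

- Large projects with complex dependency graphs

- CI/CD pipelines where install speed matters

For existing projects, migrating to Poetry is often worthwhile, but weigh the migration cost and the team's learning curve first.

Remember that choosing a tool is only the beginning: a healthy dependency management culture and process are what keep a project sound over the long term. We hope this article offers useful guidance on your Python dependency management journey.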

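That concludes this detailed look at Python's two major package and dependency management tools (Poetry vs Pipenv). For more on Python dependency management, see the other related articles on 编程客栈 (www.devze.com).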
