Tutorial: Connecting to HBase Remotely from IDEA on Windows 10

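Contents

- Connecting to HBase remotely from IDEA on Windows 10

- Stop Hadoop and HBase

- Get the virtual machine's IP address

- Disable the virtual machine's firewall

- Edit Hadoop's core-site.xml

- Edit HBase's hbase-site.xml

- Start Hadoop and HBase

- Connecting from IDEA

- Create a Maven project

- Add the dependency (pom.xml)

- Create a Java file and run it

- Miscellaneous

- HBase shell commands

- Java API

- Summary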

Connecting to HBase remotely from IDEA on Windows 10

Related: Connecting IDEA on Windows 10 to Hadoop (HDFS) in a virtual machine

Stop Hadoop and HBase

Skip this step if both are already stopped.

cd /usr/local/hbase

bin/stop-hbase.sh

cd /usr/local/hadoop

./sbin/stop-dfs.sh

Get the virtual machine's IP address

Run the following in the virtual machine's terminal:

ip a

Disable the virtual machine's firewall

sudo ufw disable

Edit Hadoop's core-site.xml

Replace the IP address below with your own virtual machine's IP.

# The path may differ depending on where Hadoop is installed

cd /usr/local/hadoop/etc/hadoop

vim core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/usr/local/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://192.168.111.135:9000</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

</configuration>

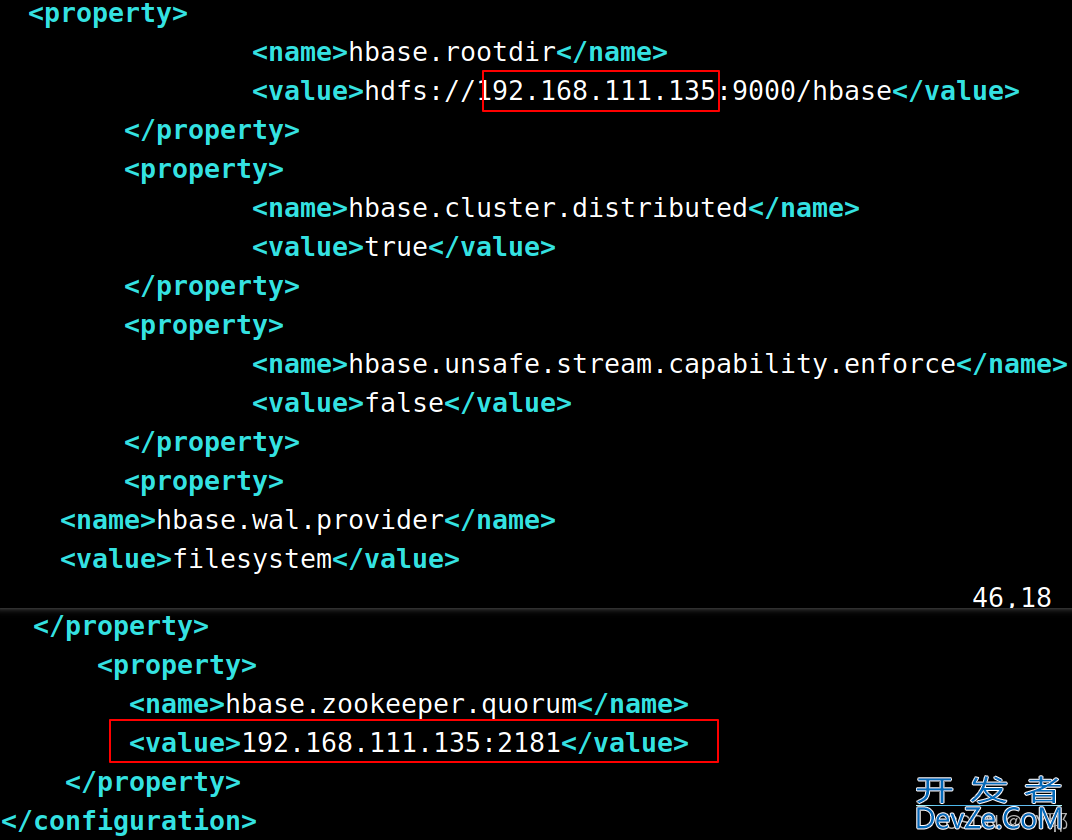

Edit HBase's hbase-site.xml

Replace the IP address below with your own virtual machine's IP.

# The path may differ depending on where HBase is installed

cd /usr/local/hbase

vim /usr/local/hbase/conf/hbase-site.xml

<configuration>

<property>

<name>hbase.rootdir</name>

<value>hdfs://192.168.111.135:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.unsafe.stream.capability.enforce</name>

<value>false</value>

</property>

<property>

<name>hbase.wal.provider</name>

<value>filesystem</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>192.168.111.135:2181</value>

</property>

<property>

<name>hbase.master.ipc.address</name>

<value>0.0.0.0</value>

</property>

<property>

<name>hbase.regionserver.ipc.address</name>

<value>0.0.0.0</value>

</property>

</configuration>

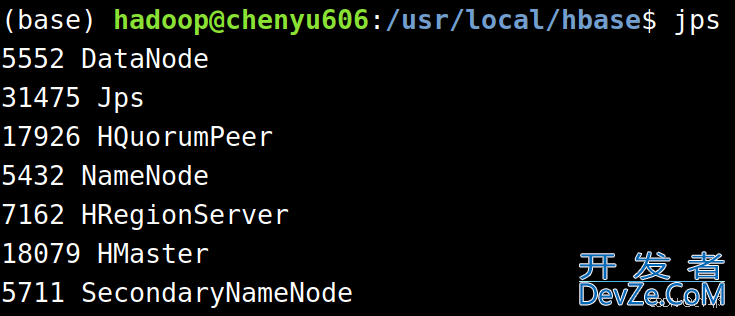

Start Hadoop and HBase

cd /usr/local/hadoop

./sbin/start-dfs.sh

cd /usr/local/hbase

bin/start-hbase.sh

jps
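Before switching to IDEA, it can help to confirm from the Windows side that the VM's ports are actually reachable once the firewall is off and the services are running. The following is a minimal sketch using only the JDK; the class name `PortCheck` and the 2-second timeout are illustrative assumptions, and the ports are simply the ones used in the configuration files above (9000 for `fs.defaultFS`, 2181 for ZooKeeper).

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or host not resolvable: treat all as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: replace the default with the IP printed by `ip a` in the VM.
        String vmIp = args.length > 0 ? args[0] : "192.168.111.135";
        // 9000 = fs.defaultFS port, 2181 = ZooKeeper client port (from the configs above).
        int[] ports = {9000, 2181};
        for (int port : ports) {
            System.out.println(vmIp + ":" + port + " reachable = " + isReachable(vmIp, port, 2000));
        }
    }
}
```

If a port reports as unreachable, the firewall setting, the IP in the XML files, and the `jps` output in the VM are the first things worth rechecking.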

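Connecting from IDEA

Create a Maven project

IDEA ships with a bundled Maven. If you prefer to install Maven yourself, see "Installing Maven".

Create a new project, choose Maven, and select the first archetype in the list, maven-archetype-archetype.

Add the dependency (pom.xml)

Remember to change the version to match your own HBase installation; mine is 2.5.4.

After editing, reload the Maven project.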

<dependencies>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>2.5.4</version>

</dependency>

</dependencies>

Create a Java file and run it

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import java.io.IOException;

public class Test01 {

public static Configuration configuration;

public static Connection connection;

public static Admin admin;

public static void init(){

System.setProperty("HADOOP_USER_NAME","hadoop");

configuration = HBaseConfiguration.create();

// Change this IP to your own VM's IP

configuration.set("hbase.zookeeper.quorum", "192.168.111.135");

configuration.set("hbase.zookeeper.property.clientPort", "2181");

// Change this IP to your own VM's IP

configuration.set("hbase.rootdir","hdfs://192.168.111.135:9000/hbase");

try{

connection = ConnectionFactory.createConnection(configuration);

admin = connection.getAdmin();

}catch (IOException e){

e.printStackTrace();

}

}

/*

* Print the names of all tables

* */

public static void tableListPrint() throws IOException {

TableName[] tableNames = admin.listTableNames();

for(TableName tableName : tableNames){

System.out.println(tableName.getNameAsString());

}

}

public static void close(){

try{

if(admin != null){admin.close();}

if(null != connection){connection.close();}

}catch (IOException e){

e.printStackTrace();

}

}

public static void main(String[] args) throws IOException {

init();

tableListPrint();

close();

}

}
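The VM's IP appears in more than one property in `init()`, so it is easy to update one and forget the other. One possible way to keep them in sync is to derive every value from a single IP string. This is only a sketch: the class `ClusterSettings` and the method `forVm` are hypothetical names, and ports 2181 and 9000 are taken from the configuration shown earlier.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class ClusterSettings {

    // Builds the client-side properties used in init() from a single VM IP.
    // Ports 2181 and 9000 are the ones used in this tutorial's configuration files.
    static Map<String, String> forVm(String vmIp) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("hbase.zookeeper.quorum", vmIp);
        props.put("hbase.zookeeper.property.clientPort", "2181");
        props.put("hbase.rootdir", "hdfs://" + vmIp + ":9000/hbase");
        return props;
    }

    public static void main(String[] args) {
        // Assumption: 192.168.111.135 is the example IP from this tutorial.
        forVm("192.168.111.135")
                .forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

Inside `init()`, each entry could then be applied with `configuration.set(key, value)` instead of hard-coding the IP on several lines.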

Miscellaneous

HBase shell commands

# Enter the shell

bin/hbase shell

# List all tables in HBase

list

# Create a new table named StudentInfo with two column families, Personal and Grades

create 'StudentInfo', 'Personal', 'Grades'

# Insert a record into StudentInfo: RowKey 2023001, Personal:Name = 张三 (Zhang San), Grades:Math = 90

put 'StudentInfo','2023001', 'Personal:Name','张三'

put 'StudentInfo','2023001', 'Grades:Math', '90'

# Query everything stored under RowKey 2023001

get 'StudentInfo','2023001'

# Change the value of Grades:Math for 2023001 to 95

put 'StudentInfo', '2023001', 'Grades:Math', '95'

# Delete the Personal:Name column of 2023001

delete 'StudentInfo', '2023001', 'Personal:Name'

# Scan the StudentInfo table to view all records

scan 'StudentInfo'
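To make the effect of these commands easier to picture, the sketch below models a table as nested sorted maps: row key, then `family:qualifier`, then value. It is a simplified in-memory illustration, not HBase code; the class `MiniTable` is hypothetical and it deliberately ignores cell versions and timestamps, which real HBase tracks.

```java
import java.util.Map;
import java.util.TreeMap;

class MiniTable {

    // rowKey -> ("family:qualifier" -> latest value), kept in sorted order.
    private final Map<String, Map<String, String>> rows = new TreeMap<>();

    // Like `put`: writing to an existing column replaces the visible value.
    void put(String rowKey, String column, String value) {
        rows.computeIfAbsent(rowKey, k -> new TreeMap<>()).put(column, value);
    }

    // Like `get`: returns all columns of one row (empty if the row is absent).
    Map<String, String> get(String rowKey) {
        return rows.getOrDefault(rowKey, new TreeMap<>());
    }

    // Like `delete` with a column: removes one column; drops the row once it is empty.
    void delete(String rowKey, String column) {
        Map<String, String> row = rows.get(rowKey);
        if (row != null) {
            row.remove(column);
            if (row.isEmpty()) {
                rows.remove(rowKey);
            }
        }
    }

    public static void main(String[] args) {
        MiniTable table = new MiniTable();
        table.put("2023001", "Personal:Name", "Zhang San");
        table.put("2023001", "Grades:Math", "90");
        table.put("2023001", "Grades:Math", "95");   // update = another put
        table.delete("2023001", "Personal:Name");
        System.out.println(table.get("2023001"));    // prints {Grades:Math=95}
    }
}
```

The main point it illustrates is that an "update" in the shell is just another `put` to the same row key and column.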

Java API

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class Work01 {

public static Configuration configuration;

public static Connection connection;

public static Admin admin;

public static void main(String[] args) throws IOException {

init();

// Delete the table; keep the next line commented out on the first run

// deleteTable("EmployeeRecords");

tableListPrint();

createTable("EmployeeRecords",new String[]{"Info","Salary"});

tableListPrint();

insertData("EmployeeRecords","606","Info","Name","CY");

insertData("EmployeeRecords","606","Info","Department","现代信息产业学院");

insertData("EmployeeRecords","606","Info","Monthly","50000");

getData("EmployeeRecords","606");

updateData("EmployeeRecords","606","60000");

getData("EmployeeRecords","606");

deleteData("EmployeeRecords","606");

close();

}

public static void init(){

System.setProperty("HADOOP_USER_NAME","hadoop");

configuration = HBaseConfiguration.create();

configuration.set("hbase.zookeeper.quorum", "192.168.111.135");

configuration.set("hbase.zookeeper.property.clientPort", "2181");

configuration.set("hbase.rootdir","hdfs://192.168.111.135:9000/hbase");

// Intended to avoid garbled (mojibake) characters

configuration.set("hbase.client.encoding.fallback", "UTF-8");

try{

connection = ConnectionFactory.createConnection(configuration);

admin = connection.getAdmin();

}catch (IOException e){

e.printStackTrace();

}

}

/*

* Print the names of all tables

* */

public static void tableListPrint() throws IOException {

TableName[] tableNames = admin.listTableNames();

System.out.print("All tables: ");

for(TableName tableName : tableNames){

System.out.print(tableName.getNameAsString() + "\t");

}

System.out.println();

}

public static void createTable(String myTableName,String[] colFamily) throws IOException {

TableName tableName = TableName.valueOf(myTableName);

if(admin.tableExists(tableName)){

System.out.println("table already exists!");

}else {

TableDescriptorBuilder tableDescriptor = TableDescriptorBuilder.newBuilder(tableName);

for(String str:colFamily){

ColumnFamilyDescriptor family =

ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes(str)).build();

tableDescriptor.setColumnFamily(family);

}

admin.createTable(tableDescriptor.build());

}

}

public static void deleteTable(String myTableName) throws IOException {

TableName tableName = TableName.valueOf(myTableName);

admin.disableTable(tableName);

admin.deleteTable(tableName);

}

public static void insertData(String tableName,String rowKey,String colFamily,String col,String val) throws IOException {

Table table = connection.getTable(TableName.valueOf(tableName));

// Bytes.toBytes encodes as UTF-8 regardless of the platform default charset

Put put = new Put(Bytes.toBytes(rowKey));

put.addColumn(Bytes.toBytes(colFamily), Bytes.toBytes(col), Bytes.toBytes(val));

table.put(put);

table.close();

}

public static void updateData(String tableName,String rowKey,String val) throws IOException {

Table table = connection.getTable(TableName.valueOf(tableName));

// An update is a put to the same row and column (here Info:Monthly)

Put put = new Put(Bytes.toBytes(rowKey));

put.addColumn(Bytes.toBytes("Info"), Bytes.toBytes("Monthly"), Bytes.toBytes(val));

table.put(put);

table.close();

}

public static void getData(String tableName,String rowKey)throws IOException{

Table table = connection.getTable(TableName.valueOf(tableName));

Get get = new Get(Bytes.toBytes(rowKey));

Result result = table.get(get);

Cell[] cells = result.rawCells();

System.out.print("Row key: "+rowKey);

for (Cell cell : cells) {

// Get the column name

String colName = Bytes.toString(cell.getQualifierArray(),cell.getQualifierOffset(),cell.getQualifierLength());

String value = Bytes.toString(cell.getValueArray(), cell.getValueOffset(), cell.getValueLength());

System.out.print("\t"+colName+":"+value);

}

System.out.println();

table.close();

}

public static void deleteData(String tableName,String rowKey)throws IOException{

Table table = connection.getTable(TableName.valueOf(tableName));

// Delete the entire row identified by rowKey

Delete delete = new Delete(Bytes.toBytes(rowKey));

table.delete(delete);

System.out.println("Deleted successfully");

table.close();

}

public static void close(){

try{

if(admin != null){admin.close();}

if(null != connection){connection.close();}

}catch (IOException e){

e.printStackTrace();

}

}

}
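Whether Chinese text such as the department name survives a round trip depends on writing and reading with the same charset. HBase's `Bytes.toString` decodes as UTF-8, whereas a bare `String.getBytes()` uses the JVM's default charset, which on Windows with a JDK older than 18 is typically a legacy code page rather than UTF-8. The sketch below reproduces the mismatch with the JDK alone; `EncodingDemo` is a hypothetical class, and UTF-16 merely stands in for "some non-UTF-8 default" so the example does not depend on optional charsets being installed.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class EncodingDemo {

    // Encodes with writeCharset and decodes as UTF-8, mimicking a write via
    // String.getBytes(charset) followed by a UTF-8 read.
    static String roundTrip(String text, Charset writeCharset) {
        byte[] stored = text.getBytes(writeCharset);
        return new String(stored, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String department = "现代信息产业学院";
        System.out.println("JVM default charset: " + Charset.defaultCharset());
        // Same charset on both sides: the text comes back intact.
        System.out.println("written as UTF-8:  " + roundTrip(department, StandardCharsets.UTF_8));
        // Different charset on the write side: the text comes back garbled.
        System.out.println("written as UTF-16: " + roundTrip(department, StandardCharsets.UTF_16));
    }
}
```

In the HBase code, passing an explicit charset (`getBytes(StandardCharsets.UTF_8)`) or using `Bytes.toBytes(String)` keeps the write side on UTF-8 as well.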

Summary

The steps above reflect my own experience; I hope they serve as a useful reference.
