Object Detection with CenterNet in C# and ONNX: A Detailed Example

Contents

- Result

- Model information

- Project

- Code

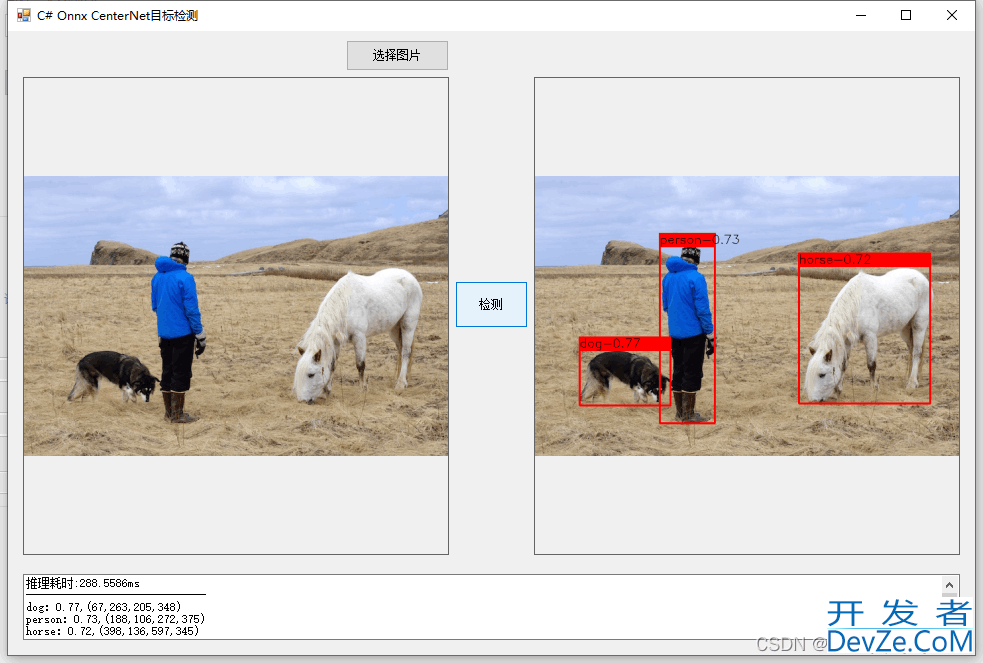

效果

Model information

Inputs

- name: input.1, tensor: Float[1, 3, 384, 384]

Outputs

- name: 508, tensor: Float[1, 80, 96, 96]

- name: 511, tensor: Float[1, 2, 96, 96]

- name: 514, tensor: Float[1, 2, 96, 96]
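The shapes listed above imply a 96×96 output grid with a stride of 4 (384 / 96), and each output becomes a flat buffer in NCHW order once it is copied into an array. As a minimal sketch (the `GridIndex` class and its method names are hypothetical, introduced only for illustration), the flat offset of channel `c` at grid cell `(row, col)` could be computed like this:

```csharp
using System;

// Hypothetical helper for illustration; it is not part of the original project.
public static class GridIndex
{
    // Flat offset of element [0, channel, row, col] in a [1, C, H, W] tensor
    // stored in row-major (NCHW) order.
    public static int Flat(int channel, int row, int col, int gridH, int gridW)
    {
        return channel * gridH * gridW + row * gridW + col;
    }

    // Downsampling factor between the network input and the output grid.
    public static int Stride(int inputSize, int gridSize)
    {
        return inputSize / gridSize;
    }
}
```

For this model, `GridIndex.Stride(384, 96)` is 4, and `GridIndex.Flat(1, 0, 0, 96, 96)` is 9216, the first element of the second channel.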

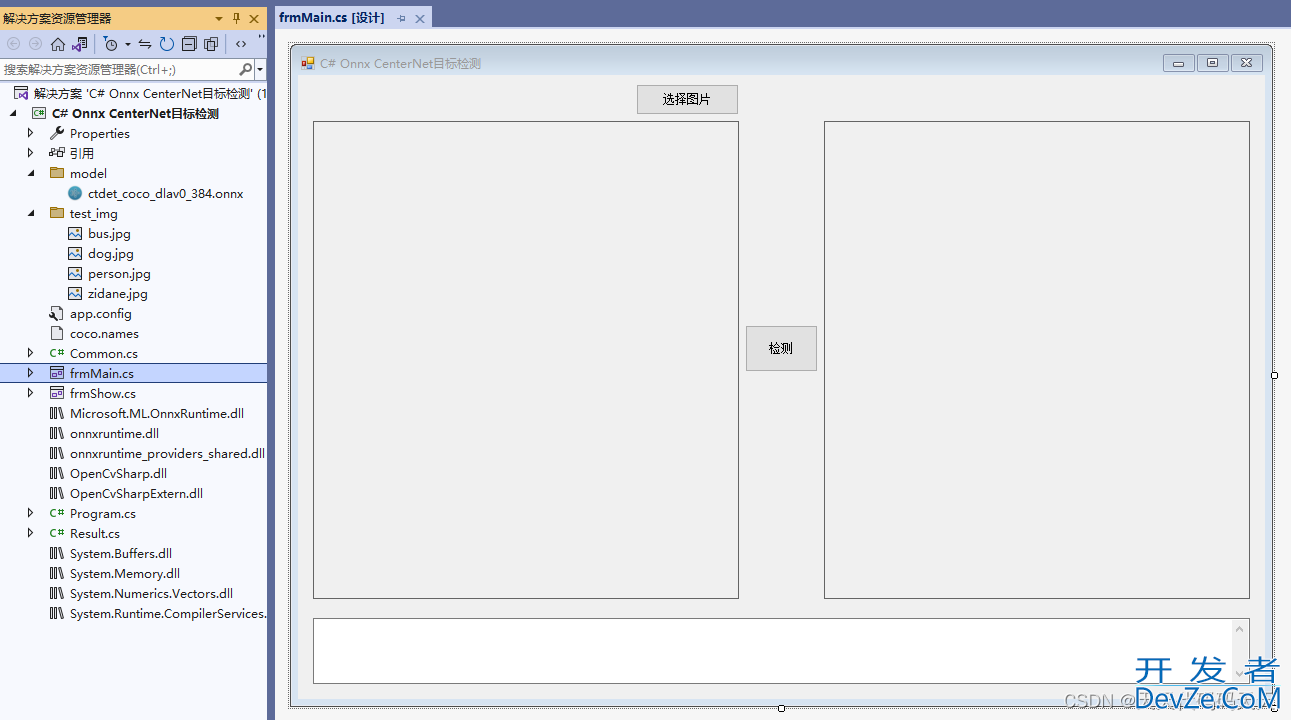

项目

Code

```csharp
using Microsoft.ML.OnnxRuntime;
using Microsoft.ML.OnnxRuntime.Tensors;
using OpenCvSharp;
using OpenCvSharp.Dnn;
using System;
using System.Collections.Generic;
using System.Drawing;
using System.IO;
using System.Linq;
using System.Text;
using System.Windows.Forms;

namespace Onnx_Demo
{
    // Result and Common are helper classes from the same project;
    // their source is not included in this article.
    public partial class frmMain : Form
    {
        public frmMain()
        {
            InitializeComponent();
        }

        string fileFilter = "*.*|*.bmp;*.jpg;*.jpeg;*.tif;*.tiff;*.png";
        string image_path = "";

        DateTime dt1 = DateTime.Now;
        DateTime dt2 = DateTime.Now;

        float confThreshold = 0.4f;
        float nmsThreshold = 0.5f;

        int inpWidth;
        int inpHeight;

        Mat image;

        string model_path = "";

        SessionOptions options;
        InferenceSession onnx_session;
        Tensor<float> input_tensor;
        Tensor<float> input_tensor_scale;
        List<NamedOnnxValue> input_container;
        IDisposableReadOnlyCollection<DisposableNamedOnnxValue> result_infer;
        DisposableNamedOnnxValue[] results_onnxvalue;

        List<string> class_names;
        int num_class;

        StringBuilder sb = new StringBuilder();

        // Per-channel mean and standard deviation used for normalization.
        float[] mean = { 0.406f, 0.456f, 0.485f };
        float[] std = { 0.225f, 0.224f, 0.229f };

        int num_grid_y;
        int num_grid_x;

        float sigmoid(float x)
        {
            return (float)(1.0 / (1.0 + Math.Exp(-x)));
        }

        private void button1_Click(object sender, EventArgs e)
        {
            OpenFileDialog ofd = new OpenFileDialog();
            ofd.Filter = fileFilter;
            if (ofd.ShowDialog() != DialogResult.OK) return;

            pictureBox1.Image = null;
            pictureBox2.Image = null;
            textBox1.Text = "";

            image_path = ofd.FileName;
            pictureBox1.Image = new System.Drawing.Bitmap(image_path);
            image = new Mat(image_path);
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            // Create the session options.
            options = new SessionOptions();
            options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;
            options.AppendExecutionProvider_CPU(0); // Run on the CPU.

            // Create the inference session from the local model file.
            model_path = "model/ctdet_coco_dlav0_384.onnx";

            inpHeight = 384;
            inpWidth = 384;

            num_grid_y = 96;
            num_grid_x = 96;

            onnx_session = new InferenceSession(model_path, options);

            // Create the input container.
            input_container = new List<NamedOnnxValue>();

            image_path = "test_img/person.jpg";
            pictureBox1.Image = new Bitmap(image_path);

            // Load the class names, one per line.
            class_names = new List<string>();
            using (StreamReader sr = new StreamReader("coco.names"))
            {
                string line;
                while ((line = sr.ReadLine()) != null)
                {
                    class_names.Add(line);
                }
            }
            num_class = class_names.Count;
        }

        private unsafe void button2_Click(object sender, EventArgs e)
        {
            if (image_path == "")
            {
                return;
            }

            textBox1.Text = "Detecting, please wait...";
            pictureBox2.Image = null;
            sb.Clear();
            System.Windows.Forms.Application.DoEvents();

            image = new Mat(image_path);

            //----------------- Preprocessing --------------------------
            Mat dstimg = new Mat();
            Cv2.CvtColor(image, dstimg, ColorConversionCodes.BGR2RGB);
            Cv2.Resize(dstimg, dstimg, new OpenCvSharp.Size(inpWidth, inpHeight));

            Mat[] mv = new Mat[3];
            Cv2.Split(dstimg, out mv);
            for (int i = 0; i < mv.Length; i++)
            {
                // (pixel / 255 - mean) / std, written as pixel * alpha + beta.
                mv[i].ConvertTo(mv[i], MatType.CV_32FC1, 1.0 / (255.0 * std[i]), (0.0 - mean[i]) / std[i]);
            }
            Cv2.Merge(mv, dstimg);

            int row = dstimg.Rows;
            int col = dstimg.Cols;

            // Copy the interleaved HWC image into a planar CHW buffer.
            float[] input_tensor_data = new float[1 * 3 * row * col];
            for (int c = 0; c < 3; c++)
            {
                for (int i = 0; i < row; i++)
                {
                    for (int j = 0; j < col; j++)
                    {
                        float pix = ((float*)(dstimg.Ptr(i).ToPointer()))[j * 3 + c];
                        input_tensor_data[c * row * col + i * col + j] = pix;
                    }
                }
            }

            input_tensor = new DenseTensor<float>(input_tensor_data, new[] { 1, 3, inpHeight, inpWidth });

            // Clear any input left over from a previous run before adding the new one.
            input_container.Clear();
            input_container.Add(NamedOnnxValue.CreateFromTensor("input.1", input_tensor));

            //----------------- Inference --------------------------
            dt1 = DateTime.Now;
            result_infer = onnx_session.Run(input_container); // Run inference and get the results.
            dt2 = DateTime.Now;

            //----------------- Postprocessing --------------------------
            results_onnxvalue = result_infer.ToArray();

            float ratioh = (float)image.Rows / inpHeight;
            float ratiow = (float)image.Cols / inpWidth;
            float stride = inpHeight / num_grid_y; // 384 / 96 = 4

            // Outputs in order: class scores, center offsets (x, y), box sizes (w, h).
            float[] pscore = results_onnxvalue[0].AsTensor<float>().ToArray();
            float[] pxy = results_onnxvalue[1].AsTensor<float>().ToArray();
            float[] pwh = results_onnxvalue[2].AsTensor<float>().ToArray();

            int area = num_grid_y * num_grid_x;

            List<float> confidences = new List<float>();
            List<Rect> position_boxes = new List<Rect>();
            List<int> class_ids = new List<int>();
            Result result = new Result();

            for (int i = 0; i < num_grid_y; i++)
            {
                for (int j = 0; j < num_grid_x; j++)
                {
                    // Find the best-scoring class for this grid cell.
                    float max_class_score = -1000;
                    int class_id = -1;
                    for (int c = 0; c < num_class; c++)
                    {
                        float score = sigmoid(pscore[c * area + i * num_grid_x + j]);
                        if (score > max_class_score)
                        {
                            max_class_score = score;
                            class_id = c;
                        }
                    }

                    if (max_class_score > confThreshold)
                    {
                        // Map the cell prediction back to original image coordinates.
                        float cx = (pxy[i * num_grid_x + j] + j) * stride * ratiow;
                        float cy = (pxy[area + i * num_grid_x + j] + i) * stride * ratioh;
                        float w = pwh[i * num_grid_x + j] * stride * ratiow;
                        float h = pwh[area + i * num_grid_x + j] * stride * ratioh;

                        int x = (int)Math.Max(cx - 0.5 * w, 0);
                        int y = (int)Math.Max(cy - 0.5 * h, 0);
                        int width = (int)Math.Min(w, image.Cols - 1);
                        int height = (int)Math.Min(h, image.Rows - 1);

                        position_boxes.Add(new Rect(x, y, width, height));
                        class_ids.Add(class_id);
                        confidences.Add(max_class_score);
                    }
                }
            }

            // Non-maximum suppression (NMS).
            int[] indexes = new int[position_boxes.Count];
            CvDnn.NMSBoxes(position_boxes, confidences, confThreshold, nmsThreshold, out indexes);

            for (int i = 0; i < indexes.Length; i++)
            {
                int index = indexes[i];
                result.add(confidences[index], position_boxes[index], class_names[class_ids[index]]);
            }

            if (pictureBox2.Image != null)
            {
                pictureBox2.Image.Dispose();
            }

            sb.AppendLine("Inference time: " + (dt2 - dt1).TotalMilliseconds + "ms");
            sb.AppendLine("------------------------------");

            // Draw the detections on the image.
            Mat result_image = image.Clone();
            for (int i = 0; i < result.length; i++)
            {
                Cv2.Rectangle(result_image, result.rects[i], new Scalar(0, 0, 255), 2, LineTypes.Link8);

                Cv2.Rectangle(result_image, new OpenCvSharp.Point(result.rects[i].TopLeft.X - 1, result.rects[i].TopLeft.Y - 20),
                    new OpenCvSharp.Point(result.rects[i].BottomRight.X, result.rects[i].TopLeft.Y), new Scalar(0, 0, 255), -1);

                Cv2.PutText(result_image, result.classes[i] + "-" + result.scores[i].ToString("0.00"),
                    new OpenCvSharp.Point(result.rects[i].X, result.rects[i].Y - 4),
                    HersheyFonts.HersheySimplex, 0.6, new Scalar(0, 0, 0), 1);

                sb.AppendLine(string.Format("{0}:{1},({2},{3},{4},{5})"
                    , result.classes[i]
                    , result.scores[i].ToString("0.00")
                    , result.rects[i].TopLeft.X
                    , result.rects[i].TopLeft.Y
                    , result.rects[i].BottomRight.X
                    , result.rects[i].BottomRight.Y
                    ));
            }

            textBox1.Text = sb.ToString();
            pictureBox2.Image = new System.Drawing.Bitmap(result_image.ToMemoryStream());

            result_image.Dispose();
            dstimg.Dispose();
            image.Dispose();
            result_infer.Dispose();
        }

        private void pictureBox2_DoubleClick(object sender, EventArgs e)
        {
            Common.ShowNormalImg(pictureBox2.Image);
        }

        private void pictureBox1_DoubleClick(object sender, EventArgs e)
        {
            Common.ShowNormalImg(pictureBox1.Image);
        }
    }
}
```
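The post-processing loop in the listing above turns every grid cell whose best class score exceeds the confidence threshold into a box. The following is a minimal, dependency-free sketch of that decoding for a single cell. It assumes the same layout as the listing (channel 0 of the offset and size outputs holds x and width, channel 1 holds y and height). `CellDecoder` and `Box` are hypothetical names used only for illustration, and the sketch leaves out the integer truncation and the clamping to the image size that the listing applies.

```csharp
using System;

// Hypothetical types for illustration; the original project uses OpenCvSharp's Rect.
public struct Box
{
    public float X, Y, Width, Height;
}

public static class CellDecoder
{
    public static float Sigmoid(float x)
    {
        return (float)(1.0 / (1.0 + Math.Exp(-x)));
    }

    // Decodes the box predicted at grid cell (row, col).
    // offset and size are flat [2, gridH, gridW] buffers; ratioW and ratioH
    // rescale from the network input size to the original image size.
    public static Box Decode(float[] offset, float[] size, int row, int col,
                             int gridH, int gridW, float stride,
                             float ratioW, float ratioH)
    {
        int area = gridH * gridW;
        int cell = row * gridW + col;

        // Box center: grid position plus predicted sub-cell offset.
        float cx = (offset[cell] + col) * stride * ratioW;
        float cy = (offset[area + cell] + row) * stride * ratioH;

        // Box size, predicted in grid units.
        float w = size[cell] * stride * ratioW;
        float h = size[area + cell] * stride * ratioH;

        return new Box
        {
            X = Math.Max(cx - 0.5f * w, 0f), // top-left corner, clamped at 0
            Y = Math.Max(cy - 0.5f * h, 0f),
            Width = w,
            Height = h
        };
    }
}
```

With this model's values, a call would pass `gridH = gridW = 96` and `stride = 4`, with `ratioW` and `ratioH` computed from the original image size as in the listing.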
