Deploying a MySQL 8 PXC 8.0 Distributed Cluster with Docker

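Contents

- Deploying a MySQL 8 PXC 8.0 distributed cluster with Docker
- Environment
- 1. Create the Docker Swarm cluster
- 1.1 Install Docker with yum
- 1.2 Deploy the Docker Swarm cluster
- 2. Deploy the PXC cluster
- 2.1 Create the CA certificates
- 2.2 Create the cert.cnf file
- 2.3 Create the Docker overlay network
- 2.4 Create the containers
- 2.5 High-availability test
- Summary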

Deploying a MySQL 8 PXC 8.0 distributed cluster with Docker

Environment

| IP | Hostname | Node | Service | Service type |
|---|---|---|---|---|
| 192.168.1.1 | host1 | docker swarm-master | PXC1 | First node (master by default) |
| 192.168.1.2 | host2 | docker swarm-worker1 | PXC2 | Second node |
| 192.168.1.3 | host3 | docker swarm-worker2 | PXC3 | Third node |

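1. Create the Docker Swarm cluster

The cluster depends on an overlay network so that the PXC services can reach each other by hostname across nodes. Here we deploy Docker Swarm directly and use its built-in overlay network.

1.1 Install Docker with yum

Install Docker on all three hosts: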

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache

rpm --import https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

yum -y install docker-ce

systemctl enable docker && systemctl start docker

1.2 Deploy the Docker Swarm cluster

1. On swarm-master, run the following command to create the swarm: docker swarm init --advertise-addr {master host IP}

[root@host1 ~]# docker swarm init --advertise-addr 192.168.1.1

Swarm initialized: current node (l2wbku2vxecmbz8cz94ewjw6d) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-27aqwb4w55kfkcjhwwt1dv0tdf106twja2gu92g6rr9j22bz74-2ld61yjb69uokj3kiwvpfo2il 192.168.1.1:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

[root@host1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

l2wbku2vxecmbz8cz94ewjw6d * host1 Ready Active Leader 19.03.3

Note: If you did not record the full worker join command printed by docker swarm init, you can display it again with docker swarm join-token worker.

2. Copy the docker swarm join command from above and run it on swarm-worker1 and swarm-worker2 to add them to the swarm.

The output is as follows:

[root@host2 ~]# docker swarm join --token SWMTKN-1-27aqwb4w55kfkcjhwwt1dv0tdf106twja2gu92g6rr9j22bz74-2ld61yjb69uokj3kiwvpfo2il 192.168.1.1:2377

This node joined a swarm as a worker.

[root@host3 ~]# docker swarm join --token SWMTKN-1-27aqwb4w55kfkcjhwwt1dv0tdf106twja2gu92g6rr9j22bz74-2ld61yjb69uokj3kiwvpfo2il 192.168.1.1:2377

This node joined a swarm as a worker.

3. On swarm-master, docker node ls shows that the two worker nodes have been added.

[root@host1 ~]# docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

ju6g4tkd8vxgnrw5zvmwmlevw host2 Ready Active 19.03.3

asrqc03tcl4bvxzdxgfs5h60q host3 Ready Active 19.03.3

l2wbku2vxecmbz8cz94ewjw6d * host1 Ready Active Leader 19.03.3
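The node check can also be scripted. The following is a minimal sketch, assuming it runs on swarm-master; the helper name count_ready_nodes is hypothetical and not part of Docker. It counts the nodes whose status is Ready.

```shell
#!/bin/sh
# Hypothetical helper: count nodes whose status is "Ready".
# Reads lines of the form "<hostname> <status>" from standard input.
count_ready_nodes() {
    awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on swarm-master (this environment should report 3):
#   docker node ls --format '{{.Hostname}} {{.Status}}' | count_ready_nodes
```

Keeping the parsing separate from the docker call makes the helper easy to try with sample input before running it against a real swarm.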

2. Deploy the PXC cluster

2.1 Create the CA certificates

Run the following commands on swarm-master:

1. mkdir -p /data/ssl && cd /data/ssl && openssl genrsa 2048 > ca-key.pem

2. openssl req -new -x509 -nodes -days 3600 \
    -key ca-key.pem -out ca.pem

3. openssl req -newkey rsa:2048 -days 3600 \
    -nodes -keyout server-key.pem -out server-req.pem

4. openssl rsa -in server-key.pem -out server-key.pem

5. openssl x509 -req -in server-req.pem -days 3600 \
    -CA ca.pem -CAkey ca-key.pem -set_serial 01 \
    -out server-cert.pem

6. openssl req -newkey rsa:2048 -days 3600 \
    -nodes -keyout client-key.pem -out client-req.pem

7. openssl rsa -in client-key.pem -out client-key.pem

8. openssl x509 -req -in client-req.pem -days 3600 \
    -CA ca.pem -CAkey ca-key.pem -set_serial 01 \
    -out client-cert.pem

9. openssl verify -CAfile ca.pem server-cert.pem client-cert.pem (two OK lines mean success)

server-cert.pem: OK

client-cert.pem: OK

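Problem: step 9 fails with error 18 at 0 depth lookup:self signed certificate

Solution: openssl prompts for a Common Name when you create the CA certificate and the server and client requests. The Common Name given for the CA must differ from the one given for the server and client requests.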
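The Common Name clash can be avoided by passing the subject on the command line with -subj instead of answering the prompts. The following is a minimal sketch under that assumption; the function name make_pxc_certs and the CN values pxc-ca, pxc-server and pxc-client are placeholders chosen for illustration, and the key re-encoding steps (4 and 7) are left out.

```shell
#!/bin/sh
# Hypothetical helper: generate CA, server and client certificates in
# directory $1, giving each a different Common Name via -subj.
# The body runs in a subshell so the caller's working directory is kept.
make_pxc_certs() (
    mkdir -p "$1" && cd "$1" || exit 1

    # CA key and self-signed CA certificate
    openssl genrsa -out ca-key.pem 2048
    openssl req -new -x509 -nodes -days 3600 \
        -key ca-key.pem -out ca.pem -subj "/CN=pxc-ca"

    # Server and client: create key and request, then sign with the CA
    for role in server client; do
        openssl req -newkey rsa:2048 -nodes \
            -keyout "${role}-key.pem" -out "${role}-req.pem" \
            -subj "/CN=pxc-${role}"
        openssl x509 -req -in "${role}-req.pem" -days 3600 \
            -CA ca.pem -CAkey ca-key.pem -set_serial 01 \
            -out "${role}-cert.pem"
    done

    # The exit status of this check is the status of the whole function
    openssl verify -CAfile ca.pem server-cert.pem client-cert.pem
)

# Usage: make_pxc_certs /data/ssl
```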

Copy everything under /data/ssl to the same directory on swarm-worker1 and swarm-worker2 (create /data/ssl on those hosts first if it does not exist):

scp * 192.168.1.2:`pwd`/
scp * 192.168.1.3:`pwd`/

2.2 Create the cert.cnf file

Do this on all three machines:

mkdir -p /data/ssl/cert && vi /data/ssl/cert/cert.cnf

[mysqld]
skip-name-resolve
ssl-ca = /cert/ca.pem
ssl-cert = /cert/server-cert.pem
ssl-key = /cert/server-key.pem

[client]
ssl-ca = /cert/ca.pem
ssl-cert = /cert/client-cert.pem
ssl-key = /cert/client-key.pem

[sst]
encrypt = 4
ssl-ca = /cert/ca.pem
ssl-cert = /cert/server-cert.pem
ssl-key = /cert/server-key.pem
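Because the same file is needed on three machines, it may be easier to write it without an editor. The following is a minimal sketch using a here-document; the function name write_cert_cnf is hypothetical, and the file content repeats the settings from this section unchanged.

```shell
#!/bin/sh
# Hypothetical helper: write cert.cnf into directory $1 without an editor.
write_cert_cnf() {
    mkdir -p "$1" || return 1
    cat > "$1/cert.cnf" <<'EOF'
[mysqld]
skip-name-resolve
ssl-ca = /cert/ca.pem
ssl-cert = /cert/server-cert.pem
ssl-key = /cert/server-key.pem

[client]
ssl-ca = /cert/ca.pem
ssl-cert = /cert/client-cert.pem
ssl-key = /cert/client-key.pem

[sst]
encrypt = 4
ssl-ca = /cert/ca.pem
ssl-cert = /cert/server-cert.pem
ssl-key = /cert/server-key.pem
EOF
}

# Usage on each host: write_cert_cnf /data/ssl/cert
```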

2.3 Create the Docker overlay network

Run the following command on swarm-master:

docker network create -d overlay --attachable swarm_mysql
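Running docker network create a second time for a name that already exists returns an error, so a script that may be re-run can check first. The following is a minimal sketch; the function name ensure_overlay_network is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: create the attachable overlay network $1 only if
# docker network inspect cannot find it.
ensure_overlay_network() {
    if docker network inspect "$1" >/dev/null 2>&1; then
        echo "network $1 already exists"
    else
        docker network create -d overlay --attachable "$1"
    fi
}

# Usage on swarm-master: ensure_overlay_network swarm_mysql
```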

2.4 Create the containers

- 2.4.1 Initialize the cluster and create the first node

Run the following command on swarm-master:

docker run -d --name=pn1 \
    --net=swarm_mysql \
    --restart=always \
    -p 9001:3306 \
    --privileged \
    -e TZ=Asia/Shanghai \
    -e MYSQL_ROOT_PASSWORD=mT123456 \
    -e CLUSTER_NAME=PXC1 \
    -v /data/ssl:/cert \
    -v mysql:/var/lib/mysql/ \
    -v /data/ssl/cert:/etc/percona-xtradb-cluster.conf.d \
    percona/percona-xtradb-cluster:8.0

- 2.4.2 Create the second node

Run the following command on swarm-worker1:

docker run -d --name=pn2 \
    --net=swarm_mysql \
    --restart=always \
    -p 9001:3306 \
    --privileged \
    -e TZ=Asia/Shanghai \
    -e MYSQL_ROOT_PASSWORD=mT123456 \
    -e CLUSTER_NAME=PXC1 \
    -e CLUSTER_JOIN=pn1 \
    -v /data/ssl:/cert \
    -v mysql:/var/lib/mysql/ \
    -v /data/ssl/cert:/etc/percona-xtradb-cluster.conf.d \
    percona/percona-xtradb-cluster:8.0

- 2.4.3 Create the third node

Run the following command on swarm-worker2:

docker run -d --name=pn3 \
    --net=swarm_mysql \
    --restart=always \
    -p 9001:3306 \
    --privileged \
    -e TZ=Asia/Shanghai \
    -e MYSQL_ROOT_PASSWORD=mT123456 \
    -e CLUSTER_NAME=PXC1 \
    -e CLUSTER_JOIN=pn1 \
    -v /data/ssl:/cert \
    -v mysql:/var/lib/mysql/ \
    -v /data/ssl/cert:/etc/percona-xtradb-cluster.conf.d \
    percona/percona-xtradb-cluster:8.0
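The three docker run commands differ only in the container name and in whether CLUSTER_JOIN is set. The following is a minimal sketch that prints the command for a given node; the function name pxc_run_cmd is hypothetical, and all option values are copied from the commands in this section.

```shell
#!/bin/sh
# Hypothetical helper: print the docker run command for one PXC node.
#   $1 = container name (pn1, pn2 or pn3)
#   $2 = name of a running node to join; omit it for the first node
pxc_run_cmd() {
    join_opt=""
    if [ -n "$2" ]; then
        join_opt=" -e CLUSTER_JOIN=$2"
    fi
    echo "docker run -d --name=$1 --net=swarm_mysql --restart=always" \
         "-p 9001:3306 --privileged -e TZ=Asia/Shanghai" \
         "-e MYSQL_ROOT_PASSWORD=mT123456 -e CLUSTER_NAME=PXC1$join_opt" \
         "-v /data/ssl:/cert -v mysql:/var/lib/mysql/" \
         "-v /data/ssl/cert:/etc/percona-xtradb-cluster.conf.d" \
         "percona/percona-xtradb-cluster:8.0"
}

# Usage: pxc_run_cmd pn1        (first node, no CLUSTER_JOIN)
#        pxc_run_cmd pn2 pn1    (second node joins pn1)
```

Printing the command rather than running it lets you review it on each host first; piping the output to sh would execute it.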

- 2.4.4 Check the cluster status

On swarm-master, run the following command to check the cluster status:

docker exec -it pn1 /usr/bin/mysql -uroot -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 19
Server version: 8.0.25-15.1 Percona XtraDB Cluster (GPL), Release rel15, Revision 8638bb0, WSREP version 26.4.3
Copyright (c) 2009-2021 Percona LLC and/or its affiliates
Copyright (c) 2000, 2021, Oracle and/or its affiliates.
Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
You are enforcing ssl connection via Unix socket. Please consider switching ssl off as it does not make connection via unix socket any more secure.
mysql> show status like 'wsrep%';
+------------------------------+-------------------------------------------------+
| Variable_name | Value |
+------------------------------+-------------------------------------------------+
| wsrep_local_state_uuid | 625318e2-9e1c-11e7-9d07-aee70d98d8ac |
...
| wsrep_local_state_comment | Synced |
...
| wsrep_incoming_addresses | ce9ffe81e7cb:3306,89ba74cac488:3306,0e35f30ba764:3306 |
...
| wsrep_cluster_conf_id | 3 |
| wsrep_cluster_size | 3 |
| wsrep_cluster_state_uuid | 625318e2-9e1c-11e7-9d07-aee70d98d8ac |
| wsrep_cluster_status | Primary |
| wsrep_connected | ON |
...
| wsrep_ready | ON |
+------------------------------+-------------------------------------------------+
59 rows in set (0.02 sec)

The cluster is healthy when wsrep_local_state_comment is Synced, wsrep_incoming_addresses lists three addresses, wsrep_connected is ON, and wsrep_ready is ON.
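The four health conditions can be checked by a script instead of by eye. The following is a minimal sketch, assuming the status is supplied as tab-separated name and value pairs, which is what the mysql client prints in batch mode; the function name pxc_is_healthy and the variable PXC_ROOT_PW in the usage line are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "name<TAB>value" status lines from standard
# input and succeed only if all four health conditions hold.
#   $1 = expected number of nodes (3 in this environment)
pxc_is_healthy() {
    awk -F '\t' -v want="$1" '
        $1 == "wsrep_local_state_comment" { synced = ($2 == "Synced") }
        $1 == "wsrep_incoming_addresses"  { addrs = split($2, parts, ",") }
        $1 == "wsrep_connected"           { connected = ($2 == "ON") }
        $1 == "wsrep_ready"               { ready = ($2 == "ON") }
        END { exit !(synced && connected && ready && addrs == want) }
    '
}

# Usage on swarm-master (PXC_ROOT_PW is an assumed variable holding the
# root password):
#   docker exec pn1 mysql -uroot -p"$PXC_ROOT_PW" -N -B \
#       -e "SHOW STATUS LIKE 'wsrep%'" | pxc_is_healthy 3 && echo healthy
```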

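2.5 High-availability test

- 2.5.1 Restarting a non-master node

While pn1 (master) is running normally, restarting pn2 or pn3 causes no problems. The detailed steps are omitted; the main thing to test is whether all nodes synchronize data correctly after the restart.

docker restart pn2    # or: docker restart pn3

- 2.5.2 Restarting the master node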

docker restart pn1

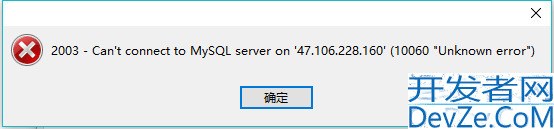

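After a simulated restart, pn1 (master) fails to start normally and has to rejoin the cluster as an ordinary member. The container name is reused, so remove the old pn1 container first (for example with docker rm -f pn1), then recreate it with the following command:

docker run -d --name=pn1 \
    --net=swarm_mysql \
    --restart=always \
    -p 9001:3306 \
    --privileged \
    -e TZ=Asia/Shanghai \
    -e MYSQL_ROOT_PASSWORD=mT123456 \
    -e CLUSTER_NAME=PXC1 \
    -e CLUSTER_JOIN=pn2 \
    -v /data/ssl:/cert \
    -v mysql:/var/lib/mysql/ \
    -v /data/ssl/cert:/etc/percona-xtradb-cluster.conf.d \
    percona/percona-xtradb-cluster:8.0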

Summary

The above is based on personal experience; I hope it serves as a useful reference.
