MySQL Router High Availability Setup and Troubleshooting Summary

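Contents

- 1. Installation overview

- 1.1 Purpose

- 1.2 MySQL Router high-availability components

- 1.2.1 corosync

- 1.2.2 pacemaker

- 1.2.3 ldirectord

- 2. High-availability setup

- 2.1 Basic environment setup (on all three servers)

- 2.2 Building a read/write-splitting MGR cluster with MySQL Router

- 2.3 Deploying and starting MySQL Router on each of the three servers

- 2.4 Verifying connections through the three MySQL Routers

- 2.5 Installing pacemaker

- 2.6 Installing ldirectord (on all three servers)

- 2.7 Configuring the VIP on the loopback interface (on all three servers)

- 2.8 Adding cluster resources (on any one node)

- 2.9 Starting and stopping the cluster

- 3. High-availability and load-balancing tests

- 4. Troubleshooting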

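1. Installation overview

1.1 Purpose

MySQL's official InnoDB Cluster consists of MySQL Group Replication (MGR) and MySQL Router. MGR provides high availability at the database layer on its own, while MySQL Router proxies client access. Once deployed, however, a single MySQL Router is a single point of failure: if it goes down, the availability of the whole database cluster suffers. To improve the availability of the database system, MySQL Router therefore needs its own high-availability setup.

1.2 MySQL Router high-availability components

The high-availability scheme in this article is built mainly on Corosync and Pacemaker, two open-source projects that together provide communication, synchronization, resource management, and failover for high-availability clusters.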

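1.2.1 corosync

Corosync is an open-source communication and synchronization service for high-availability clusters. It handles communication and data synchronization between cluster nodes and provides reliable message delivery and membership management, so that the cluster runs stably in a distributed environment. Corosync communicates over a reliable UDP multicast protocol and offers a pluggable protocol-stack interface that supports several protocols and network environments. It also exposes an API that lets other applications use its communication and synchronization services.

1.2.2 pacemaker

Pacemaker is an open-source resource manager and failover tool for high-availability clusters. It automatically manages resources (such as virtual IPs, file systems, and databases) across cluster nodes and migrates them when a node or resource fails, keeping the whole system available and running. Pacemaker supports several resource-management policies that can be configured to suit different needs. It also provides a flexible plugin framework that covers different cluster environments and scenarios, such as virtualization and cloud computing.

Combined, Corosync and Pacemaker form a complete high-availability cluster solution: Corosync handles communication and synchronization between the nodes, and Pacemaker handles resource management and failover.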

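1.2.3 ldirectord

ldirectord is a load-balancing tool for Linux. It manages a service that runs on several servers and distributes client requests across them, improving the availability and performance of the service. ldirectord is usually combined with cluster software such as Heartbeat or Pacemaker to provide both high availability and load balancing. Its main uses are:

- Load balancing: ldirectord can distribute requests with different scheduling algorithms, such as round robin, weighted round robin, least connections, and source hashing, so that client requests are spread over the backend servers.

- Health checks: ldirectord periodically checks whether each backend server is available and removes unavailable servers from the pool, keeping the service available and stable.

- Session persistence: requests from the same client IP address can be routed to the same backend server, so that an established client session stays on one server.

- Dynamic configuration: backend servers and services can be added, removed, or changed through the command line or the configuration file.

ldirectord was written specifically to monitor LVS: it watches the state of the servers in the server pool of an LVS setup. It runs on the IPVS node as a daemon and, once started, sends check requests to every real server in the pool. If a server does not respond, ldirectord considers it unavailable and runs ipvsadm to delete it from the IPVS table; if a later check gets a response again, it uses ipvsadm to add the server back.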
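The check-and-update cycle described above can be sketched in shell. This is only an illustration under stated assumptions, not ldirectord's actual implementation: the function name `plan_ipvs_action` and its arguments are hypothetical, and the function only prints the `ipvsadm` command that would be needed (`-a`/`-d` add or delete a real server, `-t` names the TCP virtual service, `-r` the real server, `-g` selects direct routing, `-w` sets the weight). The addresses are taken from the installation plan in this article.

```shell
#!/bin/bash
# Hypothetical helper: decide what to do with one real server after a health check.
# $1 = virtual service (VIP:port), $2 = real server (IP:port),
# $3 = previous state (up|down), $4 = current check result (up|down).
# Prints the ipvsadm command that would be needed; prints nothing if the state is unchanged.
plan_ipvs_action() {
    local vip="$1" rip="$2" before="$3" now="$4"
    if [ "$before" = "up" ] && [ "$now" = "down" ]; then
        # Server stopped answering: remove it from the IPVS table.
        echo "ipvsadm -d -t $vip -r $rip"
    elif [ "$before" = "down" ] && [ "$now" = "up" ]; then
        # Server answers again: add it back in direct-routing mode with weight 1.
        echo "ipvsadm -a -t $vip -r $rip -g -w 1"
    fi
}

# Example: the Router on 172.17.139.164 stops answering, then recovers.
plan_ipvs_action 172.17.129.1:6446 172.17.139.164:6446 up down
plan_ipvs_action 172.17.129.1:6446 172.17.139.164:6446 down up
```

Printing the command instead of running it keeps the sketch safe to try on any machine, including one without IPVS.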

安装规划

MySQL及MySQL Router版本均为8.0.32

| IP | 主机名 | 安装组件 | 使用端口 |

|---|---|---|---|

| 172.17.140.25 | gdb1 | MySQL MySQL Router ipvsadm ldirectord pcs pacemaker corosync | MySQL:3309 MySQL Router:6446 MySQL Router:6447 pcs_tcp:13314 pcs_udp:13315 |

| 172.17.140.24 | gDB2 | MySQL MySQL Router ipvsadm ldirectord pcs pacemaker corosync | MySQL:3309 MySQL Router:6446 MySQL Router:6447 pcs_tcp:13314 pcs_udp:13315 |

| 172.17.139.164 | gdb3 | MySQL MySQL Router ipvsadm ldirectord pcs pacemaker corosync | MySQL:3309 MySQL Router:6446 MySQL Router:6447 pcs_tcp:13314 pcs_udp:13315 |

| 172.17.129.1 | VIP | 6446、6447 | |

| 172.17.139.62 | MySQL client |

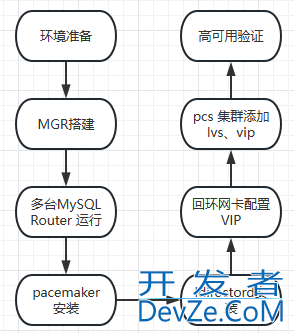

大概安装步骤如下

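2. High-availability setup

2.1 Basic environment setup (on all three servers)

Set the hostname on each of the three servers according to the plan, running the matching command on each server:

hostnamectl set-hostname gdb1

hostnamectl set-hostname gdb2

hostnamectl set-hostname gdb3

Append the following lines to /etc/hosts on all three servers:

172.17.140.25 gdb1

172.17.140.24 gdb2

172.17.139.164 gdb3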
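Appending to /etc/hosts by hand can leave duplicate entries if the step is repeated. A minimal sketch of an idempotent append follows; the helper name `add_host_entry` and the `HOSTS_FILE` variable are hypothetical, and the demo writes to a scratch file unless `HOSTS_FILE` is set.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not already present.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Match NAME as a whole whitespace-delimited field on a non-comment line.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        echo "$ip $name" >> "$file"
    fi
}

# Demo against a scratch file; set HOSTS_FILE=/etc/hosts (as root) to apply for real.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
add_host_entry "$HOSTS_FILE" 172.17.140.25  gdb1
add_host_entry "$HOSTS_FILE" 172.17.140.24  gdb2
add_host_entry "$HOSTS_FILE" 172.17.139.164 gdb3
cat "$HOSTS_FILE"
```

Running the script a second time against the same file adds nothing, which makes it safe to include in a repeatable setup script.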

在三台服务器上禁用防火墙

systemctl stop firewalld

systemctl disable firewalld

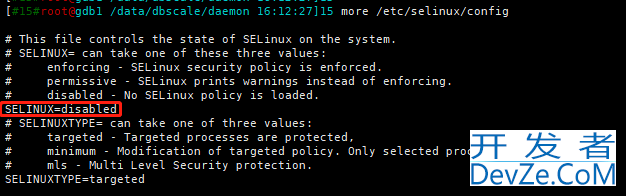

在三台服务器上禁用selinux,如果selinux未关闭,修改配置文件后,需要重启服务器才会生效

如下输出表示完成关闭
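The article does not show the SELinux commands themselves. One common way to do it on CentOS 7 is sketched below; this is an assumption about the procedure, not the author's exact commands. The helper name `set_selinux_disabled` is hypothetical, while `setenforce`, `getenforce`, and /etc/selinux/config are the standard tools and path.

```shell
#!/bin/bash
# Hypothetical helper: set SELINUX=disabled in the given config file
# (normally /etc/selinux/config). SELINUXTYPE= lines are left untouched.
set_selinux_disabled() {
    local cfg="$1"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Typical use on each server (as root):
#   setenforce 0                              # permissive mode for the running system
#   set_selinux_disabled /etc/selinux/config  # persistent setting, applied at next boot
#   getenforce                                # shows the current mode
```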

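Run the following commands on each of the three servers to establish SSH mutual trust.

Mutual trust only makes it easier to copy files between the servers; it is not a prerequisite for building the cluster.

ssh-keygen -t dsa

ssh-copy-id gdb1

ssh-copy-id gdb2

ssh-copy-id gdb3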

The execution looks like this:

[#19#root@gdb1 ~ 16:16:54]19 ssh-keygen -t dsa

Generating public/private dsa key pair.

Enter file in which to save the key (/root/.ssh/id_dsa): ## press Enter

/root/.ssh/id_dsa already exists.

Overwrite (y/n)? y ## enter y to overwrite an existing SSH key file

Enter passphrase (empty for no passphrase): ## press Enter

Enter same passphrase again: ## press Enter

Your identification has been saved in /root/.ssh/id_dsa.

Your public key has been saved in /root/.ssh/id_dsa.pub.

The key fingerprint is:

SHA256:qwJXgfN13+N1U5qvn9fC8pyhA29iuXvQVhCupExzgTc root@gdb1

The key's randomart image is:

+---[DSA 1024]----+

| . .. .. |

| o . o Eo. .|

| o ooooo.o o.|

| oo = .. *.o|

| . S .. o +o|

| . . .o o . .|

| o . * ....|

| . . + *o+o+|

| .. .o*.+++o|

+----[SHA256]-----+

[#20#root@gdb1 ~ 16:17:08]20 ssh-copy-id gdb1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_dsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@gdb1's password: ## enter the root password of gdb1

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'gdb1'"

and check to make sure that only the key(s) you wanted were added.

[#21#root@gdb1 ~ 16:17:22]21 ssh-copy-id gdb2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_dsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@gdb2's password: ## enter the root password of gdb2

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'gdb2'"

and check to make sure that only the key(s) you wanted were added.

[#22#root@gdb1 ~ 16:17:41]22 ssh-copy-id gdb3

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_dsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@gdb3's password: ## enter the root password of gdb3

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'gdb3'"

and check to make sure that only the key(s) you wanted were added.

[#23#root@gdb1 ~ 16:17:44]23

If you can switch between the servers without being asked for a password, mutual trust has been established:

[#24#root@gdb1 ~ 16:21:16]24 ssh gdb1

Last login: Tue Feb 21 16:21:05 2023 from 172.17.140.25

[#1#root@gdb1 ~ 16:21:19]1 logout

Connection to gdb1 closed.

[#25#root@gdb1 ~ 16:21:19]25 ssh gdb2

Last login: Tue Feb 21 16:21:09 2023 from 172.17.140.25

[#1#root@gdb2 ~ 16:21:21]1 logout

Connection to gdb2 closed.

[#26#root@gdb1 ~ 16:21:21]26 ssh gdb3

Last login: Tue Feb 21 10:53:47 2023

[#1#root@gdb3 ~ 16:21:22]1 logout

Connection to gdb3 closed.

[#27#root@gdb1 ~ 16:21:24]27

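Synchronize the clocks. Clock synchronization matters for both distributed and centralized clusters, because inconsistent time causes all kinds of abnormal behavior.

yum -y install ntpdate # install the ntpdate client

ntpdate ntp1.aliyun.com # with Internet access, use the Alibaba Cloud NTP server; otherwise point to an internal NTP server

hwclock -w # write the system time to the hardware (BIOS) clock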

2.2 Building a read/write-splitting MGR cluster with MySQL Router

For details, see https://gitee.com/GreatSQL/GreatSQL-Doc/blob/master/deep-dive-mgr/deep-dive-mgr-07.md

2.3 Deploying and starting MySQL Router on each of the three servers

The MySQL Router configuration file is as follows:

# File automatically generated during MySQL Router bootstrap

[DEFAULT]

name=system

user=root

keyring_path=/opt/software/mysql-router-8.0.32-linux-glibc2.17-x86_64-minimal/var/lib/mysqlrouter/keyring

master_key_path=/opt/software/mysql-router-8.0.32-linux-glibc2.17-x86_64-minimal/mysqlrouter.key

connect_timeout=5

read_timeout=30

dynamic_state=/opt/software/mysql-router-8.0.32-linux-glibc2.17-x86_64-minimal/bin/../var/lib/mysqlrouter/state.json

client_ssl_cert=/opt/software/mysql-router-8.0.32-linux-glibc2.17-x86_64-minimal/var/lib/mysqlrouter/router-cert.pem

client_ssl_key=/opt/software/mysql-router-8.0.32-linux-glibc2.17-x86_64-minimal/var/lib/mysqlrouter/router-key.pem

client_ssl_mode=DISABLED

server_ssl_mode=AS_CLIENT

server_ssl_verify=DISABLED

unknown_config_option=error

[logger]

level=INFO

[metadata_cache:bootstrap]

cluster_type=gr

router_id=1

user=mysql_router1_g9c62rk29lcn

metadata_cluster=gdbCluster

ttl=0.5

auth_cache_ttl=-1

auth_cache_refresh_interval=2

use_gr_notifications=0

[routing:bootstrap_rw]

bind_address=0.0.0.0

bind_port=6446

destinations=metadata-cache://gdbCluster/?role=PRIMARY

routing_strategy=first-available

protocol=classic

[routing:bootstrap_ro]

bind_address=0.0.0.0

bind_port=6447

destinations=metadata-cache://gdbCluster/?role=SECONDARY

routing_strategy=round-robin-with-fallback

protocol=classic

[routing:bootstrap_x_rw]

bind_address=0.0.0.0

bind_port=6448

destinations=metadata-cache://gdbCluster/?role=PRIMARY

routing_strategy=first-available

protocol=x

[routing:bootstrap_x_ro]

bind_address=0.0.0.0

bind_port=6449

destinations=metadata-cache://gdbCluster/?role=SECONDARY

routing_strategy=round-robin-with-fallback

protocol=x

[http_server]

port=8443

ssl=1

ssl_cert=/opt/software/mysql-router-8.0.32-linux-glibc2.17-x86_64-minimal/var/lib/mysqlrouter/router-cert.pem

ssl_key=/opt/software/mysql-router-8.0.32-linux-glibc2.17-x86_64-minimal/var/lib/mysqlrouter/router-key.pem

[http_auth_realm:default_auth_realm]

backend=default_auth_backend

method=basic

name=default_realm

[rest_router]

require_realm=default_auth_realm

[rest_api]

[http_auth_backend:default_auth_backend]

backend=metadata_cache

[rest_routing]

require_realm=default_auth_realm

[rest_metadata_cache]

require_realm=default_auth_realm

2.4 Verifying connections through the three MySQL Routers

[#12#root@gdb2 ~ 14:12:45]12 mysql -uroot -pAbc1234567* -h172.17.140.25 -P6446 -N -e 'select now()' 2> /dev/null

+---------------------+

| 2023-03-17 14:12:46 |

+---------------------+

[#13#root@gdb2 ~ 14:12:46]13 mysql -uroot -pAbc1234567* -h172.17.140.25 -P6447 -N -e 'select now()' 2> /dev/null

+---------------------+

| 2023-03-17 14:12:49 |

+---------------------+

[#14#root@gdb2 ~ 14:12:49]14 mysql -uroot -pAbc1234567* -h172.17.140.24 -P6446 -N -e 'select now()' 2> /dev/null

+---------------------+

| 2023-03-17 14:12:52 |

+---------------------+

[#15#root@gdb2 ~ 14:12:52]15 mysql -uroot -pAbc1234567* -h172.17.140.24 -P6447 -N -e 'select now()' 2> /dev/null

+---------------------+

| 2023-03-17 14:12:55 |

+---------------------+

[#16#root@gdb2 ~ 14:12:55]16 mysql -uroot -pAbc1234567* -h172.17.139.164 -P6446 -N -e 'select now()' 2> /dev/null

+---------------------+

| 2023-03-17 14:12:58 |

+---------------------+

[#17#root@gdb2 ~ 14:12:58]17 mysql -uroot -pAbc1234567* -h172.17.139.164 -P6447 -N -e 'select now()' 2> /dev/null

+---------------------+

| 2023-03-17 14:13:01 |

+---------------------+

[#18#root@gdb2 ~ 14:13:01]18
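The six checks above follow one pattern, so they can be generated in a loop. The sketch below is an illustration only: the function name `router_probe_commands` is hypothetical, and it assumes the password is supplied through the `MYSQL_PWD` environment variable instead of `-p` on the command line. It prints the commands so they can be reviewed before running.

```shell
#!/bin/bash
# Hypothetical helper: print one mysql probe command per Router host and port.
# Assumption: the password is exported as MYSQL_PWD rather than passed with -p.
router_probe_commands() {
    local host port
    for host in 172.17.140.25 172.17.140.24 172.17.139.164; do
        for port in 6446 6447; do
            echo "mysql -uroot -h$host -P$port -N -e 'select now()'"
        done
    done
}

# Print the six commands; pipe the output to "sh" to actually run the checks.
router_probe_commands
```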

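2.5 Installing pacemaker

pacemaker depends on the corosync package, so installing pacemaker alone is enough:

[#1#root@gdb1 ~ 10:05:55]1 yum -y install pacemaker

Install the pcs management tool:

[#1#root@gdb1 ~ 10:05:55]1 yum -y install pcs

Set up the operating-system user for cluster authentication. The user name is hacluster and its password is set to abc123:

[#13#root@gdb1 ~ 10:54:13]13 echo abc123 | passwd --stdin hacluster

Changing password for user hacluster.

passwd: all authentication tokens updated successfully.

Start pcsd and enable it at boot: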

[#16#root@gdb1 ~ 10:55:30]16 systemctl enable pcsd

Created symlink from /etc/systemd/system/multi-user.target.wants/pcsd.service to /usr/lib/systemd/system/pcsd.service.

[#17#root@gdb1 ~ 10:56:03]17 systemctl start pcsd

[#18#root@gdb1 ~ 10:56:08]18 systemctl status pcsd

● pcsd.service - PCS GUI and remote configuration interface

Loaded: loaded (/usr/lib/systemd/system/pcsd.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2023-02-22 10:56:08 CST; 6s ago

Docs: man:pcsd(8)

man:pcs(8)

Main PID: 27677 (pcsd)

Tasks: 4

Memory: 29.9M

CGroup: /system.slice/pcsd.service

└─27677 /usr/bin/ruby /usr/lib/pcsd/pcsd

2月 22 10:56:07 gdb1 systemd[1]: Starting PCS GUI and remote configuration interface...

2月 22 10:56:08 gdb1 systemd[1]: Started PCS GUI and remote configuration interface.

[#19#root@gdb1 ~ 10:56:14]19

Change the pcsd TCP port to the planned value, 13314:

sed -i '/#PCSD_PORT=2224/a\ PCSD_PORT=13314' /etc/sysconfig/pcsd

Restart the pcsd service so that the new port takes effect:

[#23#root@gdb1 ~ 11:23:20]23 systemctl restart pcsd

[#24#root@gdb1 ~ 11:23:39]24 systemctl status pcsd

● pcsd.service - PCS GUI and remote configuration interface

Loaded: loaded (/usr/lib/systemd/system/pcsd.service; enabled; vendor preset: disabled)

Active: active (running) since 三 2023-02-22 11:23:39 CST; 5s ago

Docs: man:pcsd(8)

man:pcs(8)

Main PID: 30041 (pcsd)

Tasks: 4

Memory: 27.3M

CGroup: /system.slice/pcsd.service

└─30041 /usr/bin/ruby /usr/lib/pcsd/pcsd

2月 22 11:23:38 gdb1 systemd[1]: Starting PCS GUI and remote configuration interface...

2月 22 11:23:39 gdb1 systemd[1]: Started PCS GUI and remote configuration interface.

[#25#root@gdb1 ~ 11:23:45]25

Set up cluster authentication, using the operating-system user hacluster:

[#27#root@gdb1 ~ 11:31:43]27 cp /etc/corosync/corosync.conf.example /etc/corosync/corosync.conf

[#28#root@gdb1 ~ 11:32:15]28 pcs cluster auth gdb1:13314 gdb2:13314 gdb3:13314 -u hacluster -p 'abc123'

gdb1: Authorized

gdb2: Authorized

gdb3: Authorized

[#29#root@gdb1 ~ 11:33:18]29

Create the cluster; run this on any one node:

## Cluster name gdb_ha, UDP port 13315, netmask 24, cluster members gdb1, gdb2, gdb3

[#31#root@gdb1 ~ 11:41:48]31 pcs cluster setup --force --name gdb_ha --transport=udp --addr0 24 --mcastport0 13315 gdb1 gdb2 gdb3

Destroying cluster on nodes: gdb1, gdb2, gdb3...

gdb1: Stopping Cluster (pacemaker)...

gdb2: Stopping Cluster (pacemaker)...

gdb3: Stopping Cluster (pacemaker)...

gdb2: Successfully destroyed cluster

gdb1: Successfully destroyed cluster

gdb3: Successfully destroyed cluster

Sending 'pacemaker_remote authkey' to 'gdb1', 'gdb2', 'gdb3'

gdb2: successful distribution of the file 'pacemaker_remote authkey'

gdb3: successful distribution of the file 'pacemaker_remote authkey'

gdb1: successful distribution of the file 'pacemaker_remote authkey'

Sending cluster config files to the nodes...

gdb1: Succeeded

gdb2: Succeeded

gdb3: Succeeded

Synchronizing pcsd certificates on nodes gdb1, gdb2, gdb3...

gdb1: Success

gdb2: Success

gdb3: Success

Restarting pcsd on the nodes in order to reload the certificates...

gdb1: Success

gdb2: Success

gdb3: Success

Check the complete cluster configuration; it can be viewed on any node:

[#21#root@gdb2 ~ 11:33:18]21 more /etc/corosync/corosync.conf

totem {

version: 2

cluster_name: gdb_ha

secauth: off

transport: udp

rrp_mode: passive

interface {

ringnumber: 0

bindnetaddr: 24

mcastaddr: 239.255.1.1

mcastport: 13315

}

}

nodelist {

node {

ring0_addr: gdb1

nodeid: 1

}

node {

ring0_addr: gdb2

nodeid: 2

}

node {

ring0_addr: gdb3

nodeid: 3

}

}

quorum {

provider: corosync_votequorum

}

logging {

to_logfile: yes

logfile: /var/log/cluster/corosync.log

to_syslog: yes

}

[#22#root@gdb2 ~ 14:23:50]22

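Start the pacemaker-related services on all cluster nodes; run this on any one node:

[#35#root@gdb1 ~ 15:30:51]35 pcs cluster start --all

gdb1: Starting Cluster (corosync)...

gdb2: Starting Cluster (corosync)...

gdb3: Starting Cluster (corosync)...

gdb3: Starting Cluster (pacemaker)...

gdb1: Starting Cluster (pacemaker)...

gdb2: Starting Cluster (pacemaker)...

To stop the services, use pcs cluster stop --all, or pcs cluster stop <server> to stop a single node.

Enable the pacemaker-related services at boot on every node:

[#35#root@gdb1 ~ 15:30:51]35 systemctl enable pcsd corosync pacemaker

[#36#root@gdb1 ~ 15:30:53]36 pcs cluster enable --all

If there is no STONITH device, disable STONITH.

With STONITH disabled, resources such as the Distributed Lock Manager (DLM) and every service that depends on DLM (for example cLVM2, GFS2, and OCFS2) cannot start. If STONITH is left enabled without a device, error messages are reported.

pcs property set stonith-enabled=false

The full command sequence and its output are as follows: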

[#32#root@gdb1 ~ 15:48:20]32 systemctl status pacemaker

● pacemaker.service - Pacemaker High Availability Cluster Manager

Loaded: loaded (/usr/lib/systemd/system/pacemaker.service; disabled; vendor preset: disabled)

Active: active (running) since 三 2023-02-22 15:35:48 CST; 1min 54s ago

Docs: man:pacemakerd

https://clusterlabs.org/pacemaker/doc/en-US/Pacemaker/1.1/html-single/Pacemaker_Explained/index.html

Main PID: 25661 (pacemakerd)

Tasks: 7

Memory: 51.1M

CGroup: /system.slice/pacemaker.service

├─25661 /usr/sbin/pacemakerd -f

├─25662 /usr/libexec/pacemaker/cib

├─25663 /usr/libexec/pacemaker/stonithd

├─25664 /usr/libexec/pacemaker/lrmd

├─25665 /usr/libexec/pacemaker/attrd

├─25666 /usr/libexec/pacemaker/pengine

└─25667 /usr/libexec/pacemaker/crmd

2月 22 15:35:52 gdb1 crmd[25667]: notice: Fencer successfully connected

2月 22 15:36:11 gdb1 crmd[25667]: notice: State transition S_ELECTION -> S_INTEGRATION

2月 22 15:36:12 gdb1 pengine[25666]: error: Resource start-up disabled since no STONITH resources have been defined

2月 22 15:36:12 gdb1 pengine[25666]: error: Either configure some or disable STONITH with the stonith-enabled option

2月 22 15:36:12 gdb1 pengine[25666]: error: NOTE: Clusters with shared data need STONITH to ensure data integrity

2月 22 15:36:12 gdb1 pengine[25666]: notice: Delaying fencing operations until there are resources to manage

2月 22 15:36:12 gdb1 pengine[25666]: notice: Calculated transition 0, saving inputs in /var/lib/pacemaker/pengine/pe-input-0.bz2

2月 22 15:36:12 gdb1 pengine[25666]: notice: Configuration ERRORs found during PE processing. Please run "crm_verify -L" to identify issues.

2月 22 15:36:12 gdb1 crmd[25667]: notice: Transition 0 (Complete=0, Pending=0, Fired=0, Skipped=0, Incomplete=0, Source=/var/lib/pacemaker/pengine/pe-input-0.bz2): Complete

2月 22 15:36:12 gdb1 crmd[25667]: notice: State transition S_TRANSITION_ENGINE -> S_IDLE

[#33#root@gdb1 ~ 15:37:43]33 pcs property set stonith-enabled=false

[#34#root@gdb1 ~ 15:48:20]34 systemctl status pacemaker

● pacemaker.service - Pacemaker High Availability Cluster Manager

Loaded: loaded (/usr/lib/systemd/system/pacemaker.service; disabled; vendor preset: disabled)

Active: active (running) since 三 2023-02-22 15:35:48 CST; 12min ago

Docs: man:pacemakerd

https://clusterlabs.org/pacemaker/doc/en-US/Pacemaker/1.1/html-single/Pacemaker_Explained/index.html

Main PID: 25661 (pacemakerd)

Tasks: 7

Memory: 51.7M

CGroup: /system.slice/pacemaker.service

├─25661 /usr/sbin/pacemakerd -f

├─25662 /usr/libexec/pacemaker/cib

├─25663 /usr/libexec/pacemaker/stonithd

├─25664 /usr/libexec/pacemaker/lrmd

├─25665 /usr/libexec/pacemaker/attrd

├─25666 /usr/libexec/pacemaker/pengine

└─25667 /usr/libexec/pacemaker/crmd

2月 22 15:36:12 gdb1 pengine[25666]: notice: Calculated transition 0, saving inputs in /var/lib/pacemaker/pengine/pe-input-0.bz2

2月 22 15:36:12 gdb1 pengine[25666]: notice: Configuration ERRORs found during PE processing. Please run "crm_verify -L" to identify issues.

2月 22 15:36:12 gdb1 crmd[25667]: notice: Transition 0 (Complete=0, Pending=0, Fired=0, Skipped=0, Incomplete=0, Source=/var/lib/pacemaker/pengine/pe-input-0.bz2): Complete

2月 22 15:36:12 gdb1 crmd[25667]: notice: State transition S_TRANSITION_ENGINE -> S_IDLE

2月 22 15:48:20 gdb1 crmd[25667]: notice: State transition S_IDLE -> S_POLICY_ENGINE

2月 22 15:48:21 gdb1 pengine[25666]: warning: Blind faith: not fencing unseen nodes

2月 22 15:48:21 gdb1 pengine[25666]: notice: Delaying fencing operations until there are resources to manage

2月 22 15:48:21 gdb1 pengine[25666]: notice: Calculated transition 1, saving inputs in /var/lib/pacemaker/pengine/pe-input-1.bz2

2月 22 15:48:21 gdb1 crmd[25667]: notice: Transition 1 (Complete=0, Pending=0, Fired=0, Skipped=0, Incomplete=0, Source=/var/lib/pacemaker/pengine/pe-input-1.bz2): Complete

2月 22 15:48:21 gdb1 crmd[25667]: notice: State transition S_TRANSITION_ENGINE -> S_IDLE

[#35#root@gdb1 ~ 15:48:31]35

Verify that the pcs cluster is healthy; no output means no problems were found:

[#35#root@gdb1 ~ 15:48:31]35 crm_verify -L

[#36#root@gdb1 ~ 17:33:31]36

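2.6 Installing ldirectord (on all three servers)

Download ldirectord

Download page: https://rpm.pbone.net/info_idpl_23860919_distro_Centos_6_com_ldirectord-3.9.5-3.1.x86_64.rpm.html

Open the page in a new tab to obtain the file address, then download it with a download manager such as Xunlei.

Download the dependency package ipvsadm. Choose the build that matches the server architecture (the command below fetches the aarch64 build):

[#10#root@gdb1 ~ 19:51:20]10 wget http://mirror.centos.org/altarch/7/os/aarch64/Packages/ipvsadm-1.27-8.el7.aarch64.rpm

Run the installation. If further dependencies are reported during installation, resolve them yourself:

[#11#root@gdb1 ~ 19:51:29]11 yum -y install ldirectord-3.9.5-3.1.x86_64.rpm ipvsadm-1.27-8.el7.aarch64.rpm

Create the configuration file /etc/ha.d/ldirectord.cf with the following content. In the actual file, the options that belong to a virtual= entry must be indented beneath it: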

checktimeout=3

checkinterval=1

autoreload=yes

logfile="/var/log/ldirectord.log"

quiescent=no

virtual=172.17.129.1:6446

    real=172.17.140.25:6446 gate

    real=172.17.140.24:6446 gate

    real=172.17.139.164:6446 gate

    scheduler=rr

    service=mysql

    protocol=tcp

    checkport=6446

    checktype=connect

    login="root"

    passwd="Abc1234567*"

    database="information_schema"

    request="SELECT 1"

virtual=172.17.129.1:6447

    real=172.17.140.25:6447 gate

    real=172.17.140.24:6447 gate

    real=172.17.139.164:6447 gate

    scheduler=rr

    service=mysql

    protocol=tcp

    checkport=6447

    checktype=connect

    login="root"

    passwd="Abc1234567*"

    database="information_schema"

    request="SELECT 1"

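Parameter notes

- checktimeout=3: how long to wait for a backend server's health check, in seconds

- checkinterval=1: interval between two checks, in seconds

- autoreload=yes: reload the configuration automatically when the file changes, so that real servers can be added or removed without a restart

- logfile="/var/log/ldirectord.log": full path of the log file

- quiescent=no: when a real server fails, remove it from the table, which drops all of its connections

- virtual=172.17.129.1:6446: the VIP and port

- real=172.17.140.25:6446 gate: a real server; gate selects direct routing

- scheduler=rr: scheduling algorithm; rr is round robin, wrr is weighted round robin

- service=mysql: the service ldirectord uses when checking the health of a real server

- protocol=tcp: service protocol

- checktype=connect: the method the ldirectord daemon uses to monitor the real servers; connect tests whether a TCP connection can be opened

- checkport=6446: port used for the health check

- login="root": user name used for the health check

- passwd="Abc1234567*": password used for the health check

- database="information_schema": default database accessed by the health check

- request="SELECT 1": statement executed by the health check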
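Because ldirectord expects the options of a virtual service to be indented under its `virtual=` line, a quick check for unindented options can catch a file whose indentation was lost in copying. This is a minimal sketch: the function name `find_unindented_options` is hypothetical, and the option list is limited to the per-virtual options used in this article's file.

```shell
#!/bin/bash
# Hypothetical check: list per-virtual options that start in column 1.
# Assumption: the names below are the per-virtual options used in this article's file.
find_unindented_options() {
    awk '/^(real|scheduler|service|protocol|checkport|checktype|login|passwd|database|request)[ \t]*=/ {
             printf "line %d: %s\n", NR, $0
         }' "$1"
}

# Usage: prints nothing when every per-virtual option is indented.
#   find_unindented_options /etc/ha.d/ldirectord.cf
```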

Copy the finished configuration file to the other two servers:

[#22#root@gdb1 ~ 20:51:57]22 cd /etc/ha.d/

[#23#root@gdb1 /etc/ha.d 20:52:17]23 scp ldirectord.cf gdb2:`pwd`

ldirectord.cf 100% 1300 1.1MB/s 00:00

[#24#root@gdb1 /etc/ha.d 20:52:26]24 scp ldirectord.cf gdb3:`pwd`

ldirectord.cf 100% 1300 1.4MB/s 00:00

[#25#root@gdb1 /etc/ha.d 20:52:29]25

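2.7 Configuring the VIP on the loopback interface (on all three servers)

This step is required for load balancing inside the pcs cluster. The VIP is configured on the lo interface of every real server so that each of them accepts traffic addressed to the VIP, while the ARP settings keep the real servers from answering ARP requests for it. Without this step, load balancing does not work. The script, vip.sh, is shown below; place it in the mysql_bin directory.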

#!/bin/bash

. /etc/init.d/functions

SNS_VIP=172.17.129.1

case "$1" in

start)

ifconfig lo:0 $SNS_VIP netmask 255.255.240.0 broadcast $SNS_VIP

# /sbin/route add -host $SNS_VIP dev lo:0

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p >/dev/null 2>&1

echo "RealServer Start OK"

;;

stop)

ifconfig lo:0 down

# route del $SNS_VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "RealServer Stopped"

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

exit 0

Apply the configuration:

# sh vip.sh start

Remove the configuration:

# sh vip.sh stop

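2.8 Adding cluster resources (on any one node)

Add the vip resource in pcs:

[#6#root@gdb1 ~ 11:27:30]6 pcs resource create vip --disabled ocf:heartbeat:IPaddr nic=eth0 ip=172.17.129.1 cidr_netmask=24 broadcast=172.17.143.255 op monitor interval=5s timeout=20s

Command breakdown (the items below describe the companion lvs resource, which uses the ocf:heartbeat:ldirectord agent):

- pcs resource create: the pcs command that starts the creation of a resource object

- lvs: the name of the resource object; it can be chosen freely

- --disabled: create the resource in a disabled state, so that the Pacemaker cluster does not use it before it is fully configured

- ocf:heartbeat:ldirectord: tells Pacemaker to manage the LVS load balancer with the ldirectord agent from the heartbeat provider (ocf:heartbeat); the configuration file is /etc/ha.d/ldirectord.cf, created above

- op monitor interval=10s timeout=10s: defines the operation used to monitor the LVS resource. interval=10s means Pacemaker checks the resource state every 10 seconds; timeout=10s means Pacemaker waits up to 10 seconds for a response and treats the resource as unavailable if none arrives in that time.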
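The breakdown above describes an `lvs` resource, but the command that creates it does not appear in the text. Reconstructed from that breakdown, it would presumably look like the line below; treat this as an assumption rather than the author's exact command. The function name `lvs_resource_command` is hypothetical, and the sketch only prints the command so that it can be reviewed first.

```shell
#!/bin/bash
# Reconstructed from the parameter breakdown; the exact original command is an assumption.
# The ldirectord agent is assumed to read its default file, /etc/ha.d/ldirectord.cf.
lvs_resource_command() {
    echo "pcs resource create lvs --disabled ocf:heartbeat:ldirectord op monitor interval=10s timeout=10s"
}

# Review the printed command, then run it on any one node,
# for example with: lvs_resource_command | sh
lvs_resource_command
```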

After creating the resources, check their state:

[#9#root@gdb1 ~ 11:35:42]9 pcs resource show

vip (ocf::heartbeat:IPaddr): Stopped (disabled)

lvs (ocf::heartbeat:ldirectord): Stopped (disabled)

[#10#root@gdb1 ~ 11:35:48]10

Create a resource group and add the resources to it:

[#10#root@gdb1 ~ 11:37:36]10 pcs resource group add dbservice vip

[#11#root@gdb1 ~ 11:37:40]11 pcs resource group add dbservice lvs

[#12#root@gdb1 ~ 11:37:44]12

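2.9 Starting and stopping the cluster

Starting the cluster

Start the resources:

# pcs resource enable vip lvs

or

# pcs resource enable dbservice

If errors were recorded earlier, clear them with the commands below and then start the resources:

# pcs resource cleanup vip

# pcs resource cleanup lvs

Confirm the startup state with pcs status: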

[#54#root@gdb1 /etc/ha.d 15:54:22]54 pcs status

Cluster name: gdb_ha

Stack: corosync

Current DC: gdb1 (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum

Last updated: Thu Feb 23 15:55:27 2023

Last change: Thu Feb 23 15:53:55 2023 by hacluster via crmd on gdb2

3 nodes configured

2 resource instances configured

Online: [ gdb1 gdb2 gdb3 ]

Full list of resources:

Resource Group: dbservice

lvs (ocf::heartbeat:ldirectord): Started gdb2

vip (ocf::heartbeat:IPaddr): Started gdb3

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[#55#root@gdb1 /etc/ha.d 15:55:27]55

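Explanation of the output

Cluster name: gdb_ha: the cluster is named gdb_ha.

Stack: corosync: the cluster uses corosync as its communication stack.

Current DC: gdb1 (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum: the current Designated Controller (DC) is gdb1, running version 1.1.23-1.el7_9.1-9acf116022, and the partition that node belongs to has quorum.

Last updated: Thu Feb 23 15:55:27 2023: the cluster status was last refreshed at 15:55:27 on 23 February 2023.

Last change: Thu Feb 23 15:53:55 2023 by hacluster via crmd on gdb2: the cluster configuration was last changed at 15:53:55 on 23 February 2023 by the user hacluster, through crmd on node gdb2.

3 nodes configured: the cluster has 3 nodes configured.

2 resource instances configured: the cluster has 2 resource instances configured.

Online: [ gdb1 gdb2 gdb3 ]: the nodes currently online are gdb1, gdb2, and gdb3.

Full list of resources: lists every resource in the cluster with its name, type, node, and current state. Here dbservice is the resource group, lvs is a resource of type ocf::heartbeat:ldirectord, and vip is a resource of type ocf::heartbeat:IPaddr.

Daemon Status: lists the state of the Pacemaker components corosync, pacemaker, and pcsd. All three are active/enabled, meaning they are running and enabled at boot.

On the server where pcs status shows vip as Started (gdb3 in the output above), start the ldirectord service: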

[#19#root@gdb3 ~ 11:50:51]19 systemctl start ldirectord

[#20#root@gdb3 ~ 11:50:58]20

[#20#root@gdb3 ~ 11:50:59]20 systemctl status ldirectord

● ldirectord.service - LSB: Control Linux Virtual Server via ldirectord on non-heartbeat systems

Loaded: loaded (/etc/rc.d/init.d/ldirectord; bad; vendor preset: disabled)

Active: active (running) since 四 2023-02-23 11:50:58 CST; 2s ago

Docs: man:systemd-sysv-generator(8)

Process: 1472 ExecStop=/etc/rc.d/init.d/ldirectord stop (code=exited, status=0/SUCCESS)

Process: 1479 ExecStart=/etc/rc.d/init.d/ldirectord start (code=exited, status=0/SUCCESS)

Tasks: 1

Memory: 15.8M

CGroup: /system.slice/ldirectord.service

└─1484 /usr/bin/perl -w /usr/sbin/ldirectord start

2月 23 11:50:58 gdb3 ldirectord[1479]: at /usr/sbin/ldirectord line 838.

2月 23 11:50:58 gdb3 ldirectord[1479]: Subroutine main::unpack_sockaddr_in6 redefined at /usr/share/perl5/vendor_perl/Exporter.pm line 66.

2月 23 11:50:58 gdb3 ldirectord[1479]: at /usr/sbin/ldirectord line 838.

2月 23 11:50:58 gdb3 ldirectord[1479]: Subroutine main::sockaddr_in6 redefined at /usr/share/perl5/vendor_perl/Exporter.pm line 66.

2月 23 11:50:58 gdb3 ldirectord[1479]: at /usr/sbin/ldirectord line 838.

2月 23 11:50:58 gdb3 ldirectord[1479]: Subroutine main::pack_sockaddr_in6 redefined at /usr/sbin/ldirectord line 3078.

2月 23 11:50:58 gdb3 ldirectord[1479]: Subroutine main::unpack_sockaddr_in6 redefined at /usr/sbin/ldirectord line 3078.

2月 23 11:50:58 gdb3 ldirectord[1479]: Subroutine main::sockaddr_in6 redefined at /usr/sbin/ldirectord line 3078.

2月 23 11:50:58 gdb3 ldirectord[1479]: success

2月 23 11:50:58 gdb3 systemd[1]: Started LSB: Control Linux Virtual Server via ldirectord on non-heartbeat systems.

[#21#root@gdb3 ~ 11:51:01]21

The cluster is now started.

Stopping the cluster

Stop the resources:

# pcs resource disable vip lvs

or

# pcs resource disable dbservice

# systemctl stop corosync pacemaker pcsd ldirectord

Uninstalling the cluster

# pcs cluster stop

# pcs cluster destroy

# systemctl stop pcsd pacemaker corosync ldirectord

# systemctl disable pcsd pacemaker corosync ldirectord

# yum remove -y pacemaker corosync pcs ldirectord

# rm -rf /var/lib/pcsd/* /var/lib/corosync/*

# rm -f /etc/ha.d/ldirectord.cf

3. High-availability and load-balancing tests

From 172.17.139.62, access the VIP in a for loop and observe how the load is balanced.

Note: the VIP cannot be accessed from the real servers themselves, so a fourth server is needed for this check.

# for x in {1..100}; do mysql -uroot -pAbc1234567* -h172.17.129.1 -P6446 -N -e 'select sleep(60)' 2> /dev/null & done

On the server where the pcs resource lvs is running, run ipvsadm -Ln:

[#26#root@gdb1 ~ 15:52:28]26 ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

  -> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.129.1:6446 rr

  -> 172.17.139.164:6446 Route 1 33 0

  -> 172.17.140.24:6446 Route 1 34 0

  -> 172.17.140.25:6446 Route 1 33 0

TCP 172.17.129.1:6447 rr

  -> 172.17.139.164:6447 Route 1 0 0

  -> 172.17.140.24:6447 Route 1 0 0

  -> 172.17.140.25:6447 Route 1 0 0

[#27#root@gdb1 ~ 15:52:29]27

The connections are spread evenly across the servers.
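To compare the distribution without reading the table by eye, the active-connection column can be extracted from the `ipvsadm -Ln` output. A minimal sketch, assuming the column layout shown above; the function name `active_conns` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: read "ipvsadm -Ln" output on stdin and print the
# active-connection count per real server as "ADDRESS:PORT COUNT".
active_conns() {
    # Real-server rows start with "->"; the header row is skipped because
    # its fourth field ("Weight") is not numeric.
    awk '$1 == "->" && $4 ~ /^[0-9]+$/ { print $2, $5 }'
}

# Usage on the node that runs the lvs resource:
#   ipvsadm -Ln | active_conns
```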

On each server, confirm that the requests have arrived with netstat -alntp | grep 172.17.139.62, where 172.17.139.62 is the address the requests come from.

[#28#root@gdb1 ~ 15:53:10]28 netstat -alntp| grep 172.17.139.62 | grep 6446

tcp 0 0 172.17.129.1:6446 172.17.139.62:54444 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54606 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54592 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54492 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54580 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54432 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54586 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54552 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54404 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54566 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54516 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54560 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54450 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54480 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54540 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54522 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54462 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54528 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54534 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54598 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54498 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54426 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54510 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54504 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54412 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54612 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54456 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54468 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54474 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54486 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54574 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54438 ESTABLISHED 1902/./mysqlrouter

tcp 0 0 172.17.129.1:6446 172.17.139.62:54546 ESTABLISHED 1902/./mysqlrouter

[#29#root@gdb1 ~ 15:53:13]29

Stop MySQL Router on gdb3, send 100 new requests, and observe how they are routed:

[#29#root@gdb1 ~ 15:55:02]29 ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

  -> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.129.1:6446 rr

  -> 172.17.140.24:6446 Route 1 0 34

  -> 172.17.140.25:6446 Route 1 0 33

TCP 172.17.129.1:6447 rr

  -> 172.17.140.24:6447 Route 1 0 0

  -> 172.17.140.25:6447 Route 1 0 0

[#30#root@gdb1 ~ 15:55:03]30

[#30#root@gdb1 ~ 15:55:21]30 ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

  -> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 172.17.129.1:6446 rr

  -> 172.17.140.24:6446 Route 1 0 34

  -> 172.17.140.25:6446 Route 1 0 33

TCP 172.17.129.1:6447 rr

  -> 172.17.140.24:6447 Route 1 50 0

  -> 172.17.140.25:6447 Route 1 50 0

[#31#root@gdb1 ~ 15:55:21]31

The output shows that once MySQL Router on gdb3 is stopped, its forwarding rules are removed from the table, and the 100 new requests are split evenly between the two remaining servers, as expected.

四、问题处理pcs

cluster启动异常

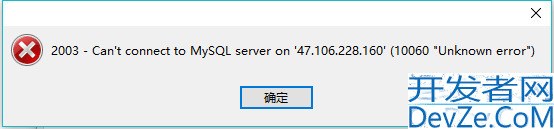

# pcs cluster start --all 报错:unable to connect to [node], try setting higher timeout in --request-timeout option

添加超时参数,再次启动

# pcs cluster start --all --request-timeout 120000 # pcs cluster enable --all

也有可能是其他节点的pcsd服务没有启动成功,启动其他节点pcsd服务后再启动pcs cluster

两个节点的pcs集群,需要关闭投票机制

# pcs property set no-quorum-policy=ignore

日志文件查看,如果启动、运行异常,可以查看下面两个日志文件,分析具体异常原因

# tail -n 30 /var/log/ldirectord.log # tail -n 30 /var/log/pacemaker.log

pcs status输出offline节点

[#4#root@gdb1 ~ 11:21:23]4 pcs status

Cluster name: db_ha_lvs

Stack: corosync

Current DC: gdb2 (version 1.1.23-1.el7_9.1-9acf116022) - partition with quorum

Last updated: Thu Mar 2 11:21:27 2023

Last change: Wed Mar 1 16:01:56 2023 by root via cibadmin on gdb1

3 nodes configured

2 resource instances configured (2 DISABLED)

Online: [ gdb1 gdb2 ]

OFFLINE: [ gdb3 ]

Full list of resources:

Resource Group: dbservice

vip (ocf::heartbeat:IPaddr): Stopped (disabled)

lvs (ocf::heartbeat:ldirectord): Stopped (disabled)

Daemon Status:

corosync: active/enabled

pacemaker: active/enabled

pcsd: active/enabled

[#5#root@gdb1 ~ 11:21:27]5

The node left the cluster after sh vip.sh start was run. The interface configuration on that node:

[#28#root@gdb3 /data/dbscale/lvs 10:06:10]28 ifconfig -a

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.139.164 netmask 255.255.240.0 broadcast 172.17.143.255

inet6 fe80::216:3eff:fe07:3778 prefixlen 64 scopeid 0x20<link>

ether 00:16:3e:07:37:78 txqueuelen 1000 (Ethernet)

RX packets 17967625 bytes 2013372790 (1.8 GiB)

RX errors 0 dropped 13 overruns 0 frame 0

TX packets 11997866 bytes 7616182902 (7.0 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 177401 bytes 16941285 (16.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 177401 bytes 16941285 (16.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether 52:54:00:96:cf:dd txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0-nic: flags=4098<BROADCAST,MULTICAST> mtu 1500

ether 52:54:00:96:cf:dd txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

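The real servers are 172.17.140.24, 172.17.140.25, and 172.17.139.164, and with the netmask 255.255.240.0 on lo:0 the node could no longer communicate with them. After the netmask in vip.sh was changed to 255.255.0.0 and the script was started again, access returned to normal. The revised script is: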

#!/bin/bash

. /etc/init.d/functions

SNS_VIP=172.17.129.1

case "$1" in

start)

ifconfig lo:0 $SNS_VIP netmask 255.255.0.0 broadcast $SNS_VIP

# /sbin/route add -host $SNS_VIP dev lo:0

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

sysctl -p >/dev/null 2>&1

echo "RealServer Start OK"

;;

stop)

ifconfig lo:0 down

# route del $SNS_VIP >/dev/null 2>&1

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce

echo "RealServer Stopped"

;;

*)

echo "Usage: $0 {start|stop}"

exit 1

esac

exit 0
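To see which addresses a given lo:0 netmask covers, the network arithmetic can be worked through in shell. The sketch below is only an aid for reasoning about the netmask change in vip.sh: the function names `ip_to_int` and `covered_by` are hypothetical, and the result does not by itself explain why 255.255.0.0 behaved differently from 255.255.240.0 in the author's environment. For comparison it also includes 255.255.255.255, a host mask that LVS direct-routing setups commonly use on lo:0 so that the loopback alias covers only the VIP itself.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds (exit 0) when ADDR ($3) lies inside the network defined by VIP ($1) and NETMASK ($2).
covered_by() {
    local vip mask addr
    vip=$(ip_to_int "$1"); mask=$(ip_to_int "$2"); addr=$(ip_to_int "$3")
    [ $(( vip & mask )) -eq $(( addr & mask )) ]
}

# Which real servers fall inside the VIP's network for each candidate netmask?
for mask in 255.255.240.0 255.255.0.0 255.255.255.255; do
    for rs in 172.17.140.25 172.17.140.24 172.17.139.164; do
        if covered_by 172.17.129.1 "$mask" "$rs"; then
            echo "$mask covers $rs"
        fi
    done
done
```

With the addresses from this article, both 255.255.240.0 and 255.255.0.0 cover all three real servers, while 255.255.255.255 covers none of them.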

Enjoy GreatSQL

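About GreatSQL

GreatSQL is a MySQL branch maintained by Wanli Database (万里数据库). It focuses on improving the reliability and performance of MGR, supports InnoDB parallel query, and is intended for financial-grade applications.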
