Big-Oh : How can O(n) + O(n) + ... + O(n) be equal to O(n^2)?

I'm having a hard time understanding the following statements from Algorithms by S. Dasgupta, C.H. Papadimitriou, and U.V. Vazirani - page 24 that they represent the sum of O(n) as O(n2). But my understanding of O(n) is a linear function of n, and it can never be quadratic no matter how many times the linear functions are added (for any given n). They are giving the explanation lik开发者_开发百科e below for the example of 13 x 11 in binary notation.

1 1 0 1

x 1 0 1 1

----------

1 1 0 1 (1101 times 1)

1 1 0 1 (1101 times 1, shifted once)

0 0 0 0 (1101 times 0, shifted twice)

+ 1 1 0 1 (1101 times 1, shifted thrice)

----------------

1 0 0 0 1 1 1 1 (binary 143)

If x and y (1101 and 1011 here) are both n bits, then there are n intermediate rows, with lengths of up to 2n bits (taking the shifting into account). The total time taken to add up these rows, doing two numbers at a time, is O(n) + O(n) + ... + O(n), which is O(n2), quadratic in the size of the inputs.

Sorry if this is obvious, but could somebody please help me understand why this is O(n2)?

If there are n operations that have a complexity of O(n), then the total complexity is n·O(n) which is O(n2).

If you do something which will take N seconds, and repeat that N times. How many seconds will it take to finish?

N = 2 => 2*2 seconds.

N = 3 => 3*3 seconds.

N = 4 => 4*4 seconds.

=> N^2 seconds.

Something that is O(n) isn't O(n2)** if it's multiplied by a constant factor.

n is O(n)

7n is O(n)

100000n is O(n)

n*n is O(n^2)

Here's the formal definition of big-O, quoted from Wikipedia:

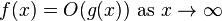

Let f(x) and g(x) be two functions defined on some subset of the real numbers. One writes

if and only if, for sufficiently large values of x, f(x) is at most a constant multiplied by g(x) in absolute value. That is, f(x) = O(g(x)) if and only if there exists a positive real number M and a real number x0 such that

** WARNING: Big O is an upper boundary. Everything that's O(n) is also technically O(n2). See Big Theta and Big Omega for distinction.

http://en.wikipedia.org/wiki/Big_O_notation

When you say "any given n," you're forgetting that when when the "given n" is n itself, then you're doing an O(n) operation n times. That's n2.

If you have an operation which is O(n) and you do it n times, that is the definition of O(n^2).

You are getting confused with a constant number of O(n) operations which is always O(n).

In the binary multiplication example, the nmber of O(n) operations depends on the length of the input, n.

A O(n) operation done n times will be O(n^2). More strictly if the number of O(n) operations is linearly dependent on the input size, n, then you will have a case of O(n^2).

Many answers here forgot about important assumption. If you have n operations, each is O(n) it does not automatically follow that the sum is O(n2)! Say k-th operation takes k*n time (so it is constant times n) - first operation takes n time, second 2*n etc. Then the sum of first n operations is O(n3).

For the disbelievers, here's an example of that misuse, from CLRS:

False claim:

n

Σ k = O(n)

i=1

Proof by induction:

For n=1, 1 = O(1).

For n+1, assuming hypothesis holds for n,

n+1 n

Σ k = ( Σ k) + (n+1) = O(n) + n = O(n)

i=1 i=1

The "proof" is wrong, the sum is O(n2).

You can only say that O(n) + ... + O(n) is O(n2) if the constants hidden in the big O are all bounded by some constant. In that case, you can write

O(n) + ... + O(n) <= kn + kn + ... + kn <= kn2 = O(n2).

If the constants aren't bounded, this is wrong.

Basic idea: because the constant factor hidden in the O(n) would increase as n increases, and would therefore be not-constant and create a contradiction.

One of the downsides of the Big-O notation is that it encourages misconceptions like the subject of your question. O(n) + O(n) suggests you are adding the class of linear functions to itself, but what it really means is "the class of the sum of any two linear functions". The sum is linear again, which is nice, but that result happens to depend on there being only two (or any constant number) of summed linear functions.

So, in context, your question actually means 'why is the sum of increasing numbers of linear functions not also linear?'. The proof sketch is pretty simple:

'

- Assume, for simplicity, that all linear functions are of the form f(n) = c*n, c >= 1

- Suppose we have an increasing-as-N-increases set of summed linear functions

- Assume the sum of that set of functions is linear, ie. of the form c*n

- Try to find a value of c that works for all values of N

- But, for any c that works for N=x, it will fail for N=x+1 because there is another addition

- Contradiction

- The sum of the set of functions is not linear

You have to add two n-sized numbers (taking O(n) time), n times. n(O(n)) = O(n*n) = O(n^2).

The answer is very easy:

O(n) + O(n) + ・・・+ O(n) = X(n)

If X is constant and does not change with input then it is still O(n).

加载中,请稍侯......

加载中,请稍侯......

精彩评论