Sobel Edge Detection in Android

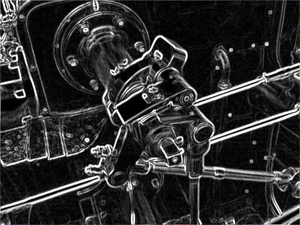

As part of an application that I'm developing for Android, I'd like to show the user an edge-detected version of an image they have taken (something similar to the example image above).

To achieve this I've been looking at the Sobel operator and how to implement it in Java. However, many of the examples that I've found make use of objects and methods found in AWT (like this example) that aren't part of Android.

My question is then really, does Android provide any alternatives to the features of AWT that have been used in the above example? If we were to rewrite that example just using the libraries built into Android, how would we go about it?

The question and answer are 3 years old... @reflog's solution works for a simple task like edge detection, but it's slow.

I use GPUImage on iOS for edge detection tasks. There is an equivalent library on Android: https://github.com/CyberAgent/android-gpuimage/tree/master

It's hardware accelerated, so it's supposed to be very fast. Here is the Sobel edge detection filter: https://github.com/CyberAgent/android-gpuimage/blob/master/library/src/jp/co/cyberagent/android/gpuimage/GPUImageSobelEdgeDetection.java

According to the docs, you can simply do this:

    Uri imageUri = ...;
    mGPUImage = new GPUImage(this);
    mGPUImage.setGLSurfaceView((GLSurfaceView) findViewById(R.id.surfaceView));
    mGPUImage.setImage(imageUri); // this loads the image on the current thread, so it should be run in a background thread
    mGPUImage.setFilter(new GPUImageSobelEdgeDetection());

    // Later, when the image should be saved:
    mGPUImage.saveToPictures("GPUImage", "ImageWithFilter.jpg", null);

Another option is RenderScript, which lets you process each pixel in parallel and do whatever you want with it. I haven't seen any image processing library built with it yet.

Since you don't have BufferedImage in Android, you can do all the basic operations yourself:

    Bitmap b = ...
    int width = b.getWidth();
    int height = b.getHeight();
    int stride = b.getRowBytes(); // bytes per row, only needed for raw buffer access
    for (int x = 0; x < width; x++) {
        for (int y = 0; y < height; y++) {
            int pixel = b.getPixel(x, y); // packed ARGB value
            // you have the source pixel, now transform it and write to destination
        }
    }

As you can see, this covers almost everything you need for porting that AWT example (just change the 'convolvePixel' function).
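To make the per-pixel approach concrete, here is a minimal sketch of a Sobel pass over packed ARGB pixels. It is not the AWT example's code: the class name `SobelSketch`, the helper `luminance`, the Rec. 601 gray weights, and the choice to leave border pixels black are all assumptions made for illustration. It works on a plain `int[]` so it has no Android or AWT dependency.

```java
import java.util.Arrays;

/** Illustrative Sobel edge detector on packed ARGB pixels (hypothetical helper class). */
final class SobelSketch {
    private SobelSketch() {}

    /** Converts a packed ARGB pixel to a gray level in [0, 255] using Rec. 601 weights. */
    static int luminance(int argb) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        return (299 * r + 587 * g + 114 * b) / 1000;
    }

    /**
     * Returns a new ARGB array in which each interior pixel holds the clamped
     * gradient magnitude as a gray value. Border pixels stay opaque black
     * because the 3x3 window does not fit there.
     */
    static int[] sobel(int[] src, int width, int height) {
        int[] gray = new int[src.length];
        for (int i = 0; i < src.length; i++) {
            gray[i] = luminance(src[i]);
        }

        int[] dst = new int[src.length];
        Arrays.fill(dst, 0xFF000000); // opaque black
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int i = y * width + x;
                // Horizontal gradient: right column minus left column.
                int gx = -gray[i - width - 1] + gray[i - width + 1]
                        - 2 * gray[i - 1] + 2 * gray[i + 1]
                        - gray[i + width - 1] + gray[i + width + 1];
                // Vertical gradient: bottom row minus top row.
                int gy = -gray[i - width - 1] - 2 * gray[i - width] - gray[i - width + 1]
                        + gray[i + width - 1] + 2 * gray[i + width] + gray[i + width + 1];
                int mag = Math.min(255, (int) Math.sqrt(gx * gx + gy * gy));
                dst[i] = 0xFF000000 | (mag << 16) | (mag << 8) | mag;
            }
        }
        return dst;
    }
}
```

On Android, the input array could be filled with `b.getPixels(pixels, 0, width, 0, 0, width, height)` and the result turned back into an image with `Bitmap.createBitmap(result, width, height, Bitmap.Config.ARGB_8888)`. Reading all pixels once this way also avoids calling `getPixel` for every pixel.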

Another option is to use OpenCV, which has a great implementation for Android.

The Imgproc.Sobel() method takes an image in the form of a 'Mat' type, which is easily loaded from a resource or bitmap. The input Mat should be a grayscale image, which can also be created with OpenCV.

    Mat src = Highgui.imread(getClass().getResource(
            "/SomeGrayScaleImage.jpg").getPath());

Then run the Sobel edge detector on it, saving the results in a new Mat. If you want to keep the same image depth, then this will do it:

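    Mat dst = new Mat(); // must be initialized before being passed to Sobel()
    int ddepth = -1; // destination depth; -1 maintains the existing depth from the source
    int dx = 1; // order of the derivative in x
    int dy = 1; // order of the derivative in y
    Imgproc.Sobel(src, dst, ddepth, dx, dy);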

Some reference documentation is here: http://docs.opencv.org/java/org/opencv/imgproc/Imgproc.html#Sobel(org.opencv.core.Mat,%20org.opencv.core.Mat,%20int,%20int,%20int)
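For orientation, these are the standard 3x3 Sobel kernels and the gradient magnitude that edge maps are usually built from, written for an input image I (the sign of each kernel depends on whether it is applied as a convolution or a correlation). In these terms, dx = 1, dy = 0 selects the horizontal derivative and dx = 0, dy = 1 the vertical one. Combining two such results into a magnitude is a common pattern, not something the snippet above does.

```latex
G_x = \begin{bmatrix} -1 & 0 & 1 \\ -2 & 0 & 2 \\ -1 & 0 & 1 \end{bmatrix} * I,
\qquad
G_y = \begin{bmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{bmatrix} * I,
\qquad
|G| = \sqrt{G_x^2 + G_y^2}
```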

For a Gradle build in Android Studio, you can pull in the OpenCV library built for Java from different places, but I also host a recent build. In your build.gradle file, you can add a dependency like the one below; otherwise, the setup is a little tricky.

    dependencies {
        compile 'com.iparse.android:opencv:2.4.8'
    }

If you're using Eclipse, you can check the OpenCV website for details on using OpenCV on Android: http://opencv.org/platforms/android.html

Check a Java implementation here:

http://code.google.com/p/kanzi/source/browse/java/src/kanzi/filter/SobelFilter.java

There is no dependency on Swing/AWT or any other library. It operates directly on the image pixels and it is fast.

The results can be seen here (scroll down):

http://code.google.com/p/kanzi/wiki/Overview
