LINQ and SQL Server performance tuning the SQL Server 2008 database best practice?

My question is what is best practice to optimize performance using LINQ for SQL And performance is response time out in the user interface.

Right now I have some sales data in a SQL Server 2008 database and I display this data (MAT, yearly, in different segments, growth in segment, percent of market growth ,,,,) in charts in a ASP.NET application using LINQ for SQL to constructs Iquerable expressions that are executed

I see the challenge that I have a database and used LINQ to construct all questions and I have no control 开发者_如何学Cwhat SQL is created ( I can track it but ,,,,) and I don't use Stored Procedures so how my data is fetched is like a black box.

Right now I run some unit tests and manual test the application and use the Databasse Engine Tuning Advisor what indexes etc to create....

In addition to that, I'll usually use both SQL profiler and CLR profiler with some simulated users on a large-ish data set, and watch for long-running queries and/or long-running calls through the datacontext (which may signify multiple round-trips happening under the covers). My personal preference is also to disable deferred loading and object tracking on all my datacontexts by default, so I have to opt-IN to multiple round-trips in most cases. While you can't directly affect the SQL that's generated, you can be careful with LoadWith/AssociateWith and make sure that you're not fetching horribly large/inefficient result sets, and break up queries that have lots of expensive joins (sometimes multiple round-trips are cheaper than mondo joins on big tables).

It's all about measurement- use whatever tools you can get your hands on.

Profiling, profiling, profiling. :)

Measure not only timings, but pay attention to I/O as well. A frequently executed query that is I/O intensive can execute fast due to caching, but can in turn have a negative effect on the overall db-server performance since there will be less resources available for other queries.

As you say, L2S can be a bit of a black box, so you need to try to replicate all scenarios and/or profile while the app is in use by real users. Then use that to 1) tweak queries 2) add indexes 3) make any other changes needed to get the performance you need.

I have a profiling tool made specifically for Linq-to-SQL to make it a little bit 'less black box' - it allows you to do runtime profiling while tying the generated queries to the code (call stack) that resulted in a specific query being executed. You can download it and get a free trial license at http://www.huagati.com/L2SProfiler/

The background reason for my profiler is outlined in a bit more detail here: http://huagati.blogspot.com/2009/06/profiling-linq-to-sql-applications.html

...and some advanced profiling options are covered here: http://huagati.blogspot.com/2009/08/walkthrough-of-newest-filters-and.html

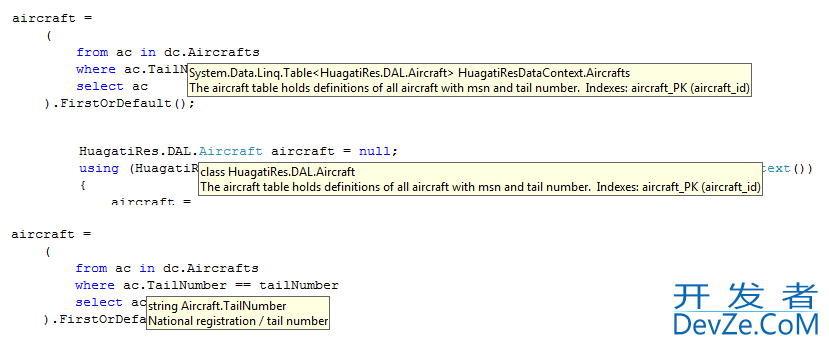

Another thing that may help if you have a lot of tables with a lot of columns is to get index info into the code editor. This is done by adding xml doc-comments with that info to the entity classes and member properties; that info is then displayed in the VS code editor's tooltips:

...that way you can see already while typing queries if there is an index covering the column(s) used in where clauses etc. To avoid having to type all of that in, I have created a tool for that too. See the 'update documentation' feature in http://www.huagati.com/dbmltools/

加载中,请稍侯......

加载中,请稍侯......

精彩评论