How does Google know you are cloaking?

I can't seem to find any information on how Google determines whether you are cloaking your content. How, from a technical standpoint, do you think they are determining this? Are they sending in things other than Googlebot and comparing the results to what Googlebot saw? Do they have a team of human beings comparing? Or can they somehow tell that you have checked the user agent and executed a different code path because you saw "googlebot" in the name?

It's in relation to this question on legitimate URL cloaking for SEO. If the textual content is exactly the same, but the rendering is different (1995-style HTML vs. Ajax vs. Flash), is there really a problem with cloaking?

Thanks for your input on this one.

As far as I know, how Google prepares search engine results is secret and constantly changing. Spoofing different user agents is easy, so they might do that. They also might, in the case of JavaScript, actually render partial or entire pages. "Do they have a team of human beings comparing?" This is doubtful. A lot has been written on Google's crawling strategies including this, but if humans are involved, they're only called in for specific cases. I even doubt this: any person-power spent is probably spent on tweaking the crawling engine.

Google looks at your site while presenting user agents other than Googlebot.
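To make that concrete, here is a rough sketch of how anyone could request the same URL under two user agents and compare the responses. Nothing here is Google's actual code: `build_request`, `fetch_body`, `looks_cloaked`, and the browser user-agent string are made up for illustration, and a raw byte comparison is a deliberately naive assumption.

```python
import urllib.request

# Googlebot's well-known user-agent string, plus an illustrative desktop browser one.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Build a GET request that presents the given User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_body(url: str, user_agent: str, timeout: float = 10.0) -> bytes:
    """Download the raw response body while presenting the given user agent."""
    with urllib.request.urlopen(build_request(url, user_agent), timeout=timeout) as resp:
        return resp.read()


def looks_cloaked(url: str) -> bool:
    """Naive check: flag the URL if the two bodies are not byte-for-byte identical."""
    return fetch_body(url, GOOGLEBOT_UA) != fetch_body(url, BROWSER_UA)
```

An exact comparison like this would flag any page with a timestamp, rotating ad, or session token in it, so in practice the comparison step would have to be fuzzier. A site that checks the requester's IP address instead of the user agent would also not be caught by this.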

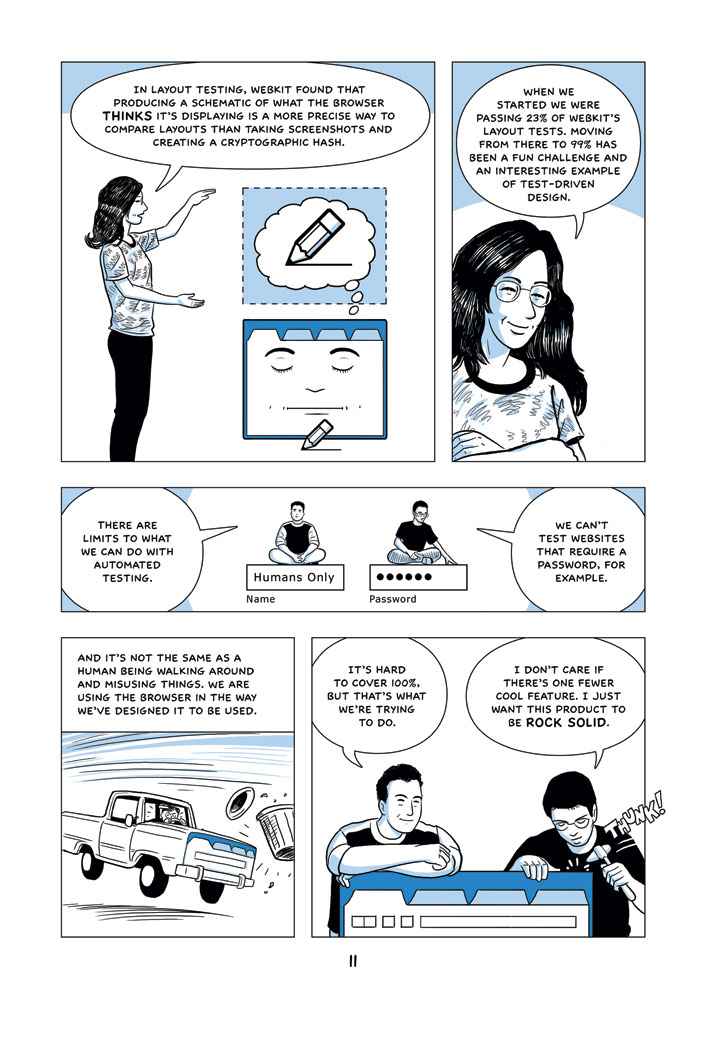

See page 11 of the Google Chrome comic book, which describes (in even simpler than layman's terms) how a Google tool can take a schematic of a web page. They could be using this or similar technology for Google search indexing and cloak detection; at least that would be another good use for it.

Google does hire contractors (indirectly, through an outside agency, for very low pay) to manually review documents returned as search results and judge their relevance to the search terms, quality of translations, etc. I highly doubt that this is their only tool for detecting cloaking, but it is one of them.

In reality, many of Google's algos are trivially reversed and are far from rocket science. In the case of so-called "cloaking detection", all of the previous guesses are on the money (apart from, somewhat ironically, John K lol). If you don't believe me, set up some test sites (inputs) and some 'cloaking test cases' (further inputs), submit your sites to uncle Google (processing) and test your non-assumptions via pseudo-advanced human-based cognitive correlationary quantum perceptions (<-- btw, I made that up for entertainment value (and now I'm nesting parentheses to really mess with your mind :)) AKA "checking Google results to see if you are banned yet" (outputs). Loop until enlightenment == True (noob!) lol

A very simple test would be to compare the file size of the webpage that Googlebot saw against the file size of the same page fetched by a Google alias that looks like a normal user.

This would flag most suspect candidates for closer examination.
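A minimal sketch of that size check, assuming you already have both response bodies in hand. The function name `size_differs` and the 10% tolerance are my own placeholders, not anything Google has published.

```python
def size_differs(bot_body: bytes, user_body: bytes, tolerance: float = 0.10) -> bool:
    """Return True when the two bodies differ in length by more than `tolerance`.

    The difference is measured relative to the larger body, so the result does
    not depend on which response is passed first.
    """
    larger = max(len(bot_body), len(user_body))
    if larger == 0:
        # Two empty responses: nothing to compare, so do not flag.
        return False
    return abs(len(bot_body) - len(user_body)) / larger > tolerance


# A 2% difference passes; a 70% difference gets flagged for a closer look.
print(size_differs(b"x" * 1000, b"x" * 980))  # False
print(size_differs(b"x" * 1000, b"x" * 300))  # True
```

Being this cheap is the whole point: it only narrows the field, and two pages of equal size with different content would slip straight through.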

They request your page using tools like curl and construct a hash of the page fetched without the Googlebot user agent, then construct another hash of the page fetched with the Googlebot user agent. Both hashes must be similar; they have algorithms that compare the hashes and decide whether it is cloaking or not.
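For two hashes to be "similar" they cannot be ordinary cryptographic hashes, since a one-character change scrambles those completely. One technique that does fit the description is a similarity hash such as SimHash, where near-identical documents get fingerprints that differ in only a few bits. The sketch below is my own guess at what such a comparison could look like, not a known Google implementation; `simhash`, `hamming_distance`, `near_duplicate`, and the `max_bits` threshold are all illustrative assumptions.

```python
import hashlib
import re

BITS = 64  # fingerprint width; matches the 8 bytes taken from each token hash


def simhash(text: str) -> int:
    """Compute a 64-bit SimHash fingerprint over the lowercase word tokens."""
    counts = [0] * BITS
    for token in re.findall(r"\w+", text.lower()):
        digest = hashlib.md5(token.encode("utf-8")).digest()
        h = int.from_bytes(digest[:8], "big")
        # Each token votes +1 or -1 on every bit position.
        for i in range(BITS):
            counts[i] += 1 if (h >> i) & 1 else -1
    # A fingerprint bit is set when the positive votes win at that position.
    return sum(1 << i for i in range(BITS) if counts[i] > 0)


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which two fingerprints differ."""
    return bin(a ^ b).count("1")


def near_duplicate(text_a: str, text_b: str, max_bits: int = 3) -> bool:
    """Treat two texts as the same page if their fingerprints differ in few bits.

    The threshold of 3 bits is an arbitrary assumption for this sketch.
    """
    return hamming_distance(simhash(text_a), simhash(text_b)) <= max_bits
```

You would hash the text served to the Googlebot user agent and the text served to a normal-looking one, then treat a large Hamming distance as a sign that the two audiences are seeing different content.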
