Apache crashes in a loop of string concatenation

I have a script that opens a huge XLSX file and reads 3000 rows of data, saving it to a two dimensional array. Of all places for Apache to crash, it does so in a simple loop that builds a MySQL query. I know this because if I r开发者_如何学Goemove the following lines from my application, it runs without issue:

$query = "INSERT INTO `map.lmds.dots` VALUES";

foreach($data as $i => $row)

{

$id = $row["Abonnementsid"];

$eier = $row["Eier"];

$status = $row["Status"];

if($i !== 0) $query .= "\n,";

$query .= "('$id', '$eier', '$status', '0', '0')";

}

echo $query;

I can't see a thing wrong with the code.

I'm using PHPExcel and dBug.php

Why is this script crashing Apache?

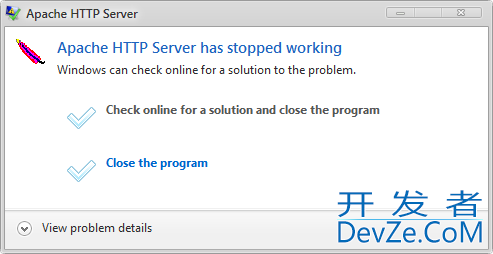

EDIT: Perhaps I should elaborate on what I mean by crash. I mean a classic Windows "Program has stopped working":

EDIT: Another attempt inspired by one of the answers. Apache still crashes:

$query = "INSERT INTO `map.lmds.dots` VALUES";

$records = array();

foreach($data as $i => &$row)

{

$id = $row["Abonnementsid"];

$eier = $row["Eier"];

$status = $row["Status"];

$records[] = "('$id', '$eier', '$status', '0', '0')";

}

echo $query . implode(",", $records);

EDIT: I have narrowed it down further. As soon as I add a foreach loop, Apache crashes.

foreach($data as $i => $row) {};

Like the others respondents have said this is most likely a memory issue, you should check both your Apache error logs and your PHP error logs for more info.

Assuming this is a memory problem, I suggest you change your code so that you execute multiple insert statements inside the foreach loop rather than storing the whole thing in a big string and sending it to the database all at once. Of course, this means that you're making 3000+ calls to the database rather than just one, I'd expect this to be a bit slower, you can mitigate this problem by using a prepared statement which should be a bit more efficient. If this is still too slow, try changing your loop so that you only call the database every N times round the loop.

The amount of string concatenation and the amount of string data involved could be too much to handle at once during the permitted execution time.

You could try to just collect the values in an array and put them together at the end:

$query = "INSERT INTO `map.lmds.dots` VALUES";

$records = array();

foreach($data as $i => $row) {

$records[] = "('".mysql_real_escape_string($row["Abonnementsid"])."', '".mysql_real_escape_string($row["Eier"])."', '".mysql_real_escape_string($row["Status"])."', '0', '0')";

}

$query .= implode("\n,", $records);

Or insert the records in chunks:

$query = "INSERT INTO `map.lmds.dots` VALUES";

$records = array();

foreach($data as $i => $row) {

$records[] = "('".mysql_real_escape_string($row["Abonnementsid"])."', '".mysql_real_escape_string($row["Eier"])."', '".mysql_real_escape_string($row["Status"])."', '0', '0')";

if ($i % 1000 === 999) {

mysql_query($query . implode("\n,", $records));

$records = array();

}

}

if (!empty($records)) {

mysql_query($query . implode("\n,", $records));

}

Also try it with reference in foreach to avoid that an internal copy of the array is made:

foreach($data as $i => &$row) {

// …

}

This does sounds like a memory issue. Maybe it has nothing to do with the loop building the SQL query. It could be related to reading the "very" large file before that. And the loop pushing memory usage over the limit. Did you try freeing up memory after reading the file?

You can use memory_get_peak_usage() and memory_get_usage() to get some more info about consumed memory.

If that doesn't solve your issue. Install a debugger like Xdebug or Zend Debugger and do some profiling.

Alright, it turns out that updating PHP from 5.3.1 to 5.3.5 made the problem go away. I still have no idea as to what made PHP crash in the first place, but I suppose my PHP could simply have been broken and in need of a reinstall.

加载中,请稍侯......

加载中,请稍侯......

精彩评论